Tutorial: Save 99% (Yes, 99%) Yearly by Managing Logs the AWS Way

Deploying Logging Agents with Bacalhau's Daemon Jobs

Welcome to another installment in our Bacalhau blog series! If you've followed our previous post on Save $2.5M Per Year by Managing Logs the AWS Way, you'll love what we've got today. We're going to walk you through setting up your very own log orchestration solution with Bacalhau. You'll learn to deploy logging agents as daemon jobs, aggregate key metrics, and more. The best part? We've prepared CDK code to automate the cluster setup on AWS. So, let's get started!

Pre-Processing Log Data Offers Several Benefits

In part 1, we discussed how log pre-processing with Bacalhau provides several benefits. As a refresher, these were the main points:

Bandwidth Efficiency: Bacalhau cut the bandwidth for log transfers from 11GB to 800MB per host hourly – a ~93% reduction, maintaining data integrity and real-time metrics.

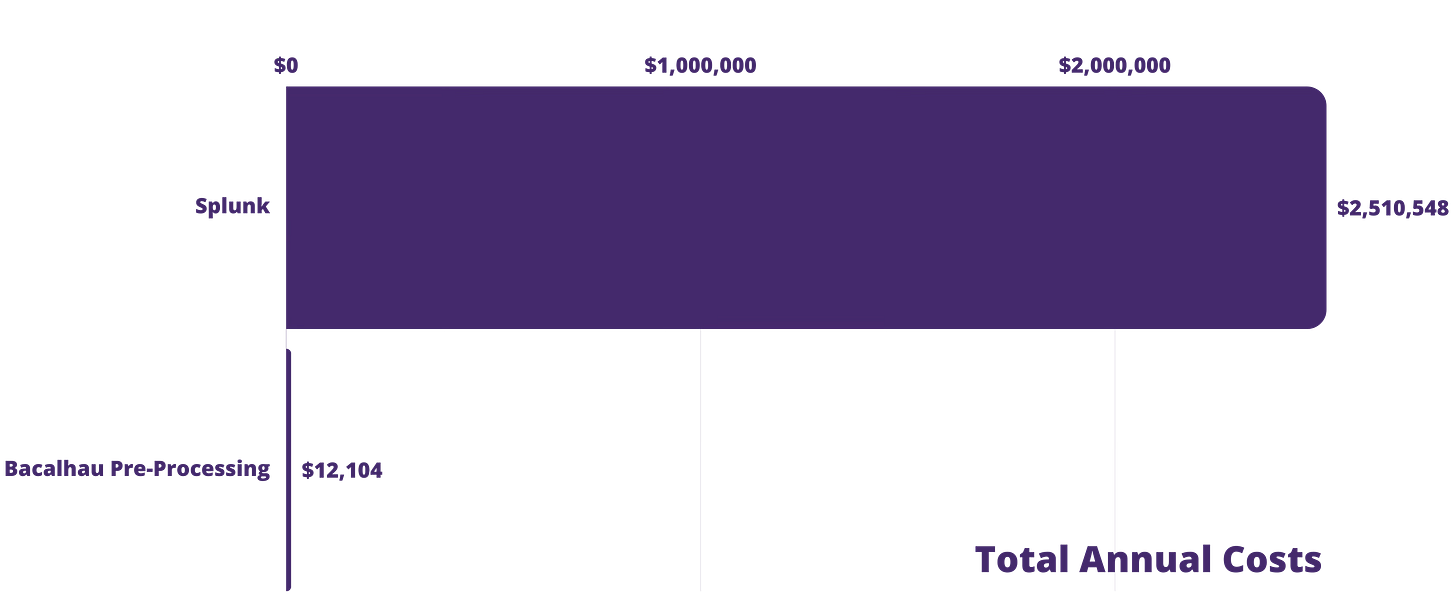

Cost Savings: Sending logs directly to a service like Splunk would cost roughly $2.5 million annually. Bacalhau’s approach? A mere $12k per year, a cost reduction of over 99%.

Real-time Insights: Using aggregated data, we crafted detailed dashboards for monitoring web services, tracking traffic shifts, and spotting suspicious users.

Added Perks: With Bacalhau, benefits span faster threat detection using Kinesis, intricate batch tasks with raw logs in S3, and long-term storage in Glacier. Plus, you can keep using your go-to log visualization and alert tools.
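The two headline percentages can be checked with a few lines of arithmetic. This is only a sanity check on the figures quoted above, assuming 1 GB is counted as 1000 MB; the function name is ours and not part of Bacalhau.

```python
def percent_reduction(before: float, after: float) -> float:
    """Return how much smaller `after` is than `before`, as a percentage."""
    return (before - after) / before * 100


# Figures quoted above; 1 GB is taken as 1000 MB (an assumption).
bandwidth_saved = percent_reduction(before=11_000, after=800)   # MB per host per hour
cost_saved = percent_reduction(before=2_500_000, after=12_000)  # USD per year

print(f"Bandwidth reduction: {bandwidth_saved:.1f}%")  # prints: Bandwidth reduction: 92.7%
print(f"Cost reduction: {cost_saved:.2f}%")            # prints: Cost reduction: 99.52%
```

So the "~93%" bandwidth figure is 92.7% rounded, and the cost figure clears 99% with some room to spare.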

In this part, we'll walk through how to implement it.

Overview

We'll go through four steps:

Setting up a sample cluster on AWS with Bacalhau agents installed on each host.

Installing your local Bacalhau client and connecting it to the deployed cluster.

Deploying a sample log generator.

Deploying a log agent that sends aggregated logs to OpenSearch every 10 seconds and compresses raw logs to S3 hourly.

Prerequisites

Bacalhau CLI installed. If you haven't yet, follow this guide.

A recent version of the AWS CDK CLI installed. You can find more info here.

An active AWS account (any other cloud provider works too, but the setup commands will be different).

In this example, your cluster will include:

A Bacalhau orchestrator EC2 instance

Three EC2 instances as web servers running Bacalhau agents

An S3 bucket for raw log storage

An OpenSearch cluster with a pre-configured dashboard and visualizations

Step 1 - Setting Up Your Cluster

Sample Cluster Deployment with CDK

First, clone the GitHub repository:

git clone https://github.com/bacalhau-project/examples.git

cd examples/log-orchestration/cdk

Now, install the required Node.js packages:

npm install

Bootstrap your AWS account if you haven't used AWS CDK on your account already:

cdk bootstrap

To deploy your stack without SSH access, run:

cdk deploy

Need SSH access to your hosts? Use this instead:

cdk deploy -c keyName=<Your_SSH_key_pair_name>

Note: If you don't have an SSH key pair, follow these steps.

Deployment will take a few minutes. Perfect time for a quick coffee break!

CDK Outputs

Once the stack is deployed, you'll receive these outputs:

OrchestratorPublicIp: Connect the Bacalhau CLI to this IP.

OpenSearchEndpoint: The endpoint for aggregated logs.

AccessLogBucket: The S3 bucket for raw logs.

OpenSearchDashboard: Access your OpenSearch dashboard here.

OpenSearchPasswordRetriever: Retrieve the OpenSearch master password with this command.

Accessing OpenSearch Dashboard

After your CDK stack is up and running, the OpenSearch dashboard URL will pop up in your console, courtesy of the CDK outputs. You'll hit a login page the first time you try to access the dashboard. No sweat, just use admin as the username.

To get your password, you don't have to hunt; CDK outputs include a handy command tailored for this. Just fire up your terminal and run:

aws secretsmanager get-secret-value --secret-id "<Secret ARN>" --query 'SecretString' --output text

Swap <Secret ARN> with the actual ARN displayed in your CDK outputs.

Logged in successfully? Fantastic, let's proceed!

Step 2 - Access Bacalhau Network

First, we'll set up Bacalhau locally on your machine. This is just one command:

curl -sL https://get.bacalhau.org/install.sh | bash

By default, the client will connect to the demo public network. To point your Bacalhau CLI at your private cluster, execute:

export BACALHAU_NODE_CLIENTAPI_HOST=<OrchestratorPublicIp>

Verify your setup with:

bacalhau node list

You should see three compute nodes labeled service=web-server along with the orchestrator node.

ID        TYPE       LABELS                                               CPU        MEMORY           DISK               GPU
QmUWYeTV  Compute    Architecture=amd64 Operating-System=linux            1.6 / 1.6  1.5 GB / 1.5 GB  78.3 GB / 78.3 GB  0 / 0
                     git-lfs=False name=web-server-1 service=web-server
QmVBdXFW  Compute    Architecture=amd64 Operating-System=linux            1.6 / 1.6  1.5 GB / 1.5 GB  78.3 GB / 78.3 GB  0 / 0
                     git-lfs=False name=web-server-3 service=web-server
QmWaRH4X  Requester  Architecture=amd64 Operating-System=linux
                     git-lfs=False
QmXVWfVT  Compute    Architecture=amd64 Operating-System=linux            1.6 / 1.6  1.5 GB / 1.5 GB  78.3 GB / 78.3 GB  0 / 0
                     git-lfs=False name=web-server-2 service=web-server

Step 3 - Deploy Log Generator

We've prepared a log-generator.yaml file for deploying a daemon job to simulate web access logs.

Name: LogGenerator
Type: daemon
Namespace: logging
Constraints:
  - Key: service
    Operator: ==
    Values:
      - WebService
Tasks:
  - Name: main
    Engine:
      Type: docker
      Params:
        Image: expanso/nginx-access-log-generator:1.0.0
        Parameters:
          - --rate
          - "10"
          - --output-log-file
          - /app/output/application.log
    InputSources:
      - Target: /app/output
        Source:
          Type: localDirectory
          Params:
            SourcePath: /data/log-orchestration/logs
            ReadWrite: true

What's Happening: The daemon job is deployed on all nodes with the label service=web-server. The job employs a Docker image that mimics Nginx access logs and stores them locally.
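To make the selection rule concrete, here is a small Python sketch of an equality constraint on node labels. It is our own illustration, not Bacalhau's scheduler code; the `matches` helper is hypothetical, and the labels are trimmed from the `bacalhau node list` output shown earlier.

```python
def matches(labels: dict[str, str], key: str, value: str) -> bool:
    """True when the node carries `key` with exactly `value` (the `==` operator)."""
    return labels.get(key) == value


# Labels taken from the node list output earlier, reduced to the relevant keys.
nodes = {
    "QmUWYeTV": {"name": "web-server-1", "service": "web-server"},
    "QmVBdXFW": {"name": "web-server-3", "service": "web-server"},
    "QmXVWfVT": {"name": "web-server-2", "service": "web-server"},
    "QmWaRH4X": {},  # orchestrator: carries no service label
}

selected = sorted(
    node_id for node_id, labels in nodes.items()
    if matches(labels, "service", "web-server")
)
print(selected)  # prints: ['QmUWYeTV', 'QmVBdXFW', 'QmXVWfVT']
```

The comparison is an exact string match, so the value under Constraints has to equal the label on your nodes character for character; if no executions appear, compare the two first.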

Deploy it like so:

bacalhau job run log-generator.yaml

Check the job's status:

bacalhau job executions <job_id>

You should see three executions, one on each of your three web servers, in the Running state:

CREATED   MODIFIED  ID          NODE ID   REV.  COMPUTE STATE  DESIRED STATE  COMMENT
12:43:31  12:43:31  e-d5433aec  QmUWYeTV  2     BidAccepted    Running
12:43:31  12:43:31  e-a7410744  QmVBdXFW  2     BidAccepted    Running
12:43:31  12:43:31  e-d6868bd2  QmXVWfVT  2     BidAccepted    Running

Step 4 - Deploy Logging Agent

Bacalhau’s versatility means that you can deploy any type of job, use any existing logging agent or implement your own. We'll use Logstash for this example.

Take a look at logstash.yaml:

Name: Logstash
Type: daemon
Namespace: logging
Constraints:
  - Key: service
    Operator: ==
    Values:
      - WebService
Tasks:
  - Name: main
    Engine:
      Type: docker
      Params:
        Image: expanso/nginx-access-log-agent:1.0.0
        EnvironmentVariables:
          - OPENSEARCH_ENDPOINT={{.OpenSearchEndpoint}}
          - S3_BUCKET={{.AccessLogBucket}}
          - AWS_REGION={{.AWSRegion}}
          - AGGREGATE_DURATION=10
          - S3_TIME_FILE=60
    Network:
      Type: Full
    InputSources:
      - Target: /app/logs
        Source:
          Type: localDirectory
          Params:
            SourcePath: /data/log-orchestration/logs
      - Target: /app/state
        Source:
          Type: localDirectory
          Params:
            SourcePath: /data/log-orchestration/state
            ReadWrite: true

The job spec has placeholders for OpenSearchEndpoint, AccessLogBucket, and AWSRegion. Bacalhau leverages Go’s text/template for dynamic job specs, allowing CLI flags or environment variables as inputs. More details here.
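To show what the substitution does to the spec, here is a rough Python stand-in for the simple `{{.Name}}` placeholders used above. It is not Go's text/template and covers none of its other features (pipelines, conditionals, functions); the `render` helper and the bucket name are made up for illustration.

```python
import re

# Matches placeholders of the form {{.Name}}, allowing optional inner spaces.
PLACEHOLDER = re.compile(r"\{\{\s*\.(\w+)\s*\}\}")


def render(spec: str, variables: dict[str, str]) -> str:
    """Replace each {{.Name}} with variables["Name"]; fail loudly if one is missing."""
    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            raise KeyError(f"no value supplied for template variable {name!r}")
        return variables[name]

    return PLACEHOLDER.sub(substitute, spec)


line = "- S3_BUCKET={{.AccessLogBucket}}"
print(render(line, {"AccessLogBucket": "my-example-bucket"}))
# prints: - S3_BUCKET=my-example-bucket
```

Raising on a missing variable is a choice made for this sketch, so that a forgotten value is noticed before anything is deployed.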

Deploy your logging agent:

bacalhau job run logstash.yaml \

-V "OpenSearchEndpoint=<VALUE>" \

-V "AccessLogBucket=<VALUE>" \

-V "AWSRegion=<VALUE>"

Don't Forget: Update the variables according to your CDK outputs.
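If you would rather not copy the values by hand, the sketch below builds the -V arguments from the CDK outputs. It assumes you deployed with `cdk deploy --outputs-file outputs.json`, which writes a JSON object keyed by stack name, and that the keys in that file match the output names listed earlier. The stack name, file name, and sample values here are hypothetical; the region is passed in separately because it is not one of the listed outputs.

```python
import json

# Output names taken from the CDK outputs listed earlier in this post.
WANTED = ("OpenSearchEndpoint", "AccessLogBucket")


def template_flags(outputs_json: str, region: str) -> list[str]:
    """Build the -V arguments for `bacalhau job run` from CDK outputs JSON."""
    stacks = json.loads(outputs_json)  # {"<stack name>": {"<output>": "<value>"}}
    merged = {key: value for outputs in stacks.values() for key, value in outputs.items()}
    flags: list[str] = []
    for name in WANTED:
        flags += ["-V", f"{name}={merged[name]}"]  # KeyError if an output is absent
    flags += ["-V", f"AWSRegion={region}"]
    return flags


# Hypothetical file contents; real values come from your own deployment.
sample = json.dumps({
    "ExampleStack": {
        "OpenSearchEndpoint": "search.example.com",
        "AccessLogBucket": "example-bucket",
    }
})
print(" ".join(template_flags(sample, "us-east-1")))
# prints: -V OpenSearchEndpoint=search.example.com -V AccessLogBucket=example-bucket -V AWSRegion=us-east-1
```

In practice you would read the JSON from the outputs file and append the resulting list to `["bacalhau", "job", "run", "logstash.yaml"]` before handing it to `subprocess.run`.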

Logstash might need a few moments to get up and running. To keep tabs on its start-up progress, you can use:

bacalhau logs --follow <job_id>

How the Logging Agent Works

In our setup, we're using the expanso/nginx-access-log-agent Docker image. Here's a quick rundown of what you get out of the box with this choice:

Raw Logs to S3: Every hour, compressed raw logs are sent to your specified S3 bucket. This is great for archival and deep-dive analysis.

Real-time Metrics to OpenSearch: The agent pushes aggregated metrics to OpenSearch every AGGREGATE_DURATION seconds (e.g., every 10 seconds).
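One common way to get fixed-interval aggregation like this is to assign every event to the window that contains its timestamp. The Python sketch below illustrates that idea only; it is not the agent's Logstash implementation, and the timestamps are invented.

```python
from collections import Counter

AGGREGATE_DURATION = 10  # seconds, matching the value set in logstash.yaml


def window_start(timestamp: float, duration: int = AGGREGATE_DURATION) -> int:
    """Return the start (in epoch seconds) of the window containing `timestamp`."""
    return int(timestamp // duration) * duration


# Hypothetical request timestamps in epoch seconds.
requests = [1000.2, 1003.9, 1009.99, 1010.0, 1017.5, 1025.1]

# Count how many requests fall into each 10-second window.
per_window = Counter(window_start(t) for t in requests)
print(sorted(per_window.items()))
# prints: [(1000, 3), (1010, 2), (1020, 1)]
```

Six raw events collapse into three records here, which is where the bandwidth savings come from: the number of records sent depends on the window length, not on the request rate.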

You can learn more about our Logstash pipeline configuration and aggregation implementation here. These are a subset of the aggregated metrics published to OpenSearch:

Request Counts: Grouped by HTTP status codes.

Top IPs: Top 10 source IPs by request count.

Geo Sources: Top 10 geographic locations by request count.

User Agents: Top 10 user agents by request count.

Popular APIs & Pages: Top 10 most-hit APIs and pages.

Gone Pages: Top 10 requested but non-existent pages.

Unauthorized IPs: Top 10 IPs failing authentication.

Throttled IPs: Top 10 IPs getting rate-limited.

Data Volume: Total data transmitted in bytes.

This gives you real-time insights into traffic patterns, performance issues, and potential security risks. All of this is visible through your OpenSearch dashboards. After a while, your OpenSearch dashboard will be bursting with insights. Metrics on user behavior, traffic hotspots, error rates, you name it!
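For a feel of what such aggregation involves, here is a minimal Python sketch that computes a few of the metrics above from raw access-log lines. It is an illustration only, not the Logstash pipeline shipped in the image. It assumes the common Nginx access-log layout and treats HTTP 404 as a "gone" page; the sample lines and field names are made up.

```python
import re
from collections import Counter

# Prefix of the common/combined Nginx access-log format: IP, request, status, bytes.
LINE = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[[^\]]+\] "(?P<method>\S+) (?P<path>\S+)[^"]*" '
    r'(?P<status>\d{3}) (?P<bytes>\d+|-)'
)


def aggregate(lines, top_n=10):
    """Summarise one batch of access-log lines into a small metrics dict."""
    status, ips, gone, total_bytes = Counter(), Counter(), Counter(), 0
    for line in lines:
        m = LINE.match(line)
        if not m:
            continue  # skip lines that do not look like access-log entries
        status[m["status"]] += 1
        ips[m["ip"]] += 1
        if m["status"] == "404":  # assumption: "gone" means HTTP 404
            gone[m["path"]] += 1
        if m["bytes"] != "-":
            total_bytes += int(m["bytes"])
    return {
        "request_counts": dict(status),
        "top_ips": ips.most_common(top_n),
        "gone_pages": gone.most_common(top_n),
        "data_volume_bytes": total_bytes,
    }


sample = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 1024',
    '203.0.113.7 - - [10/Oct/2023:13:55:37 +0000] "GET /missing HTTP/1.1" 404 153',
    '198.51.100.2 - - [10/Oct/2023:13:55:38 +0000] "POST /api/login HTTP/1.1" 401 0',
]
print(aggregate(sample))
```

For the three sample lines, the summary reports one request per status code, 203.0.113.7 as the busiest IP with two requests, /missing as the only gone page, and 1177 bytes transferred.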

Conclusion

In an era where data is everything, efficient log management is crucial for any organization leveraging cloud computing. The methods shown here, enabled by the open-source project Bacalhau, represent significant advancements in handling vast volumes of log data. By embracing a distributed log orchestration approach, Bacalhau addresses the core challenges faced by traditional log vending methods – notably, the issues of volume, real-time processing needs, and cost efficiency.

Bacalhau's architecture, which intelligently combines daemon, service, batch, and ops jobs, not only streamlines the process of log management but also significantly reduces costs, as evidenced by our benchmarking results. The remarkable cost savings of over 99% compared to direct logging to services like Splunk, coupled with substantial bandwidth efficiency and the facilitation of real-time insights, make Bacalhau an exemplary solution in the realm of log management.

Moreover, the adaptability of Bacalhau across various platforms, be it AWS, Google Cloud, Azure, or on-premises systems, underscores its versatility and global applicability. Its design caters to the needs of a wide range of industries, ensuring that log management is both effective and scalable, regardless of the size or nature of the enterprise.

In conclusion, Bacalhau offers a robust, scalable, and cost-effective solution for log management. Our hope is that it can be leveraged to turn what was once a challenging and costly endeavor into an efficient, manageable process, paving the way for smarter, more efficient data handling in the cloud.

Commercial Support

While Bacalhau is Open Source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open source Bacalhau and commercially supported Bacalhau on our website. If you would like to use our pre-built binaries and receive commercial support, please contact us!

What’s Next?

Keep an eye out for part 3. We are committed to delivering groundbreaking updates and improvements, and we're looking for help in several areas. If you're interested, there are several ways to contribute, and you can always reach out to us via Slack or Email.

Bacalhau is available as Open Source Software and the public GitHub repo can be found here. If you like our software, please give us a star ⭐

If you need professional support from experts, you can always reach out to us via Slack or Email.