Save $2.5M Per Year by Managing Logs the AWS Way

How Bacalhau can save cost with data preprocessing

Introduction

AWS operates one of the largest deployments of compute services in the world. Like any organization of significant size, it has to extract as much signal as possible from its services, often in the form of logs, in order to maintain quality of service and reliability. For four years, I served as the lead architect for AWS Vended Logs, a service designed to wrangle logs from various AWS services and funnel them to a destination of the customer's choosing. We had to handle petabytes of logs generated every hour across Amazon's massive global infrastructure supporting millions of customers.

Our users had diverse needs: real-time access to logs, long-term storage for compliance, and efficient storage for large-scale batch processing, all without burning a hole in their pockets. The traditional approach, a centralized data lake, would have been prohibitively expensive, less efficient, and a nightmare to operate. So, we went distributed. We processed logs right where they were generated and orchestrated multiple delivery streams tailored to specific needs, whether that was real-time access, optimized storage for batch processing, or long-term retention.

Thinking of achieving this level of efficiency and cost-saving outside of AWS? With Bacalhau, you can!

Challenges in Traditional Log Vending

Organizations today juggle the need for detailed logs with the challenges of managing massive data volumes. Platforms like Splunk, Datadog, and others offer rich features, but costs spike as data intake grows. Key log management challenges across industries include the following.

Volume vs. Value: Log ingestion involves write-intensive operations, but only a minor fraction of these logs are accessed or deemed valuable.

Real-time Needs: For real-time applications such as threat detection and health checks, only specific metrics are pivotal. Real-time metric solutions tend to be costlier and harder to oversee. Hence, early aggregation and filtering of these metrics are essential for both cost savings and operational scalability.

Operational Insights: At times, operators require access to application and server logs for immediate troubleshooting. This access should be facilitated without connecting directly to the numerous production servers producing these logs.

Archival Needs: Preserving raw logs is crucial for compliance, audits, and various analytical and operational improvement processes.

To address these varied needs, especially in real-time scenarios, many organizations resort to streaming all logs to a centralized platform or data warehouse. While this method can work, it often leads to spiraling costs and slower insights.

Solution: Distributed Log Orchestration

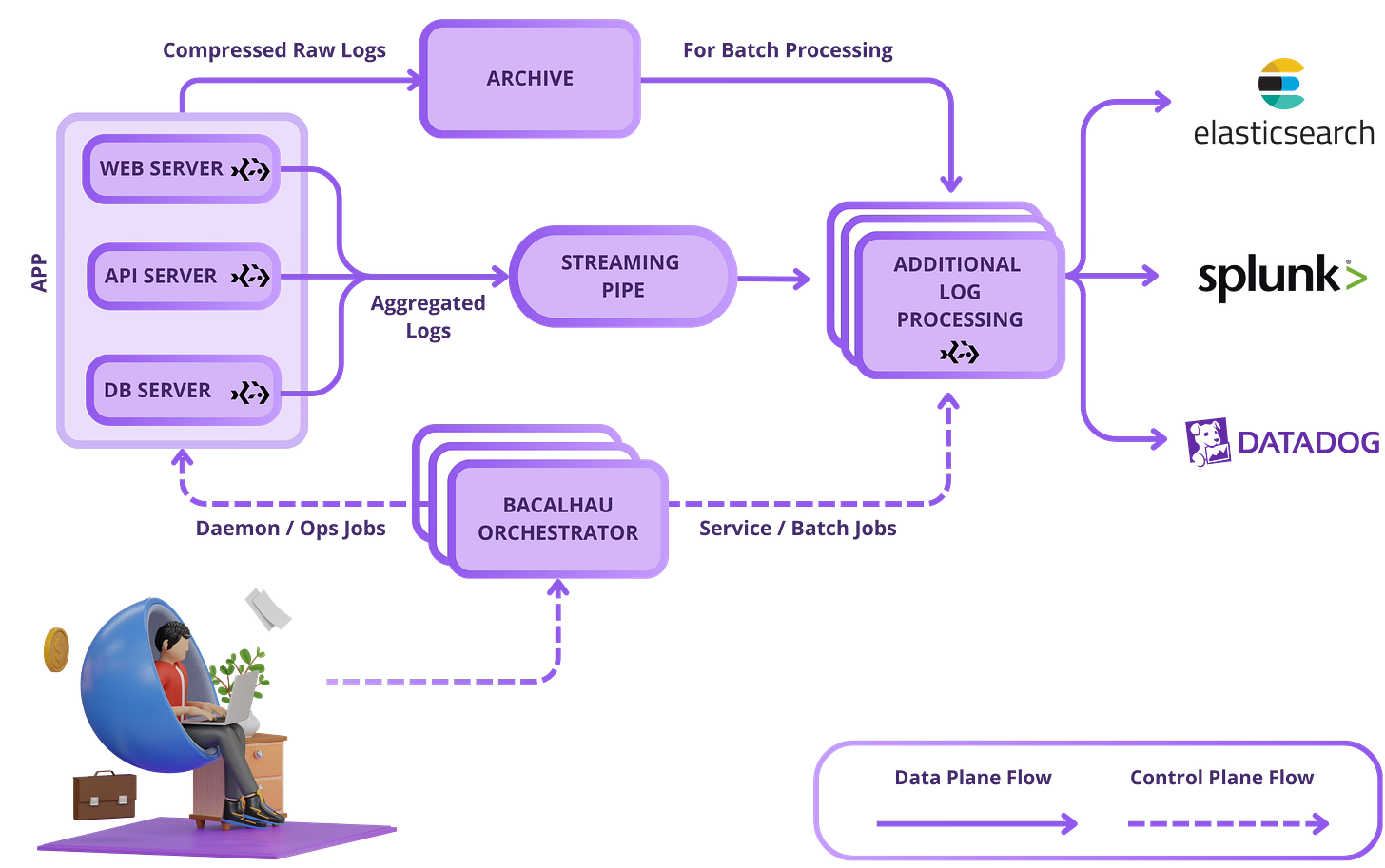

Bacalhau is a distributed compute framework that offers efficient log processing solutions, enhancing current platforms. Its strength lies in its adaptable job orchestration. Let's explore its multi-job approach.

Daemon Jobs:

Purpose: Bacalhau agents run continuously on the nodes doing the work (e.g., website, database, or middleware hosts), auto-deployed by the orchestrator.

Function: These jobs process logs at the source, aggregating and compressing them. They then send the aggregated logs periodically to platforms like Kafka or Kinesis, or to suitable logging services. Every hour, raw logs intended for archiving or batch processing are compressed and moved to places like S3.
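As a rough illustration of what source-side aggregation and compression can look like, here is a minimal Python sketch. It is not Bacalhau's implementation or the pipeline used in the benchmark: the Common Log Format pattern, the per-minute grouping, and the function names `aggregate` and `compress_raw` are all assumptions made for this example, and the upload steps to Kafka, Kinesis, or S3 are left out.

```python
import gzip
import re
from collections import Counter

# Assumed pattern for a Common Log Format access line; adjust for your servers.
LOG_PATTERN = re.compile(
    r'^(?P<ip>\S+) \S+ \S+ \[(?P<ts>[^\]]+)\] '
    r'"(?P<method>\S+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) (?P<size>\S+)'
)


def aggregate(lines):
    """Count requests per (minute, status) so only small summaries leave the host."""
    counts = Counter()
    for line in lines:
        match = LOG_PATTERN.match(line)
        if match is None:
            continue  # skip lines that do not parse
        minute = match.group("ts")[:17]  # e.g. "10/Oct/2023:13:55"
        counts[(minute, match.group("status"))] += 1
    return counts


def compress_raw(lines):
    """Gzip the raw lines for hourly archival (the upload itself is not shown)."""
    return gzip.compress("\n".join(lines).encode("utf-8"))


sample = [
    '203.0.113.7 - - [10/Oct/2023:13:55:36 +0000] "GET /index.html HTTP/1.1" 200 2326',
    '203.0.113.7 - - [10/Oct/2023:13:55:40 +0000] "GET /about.html HTTP/1.1" 200 1184',
    '198.51.100.2 - - [10/Oct/2023:13:56:01 +0000] "POST /login HTTP/1.1" 401 512',
]
summary = aggregate(sample)    # small summary for the real-time stream
archive = compress_raw(sample)  # compressed raw logs for archival
```

The summary is what would travel to the real-time platform, while the compressed archive keeps the raw lines available for compliance and batch work.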

Service Jobs:

Purpose: Handle continuous intermediate processing like log aggregation, basic statistics, deduplication, and issue detection. They run on a specified number of nodes, with Bacalhau ensuring their optimal performance and health.

Function: Continuous log processing and integration with logging services for instant insights, e.g., Splunk.
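To make the intermediate processing more concrete, the following Python sketch shows one possible way to deduplicate records and compute basic statistics. It is only an illustration under assumptions: the bounded-window `Deduplicator` class, the `basic_stats` helper, and the use of request latencies as the measured value are hypothetical and do not come from Bacalhau or the benchmark setup.

```python
import hashlib
from collections import OrderedDict
from statistics import mean


class Deduplicator:
    """Drop records already seen within a bounded window of recent records."""

    def __init__(self, max_entries=10_000):
        self.max_entries = max_entries
        self._seen = OrderedDict()

    def is_new(self, record):
        key = hashlib.sha256(record.encode("utf-8")).hexdigest()
        if key in self._seen:
            return False
        self._seen[key] = True
        if len(self._seen) > self.max_entries:
            self._seen.popitem(last=False)  # evict the oldest key
        return True


def basic_stats(values):
    """Count, mean, and max over a non-empty batch of numeric values."""
    return {"count": len(values), "mean": mean(values), "max": max(values)}


dedup = Deduplicator(max_entries=2)
records = ["req-1", "req-1", "req-2"]
unique = [r for r in records if dedup.is_new(r)]  # the repeated record is dropped
stats = basic_stats([10, 20, 30])                 # hypothetical latencies in ms
```

The window is bounded so memory stays constant on a long-running job, at the cost of missing duplicates that arrive further apart than `max_entries` records.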

Batch Jobs:

Purpose: Executed on-demand on a designated number of nodes, with Bacalhau managing node selection, monitoring and failover.

Function: Operates intermittently on data in S3, focusing on in-depth investigations without moving the data, turning nodes into a distributed data warehouse.

Ops Jobs:

Purpose: Similar to batch jobs but spanning all nodes matching job selection criteria.

Function: Ideal for urgent investigations where time is critical. End users are granted limited, yet direct access to logs on host machines, avoiding any S3 transfer delays and ensuring rapid insights.
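The kind of query an ops job might run against logs on a host can be as simple as a capped search. The Python sketch below is a hypothetical example, not a Bacalhau API: the function name `search_local_logs`, the substring match, and the result cap (one way to keep access limited) are assumptions for illustration.

```python
from itertools import islice


def search_local_logs(lines, needle, limit=100):
    """Return at most `limit` lines containing `needle`, scanning lazily."""
    matches = (line.rstrip("\n") for line in lines if needle in line)
    return list(islice(matches, limit))


# In practice `lines` could be an open file handle on the host;
# a small in-memory list is used here so the sketch runs anywhere.
local_lines = [
    "GET /checkout 500\n",
    "GET /index.html 200\n",
    "POST /checkout 500\n",
]
errors = search_local_logs(local_lines, " 500", limit=1)
```

Because the scan is lazy, it stops reading as soon as the cap is reached, which keeps the query cheap on busy production hosts.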

Bacalhau ensures log management is not only efficient but also responsive to changing business needs.

Bacalhau's Global Edge

Bacalhau is designed for global reach and reliability. Here's a snapshot of its worldwide log solution.

Local Log Transfers: Daemon jobs swiftly send logs to nearby storage such as regional S3 or MinIO. These jobs stay active even without a connection to Bacalhau, safeguarding data during outages.

Regional Log Handling: Autonomous service jobs in each region can channel logs to regional logging services for localized insights, to a global logging platform for an overarching perspective, or to a combination of both.

Smart Batch Operations: Bacalhau guides batch jobs to nearby data sources, cutting network costs and streamlining global tasks.

Ops Job Flexibility: Based on permissions, operators can target specific hosts, regions, or the entire network for queries.

With Bacalhau, global log management is both efficient and user-friendly, marrying the perks of decentralization with centralized clarity.

Benchmarking

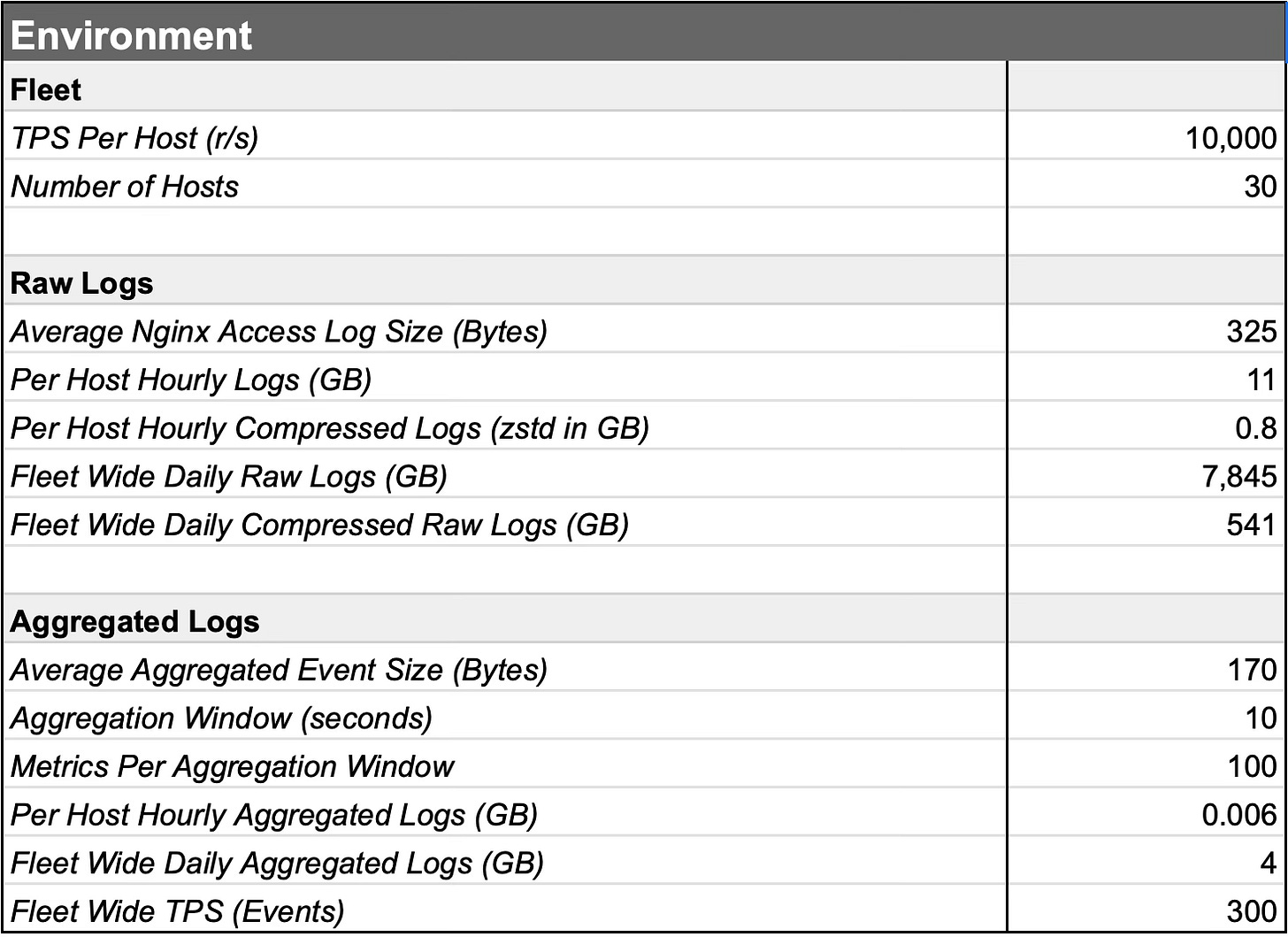

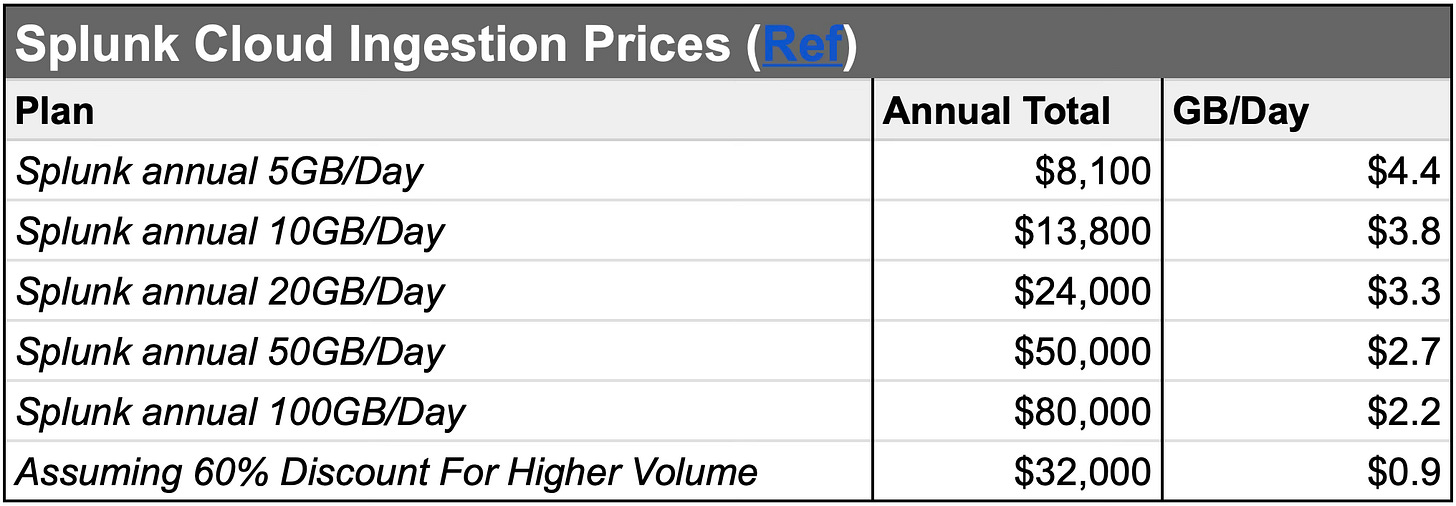

Below, we compare the cost of two scenarios. In the first, data is uploaded directly to a log management service, like Splunk, and processed there. In the second, data is preprocessed on-device, aggregated and reduced data is streamed to Splunk, and raw compressed logs are published to S3. Changing the way you manage your logs can result in significant cost savings.


Log Context: We benchmarked using web server access logs, which are commonly used to monitor server health, user activity, and security. Our goal was to mirror real-world situations for wide applicability.

Setup and Capacity: We simulated 30 web servers across multiple zones, each handling about 10k TPS (Transactions Per Second) of web requests. This provides a solid foundation for gauging Bacalhau's log processing prowess.

Platform Considerations: Our tests ran on AWS, but given Bacalhau's flexibility we expect similar results on Google Cloud, Azure, or on-premises systems.

Caveat: Your mileage may vary depending on the specific types of logs, the volume, and your existing infrastructure. You can use this calculator to get an estimate of your cost savings.

Main Takeaways

Bandwidth Efficiency: Bacalhau cut the bandwidth for log transfers from 11GB to 800MB per host per hour, a ~93% reduction, while maintaining data integrity and real-time metrics.

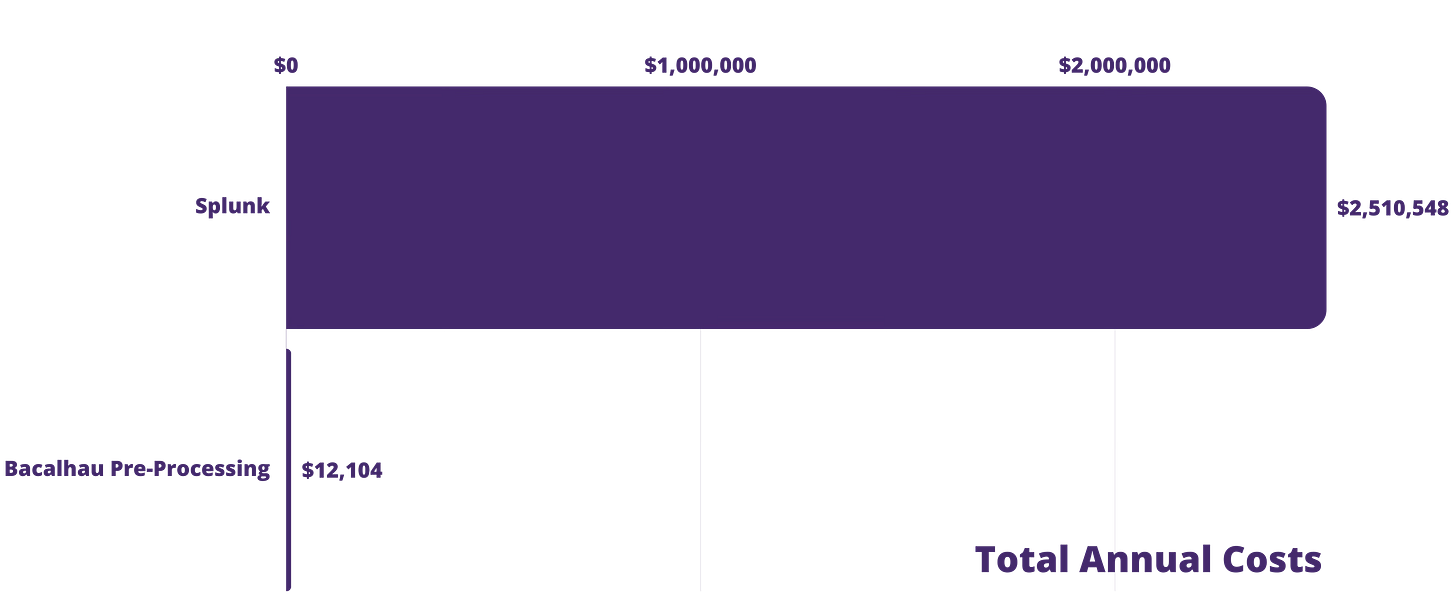

Cost Savings: Sending logs directly to a service like Splunk would cost roughly $2.5 million annually. Bacalhau's approach? A mere $12k per year, a cost reduction of over 99%.

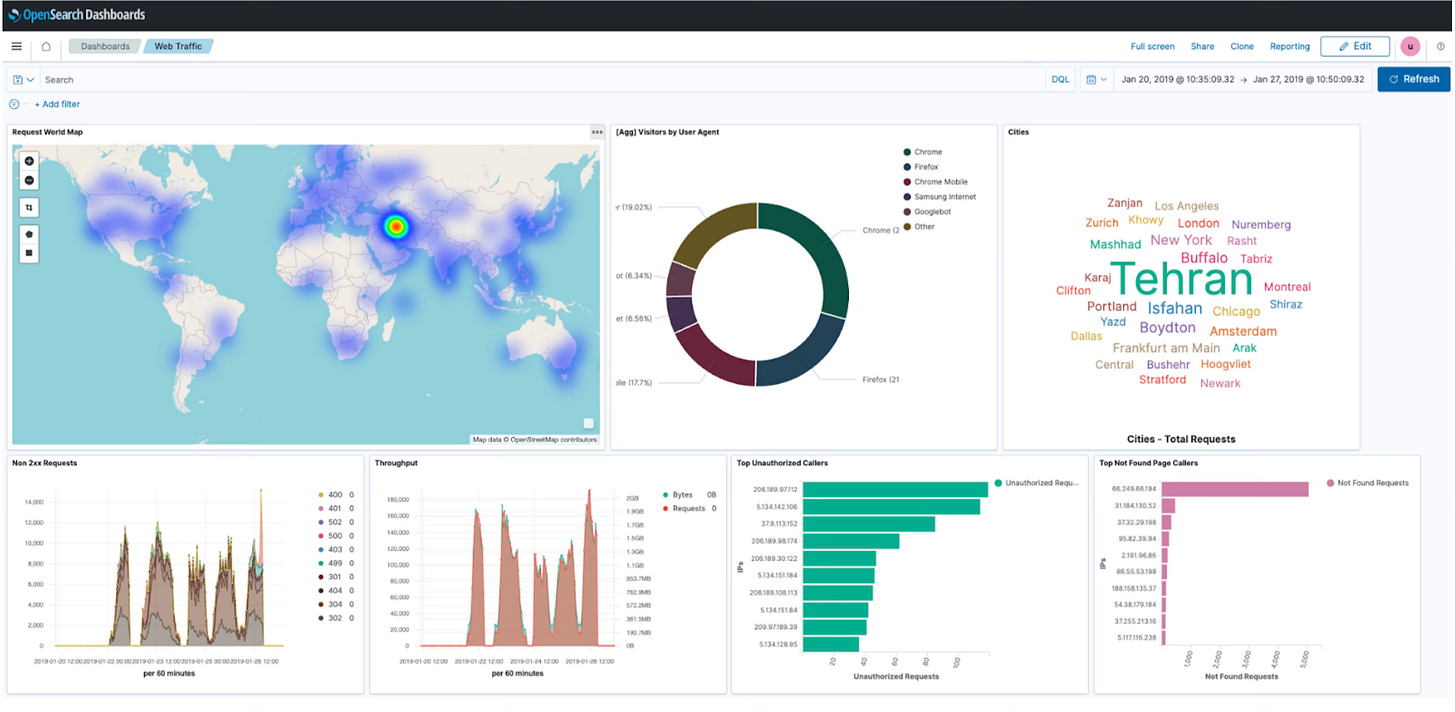
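As a quick sanity check, the percentages can be recomputed from the figures quoted in the takeaways. The short Python sketch below uses only those numbers; the helper name `reduction_pct` is ours, and 1 GB is taken as 1000 MB for simplicity.

```python
def reduction_pct(before, after):
    """Percentage reduction going from `before` to `after`."""
    return (before - after) / before * 100


bandwidth_cut = reduction_pct(11_000, 800)   # MB per host per hour
cost_cut = reduction_pct(2_500_000, 12_000)  # USD per year

print(f"bandwidth reduction: {bandwidth_cut:.1f}%")  # prints 92.7%
print(f"cost reduction: {cost_cut:.1f}%")            # prints 99.5%
```

Both results line up with the rounded figures of roughly 93% and over 99%.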

Real-time Insights: Using aggregated data, we crafted detailed dashboards for monitoring web services, tracking traffic shifts, and spotting suspicious users.

Added Perks: With Bacalhau, benefits span faster threat detection using Kinesis locality, intricate batch tasks with raw logs in S3, and long-term storage in Glacier. Plus, you can keep using your go-to log visualization and alert tools.

Costs Summary

With Bacalhau, setting up a robust log management system is pretty much a walk in the park. But, we're just scratching the surface here. The framework's adaptability and resilience make it a must-have tool for any enterprise aiming to keep their log data in check.

By decentralizing the log processing system, Bacalhau not only vastly reduces operational costs – as evidenced by our benchmarking results – but also ensures real-time data processing and compliance with archival needs. Its seamless integration across various cloud platforms, including AWS, Azure, and Google Cloud, demonstrates our commitment to versatile, cross-platform solutions.

Commercial Support

While Bacalhau is Open Source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!

What’s Next?

Keep an eye out for our code implementation example in part 2 and our deep dive into historic log analysis with Bacalhau batch jobs in part 3. In part 4, find out how ops jobs are uniquely designed for straightforward real-time analysis. You can learn more about our Logstash pipeline configuration and aggregation implementation here.

And stay tuned - we've got a bunch of new content rolling out soon, including step-by-step tutorials and code snippets to jump-start your own log management adventure with Bacalhau. Get ready to level up and find it here.

We are committed to delivering groundbreaking updates and improvements, and we're looking for help in several areas. If you're interested, there are several ways to contribute, and you can always reach out to us via Slack or Email.

Bacalhau is available as Open Source Software, and you can download it for free. The public GitHub repo can be found here.

If you like our software, please give us a star ⭐
