Bacalhau 1.0: Unlocking The Potential of Private Data

New simple job and data moderation features in Bacalhau 1.0 unlock new data sharing, federated learning and compute islands using private data.

This post is based on a talk I gave at CoD Summit^3 in Boston, May 2023.

Bacalhau powers data-local computation: we send code to run analysis where the data is rather than moving the data to the code. With data volumes growing 45% faster than network bandwidth and 57% of data living outside the cloud or traditional data centers, moving data is too slow and expensive for any organisation operating at scale.

There is another good reason for keeping data where it is: control. Whether it’s through mandated regulation like the Health Insurance Portability and Accountability Act (HIPAA) or the General Data Protection Regulation (GDPR), or more local protection over sensitive financials or company secrets, nearly 100% of data is under some form of governance. Moving data to computation takes it out of the secure, safe domain where it normally lives and increases the risk that it is misused.

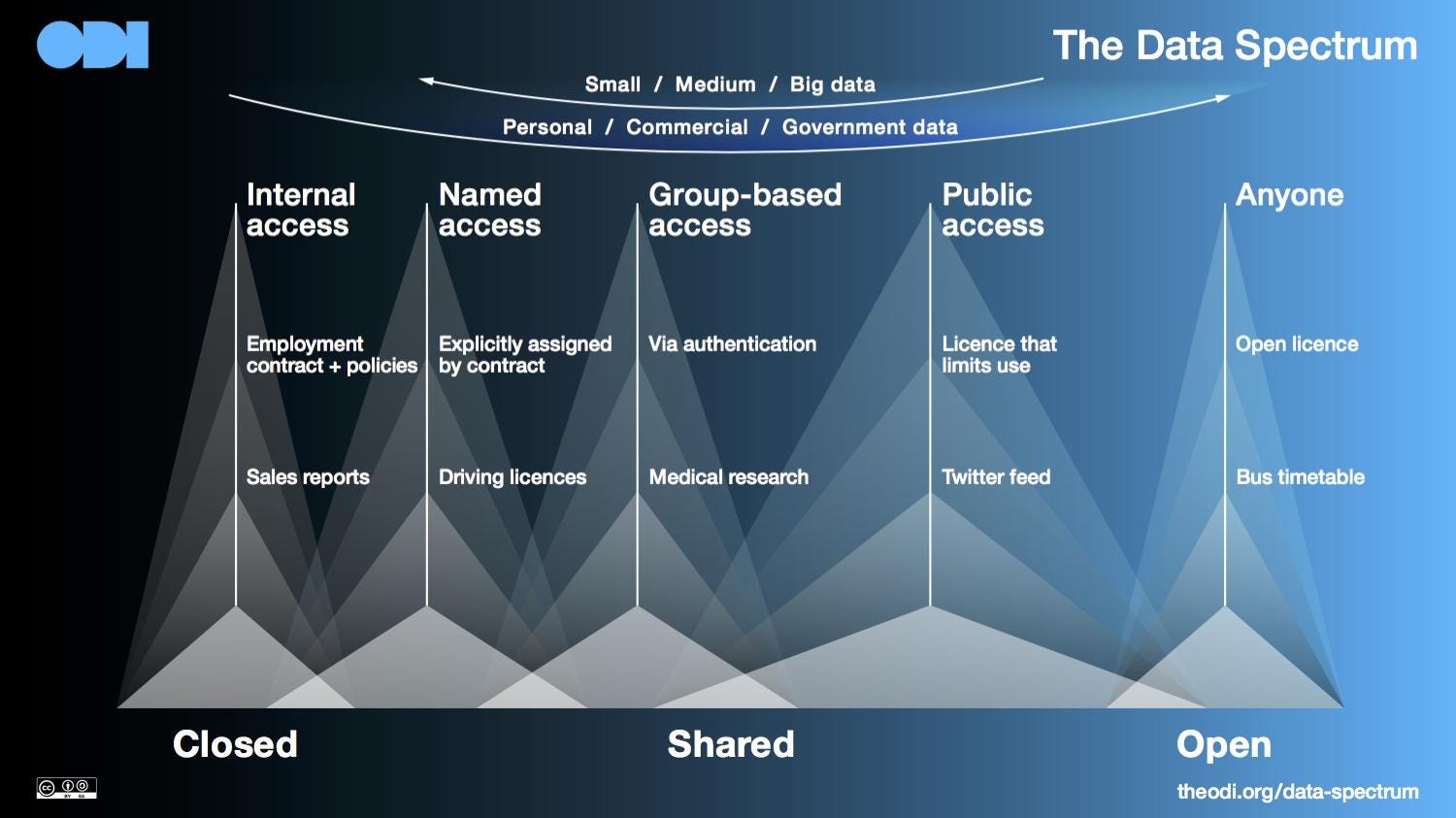

With nearly US$250 billion of governance fines worldwide since 2008, it’s no surprise most organisations fear data sharing, and as a result, 68% of enterprise data goes unexploited. In fact, most controlled data can in principle be shared and used for more effective decision-making – but only with the right people and for the right purpose.

Data sharing needs technical enforcement

Most organisations try to answer this need with draconian data sharing agreements or contracts. These agreements are expensive and time-consuming to set up – it’s common for enterprises like national governments or financial institutions to spend many months working through data governance to even share data between internal teams.

What’s worse is these agreements don’t work – most data sharing agreements are completely unenforceable and only act as a false sense of security. Once data crosses a trust boundary, it’s only soft mechanisms (e.g. trust in people to honour agreements) that prevent misuse. What people actually do with shared data is invisible and difficult to police.

“Having a contract or agreement between data providers and data users has frequently been shown to be insufficient.

In the Cambridge Analytica scandal, the contract terms were completely ignored and personal data was misused.

The lack of any hard, technical evidence may deprive courts of useful information and has made it difficult for regulators, politicians, journalists and the general public to understand what happened.”

– Putting the trust in data trusts, Register Dynamics, 2019

Clearly, what is needed is a new approach to reusing data across trust boundaries: one where analysts get simple, controlled access to data without data owners being at risk of regulatory fines and damaging headlines.

Bacalhau makes data sharing visible and auditable

At Bacalhau, we believe data-local computation is the answer to data governance woes. By keeping data in place and allowing authorised, audited, and controlled computation over it, more data can be used with less risk of misuse.

What’s more, because Bacalhau is a distributed computation platform, it doesn’t require moving data into a central store. Data can stay wherever in the organisation it should live, avoiding difficult organisational change and without any removal of control from data owners.

Today, we’re proud to announce new job and data moderation features as part of Bacalhau 1.0. Now, using Bacalhau, data owners can control the who, what, where, why, and how of computation over their private data.

Bacalhau moderates both code and outputs

Bacalhau takes a two-step approach to job moderation. Firstly, data owners get an opportunity to check that what the job is going to do complies with their policy. This pre-moderation stage happens before a job starts running and allows moderators to approve or reject the computation based on what data is going to be used, who is requesting the job, and what code will be executed against it.

Whilst humans are always in control, not every decision needs to be made manually. The pre-moderation process is highly flexible and can be as automated as desired. Data owners can set policies that inspect deeply what computation will be run, control different policies for different people, and call out to sophisticated algorithms that analyse safety and risk. When a job is not fit for automatic approval, the decision can fall back to a human for the final say.
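To make the shape of such a policy concrete, here is a minimal sketch in Python. It is not Bacalhau's API: the `JobRequest` fields, the `pre_moderate` function, and the example allow-lists are all hypothetical names chosen for illustration. It shows the three outcomes described above: automatic approval, automatic rejection, and fallback to a human.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    APPROVE = "approve"
    REJECT = "reject"
    ESCALATE = "escalate"  # hand the decision to a human moderator


@dataclass(frozen=True)
class JobRequest:
    # Hypothetical view of a job: who is asking, what code, which data.
    requester: str
    image: str
    datasets: tuple[str, ...]


# Illustrative policy data a data owner might maintain (assumed values).
TRUSTED_REQUESTERS = {"alice@stats.example"}
APPROVED_IMAGES = {"registry.example/summary-stats:1.2"}
SHAREABLE_DATASETS = {"survey-2022", "survey-2023"}


def pre_moderate(job: JobRequest) -> Decision:
    # Hard rule: never run against data outside the shareable set.
    if not set(job.datasets) <= SHAREABLE_DATASETS:
        return Decision.REJECT
    # Known requester running known code: approve automatically.
    if job.requester in TRUSTED_REQUESTERS and job.image in APPROVED_IMAGES:
        return Decision.APPROVE
    # Anything else is not fit for automatic approval.
    return Decision.ESCALATE
```

A real policy would likely inspect the code far more deeply than an image allow-list, but the control flow stays the same: cheap hard rules first, automatic approval for well-understood cases, and a human for everything else.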

On approval, Bacalhau will send the job to an appropriate executor where it only has access to the requested data and is securely isolated from the host system. Bacalhau applies resource limits on the job so processing power and memory usage are kept under control.

Whilst pre-moderation provides a sensible first line of trust, we know that deciding what a computer program will do without running it can be a difficult problem in general and one which requires technical skills. We’ve learned from the UK’s Office for National Statistics and other controlled research environments that have been safely allowing controlled access to data for decades and borrowed their practices for use in the digital domain. So as well as pre-execution control, Bacalhau allows post-execution moderation of results before they’re released to the job submitter.

When Bacalhau finishes a computation, it saves the results to a private pre-release area. Moderators are then able to examine the results in the context of the job – are these results what this job was expected to produce? If the moderator deems the content appropriate to share, the results can be downloaded. What’s more, the private storage area itself stays locked down: users never access it directly, and instead stream only the results for their own job via Bacalhau’s download feature.

As with pre-moderation, a full suite of sophisticated analysis can be carried out on the results. Using Amplify technology, data owners can automatically detect personally identifiable information (PII), summarise tabular data such as CSVs, and analyse content in images and video clips. Generated metadata can either be used to automatically release results or to provide valuable input into human decision-making.
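As a rough illustration of how generated metadata can drive a release decision, the sketch below summarises a CSV result and flags cells that look like email addresses. It is not Amplify and does not use any Bacalhau interface: `summarise_csv`, `post_moderate`, and the single email regex are assumptions made for the example, and real PII detection needs far more than one pattern.

```python
import csv
import io
import re

# Deliberately simple pattern: one kind of PII only, for illustration.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")


def summarise_csv(text: str) -> dict:
    """Build metadata about a CSV result: columns, row count, suspect cells."""
    rows = list(csv.reader(io.StringIO(text)))
    header, body = (rows[0], rows[1:]) if rows else ([], [])
    pii_cells = sum(1 for row in body for cell in row if EMAIL.search(cell))
    return {"columns": header, "row_count": len(body), "pii_cells": pii_cells}


def post_moderate(text: str) -> str:
    """Release clean results automatically; hold anything suspect for a human."""
    meta = summarise_csv(text)
    return "release" if meta["pii_cells"] == 0 else "hold_for_review"
```

An aggregate table of counts per region would pass straight through, while a result that echoes back a column of email addresses would be held in the pre-release area for a moderator to inspect.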

Moderation unlocks new federated learning

Computing over data separated by trust boundaries unlocks lots of data sharing for which there is no safe technical solution today. Organisations that hold data which could provide mutual value when shared more widely can now apply Bacalhau job moderation and open up access to their data without complex data governance.

For example, a university could make more of its data available to citizen scientists or external researchers, a government department could allow another department to analyse its data, or one team in a highly regulated financial institution could allow another team to derive insight from their data. In all of these cases, it’s important that the raw data isn’t released to lower trust users. Bacalhau ensures users get their analysis and nothing more.

The same model of distributed, moderated compute also unlocks federated learning between actors in different organisations. Using Bacalhau, independent organisations can derive insight from their combined data without it ever needing to be shared. Using federated learning techniques, data scientists can now train a machine learning or AI model on datasets held by many independent or even competing organisations. Those organisations keep control of their data throughout and can see exactly what is done with it.

For example, a central government body responsible for setting wide policy could draw on the data held locally in devolved organisations. Similarly, an industry body such as an insurance regulator could train a model by submitting federated learning Bacalhau jobs to all of its member insurers.

If the data were to be pooled in one place, there would be a strong economic incentive to sell or misuse the valuable aggregate data. With the data kept in place, each individual insurer can be confident their data has only been used for the agreed, mutually beneficial purpose.
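For readers new to federated learning, the following is a minimal sketch of federated averaging on a toy linear model, written in plain Python. It is a generic illustration of the technique, not Bacalhau code: in a real deployment each `local_update` call would correspond to a moderated job running inside one organisation, and the function names, learning rate, and round counts here are assumptions.

```python
def local_update(weights, data, lr=0.05, epochs=20):
    """Runs inside one organisation: gradient steps on its private (x, y) pairs.

    Only the updated weights and the sample count leave the organisation.
    """
    w, b = weights
    n = len(data)
    for _ in range(epochs):
        # Gradients of mean squared error for the model y = w * x + b.
        grad_w = sum(2 * (w * x + b - y) * x for x, y in data) / n
        grad_b = sum(2 * (w * x + b - y) for x, y in data) / n
        w -= lr * grad_w
        b -= lr * grad_b
    return (w, b), n


def federated_average(updates):
    """Combine local models, weighting each by how many samples it saw."""
    total = sum(n for _, n in updates)
    w = sum(weights[0] * n for weights, n in updates) / total
    b = sum(weights[1] * n for weights, n in updates) / total
    return (w, b)


def train(private_datasets, rounds=50):
    """Coordinator loop: it sees model weights, never the raw data."""
    weights = (0.0, 0.0)
    for _ in range(rounds):
        updates = [local_update(weights, data) for data in private_datasets]
        weights = federated_average(updates)
    return weights
```

The point of the structure is that `train` only ever handles weights and sample counts. Each dataset is read solely by `local_update`, which is the part that would run where the data lives.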

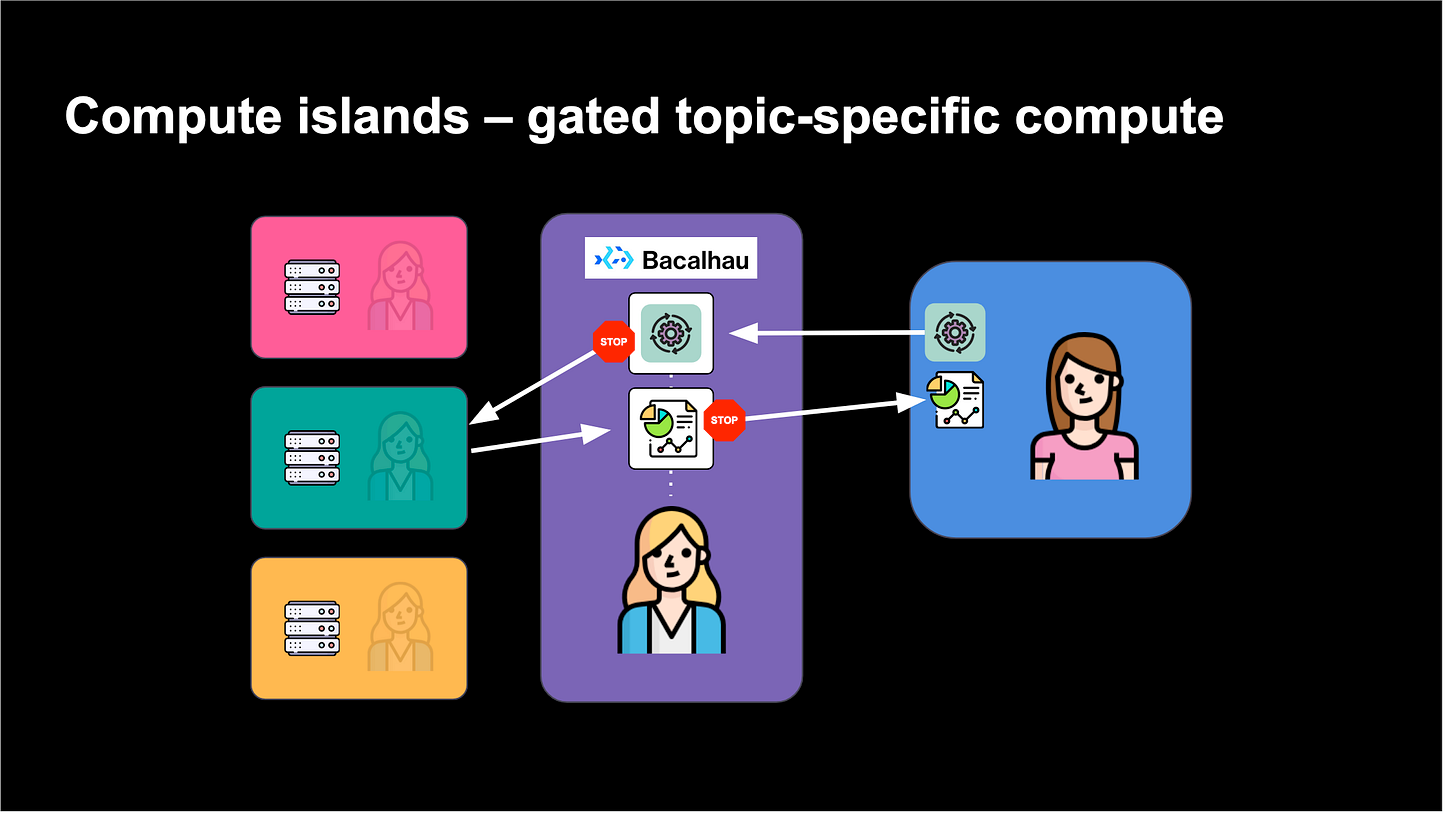

Compute islands for topic-specific analysis

And finally, finer-grained control over job execution provided by Bacalhau now enables moderators to act as gateways into compute islands. In this structure, independent compute providers and data owners interested in providing their resources for a specific purpose can delegate job authorisation to a trusted moderator.

For example, a collaboration of scientists who’ve individually collected healthcare data that can help cure cancer can make their data and compute available by putting their trust in an external moderator. The moderator will only accept jobs that conform to an agreed policy – in this case, only allowing jobs that contribute to new cancer treatments.

In this way, the scientists can contribute to a wider beneficial goal without the overhead of dealing with external access requests, which they delegate to the moderator. With Bacalhau’s strong audit log, the scientists can verify at a later date that the moderator has acted according to the agreed policy.
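One way to picture that later verification is to replay the moderator's logged decisions against the agreed policy. The sketch below assumes a simplified audit record with a job identifier, a declared purpose, and a decision. This record shape, the `AuditEntry` type, and the `policy_violations` helper are hypothetical and do not reflect the actual format of Bacalhau's audit log.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AuditEntry:
    # Hypothetical audit record; real logs will carry more detail.
    job_id: str
    declared_purpose: str
    decision: str  # "approved" or "rejected"


# The policy the scientists agreed with the moderator (illustrative value).
AGREED_PURPOSES = {"cancer-treatment-research"}


def policy_violations(log):
    """Return IDs of approved jobs whose purpose falls outside the agreed policy."""
    return [
        entry.job_id
        for entry in log
        if entry.decision == "approved"
        and entry.declared_purpose not in AGREED_PURPOSES
    ]
```

An empty result means every approval in the log was consistent with the policy. Anything else gives the scientists a specific list of jobs to raise with the moderator.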

Bacalhau is the future of data sharing

We’re very excited about the release of job and data moderation features in Bacalhau 1.0! We’re confident compute over data represents a new way of thinking about data sharing – in short, keep data safe by not sharing it at all!

We’re already working with companies and governments who are recognising the potential offered by moderated computation across trust boundaries. If you’d like to learn more about how these features can work for you, join us on Bacalhau Slack or get in touch with us directly for a conversation.