Setting up a Bacalhau Cluster - Tips and Best Practices

A walkthrough of creating your own Bacalhau cluster quickly, with tips and best practices for securing and managing it.

Bacalhau utilizes peer-to-peer networks of nodes to enable decentralized communication between compute instances. While the demo network is public, you can easily set up a private network that will be isolated and enable you to execute jobs and tasks without using public nodes or sharing your data outside your organization.

In this article, you will explore:

Setting up your own Bacalhau Cluster

Securing your cluster from unauthorized access

Advanced cluster options, such as storage and publishing, node management, and cluster networking

Best practices for your production use cases

Understanding Bacalhau Clusters

Your Bacalhau Cluster is a network made up of two essential node types: Requester Nodes and Compute Nodes.

The Requester node handles user requests and manages both the nodes in your network and the jobs you send. It receives incoming requests, assigns jobs to compute nodes, discovers compute nodes within your network, and monitors the lifecycle of your jobs.

The Compute node is responsible for executing jobs and publishing the job results to predefined destinations. It independently evaluates incoming jobs and determines if it can execute job requests.

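Beyond these two node types, the cluster relies on a set of pluggable components: the transport protocol that connects nodes, executors that run workloads, storage sources that provide job inputs, and publishers that deliver job results. Together, these ensure efficient communication and execution between the nodes in your cluster.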

Step 1 - Deploying a Bacalhau Cluster (Quick Start)

You can quickly spin up a private Bacalhau cluster that is isolated from any public network, allowing you to execute private jobs and process sensitive data on your cloud and on-premises hosts. To do this, follow these steps:

Install the Bacalhau service on every host instance you want in your cluster. In-depth installation steps are available in the Bacalhau docs.

Run the following command on one host instance:

bacalhau serve --node-type requester

This instance will serve as the requester node for your private network and listen for incoming connections. Note the instance's public IP address: all requests to the requester node will be sent to it. How you obtain the public IP depends on the type of instance you're using; on Windows and Linux instances you can query it with:

curl https://api.ipify.org

If you are using a cloud deployment, you can also find the public IP in your provider's console, e.g. AWS or Google Cloud.

Next, create a compute node to execute jobs and connect it to the requester node. On the compute instance, install Docker and run the following command to add the node to your network:

bacalhau serve --node-type=compute --orchestrators=<Public-IP-of-Requester-Node>

Check access to your network by listing the nodes in your cluster:

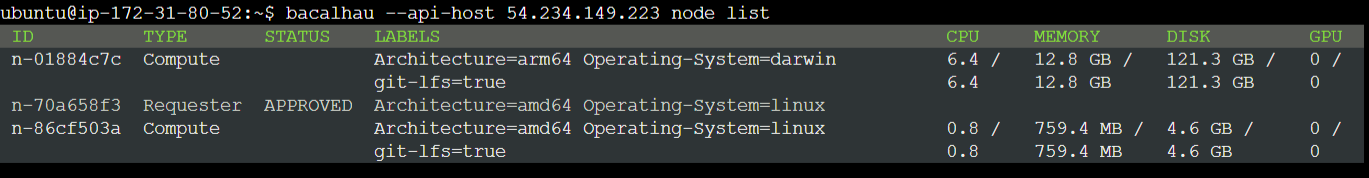

bacalhau --api-host <Public-IP-of-Requester-Node> node list

With this, a basic cluster of at least one requester node and one compute node has been set up. You can execute Bacalhau jobs normally on this cluster, isolating your data from public access.
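To keep every host on the same invocation, the quick-start commands can be wrapped in a small script. This is a sketch under stated assumptions: it assumes bash, the default NATS port 4222, and the explicit nats://<ip>:4222 orchestrator address form that this article uses for labeled nodes. The function names and the DRY_RUN switch are hypothetical conveniences, not part of Bacalhau.

```shell
#!/usr/bin/env bash
# Hypothetical helpers wrapping the quick-start commands.
# DRY_RUN defaults to 1: commands are printed, not executed.
# Set DRY_RUN=0 to actually run them. NATS_PORT defaults to 4222,
# the requester's default NATS port.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Run on the requester host.
start_requester() {
  run bacalhau serve --node-type=requester
}

# Run on each compute host; $1 is the requester's public IP.
start_compute() (
  requester_ip="${1:?usage: start_compute <requester-ip>}"
  port="${NATS_PORT:-4222}"
  run bacalhau serve --node-type=compute \
    --orchestrators="nats://${requester_ip}:${port}"
)

# Run from any client machine to list the nodes the requester knows about.
list_nodes() (
  requester_ip="${1:?usage: list_nodes <requester-ip>}"
  run bacalhau --api-host "$requester_ip" node list
)
```

After sourcing the file, `start_compute 203.0.113.10` only prints the command it would run; `DRY_RUN=0 start_compute 203.0.113.10` executes it. Defaulting to a dry run makes it easy to review the exact command lines before starting long-running services.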

Step 2 - Secure Your Network

Bacalhau offers several options for securing your cluster, including the authentication and authorization features added in v1.3.0. Your cluster has no default authentication and authorization policy; instead, you can implement any policy you need as a Rego file.

Note: Rego is the declarative policy language of Open Policy Agent (OPA), a Cloud Native Computing Foundation project commonly used to define access control for systems such as Kubernetes and Envoy.

Bacalhau has a repository of ready-made authentication and authorization policies.

Here’s an example of setting an authentication policy for your cluster using the ready-made secret/token policy. First download the policy, then point your configuration at it:

curl -sL --create-dirs -o ~/.bacalhau/ask_ns_secret.rego https://raw.githubusercontent.com/bacalhau-project/bacalhau/main/pkg/authn/ask/ask_ns_secret.rego

bacalhau config set Auth.Methods '{Method: token, Policy: {Type: ask, PolicyPath: ~/.bacalhau/ask_ns_secret.rego}}'

Apart from authentication and authorization, Bacalhau also supports TLS to encrypt communication within your network. You can use the following commands on your requester and compute nodes to enable automatic TLS via Let's Encrypt.

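Note: This assumes your requester node has a stable public IP address, that the domain passed to AutoCert (bacalhau.tech in this example; replace it with your own) resolves to that address, and that port 443 is open on both nodes.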

# On your requester node (encryption takes effect after a restart)

bacalhau config set Node.ServerAPI.TLS.AutoCert bacalhau.tech

bacalhau config set Node.ServerAPI.Port 443

# On your compute node

bacalhau config set Node.ClientAPI.ClientTLS.UseTLS true

bacalhau config set Node.ClientAPI.Port 443

Bacalhau also supports self-signed TLS certificates.
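Once the requester has restarted, it is worth confirming that it really serves a certificate. The sketch below assumes openssl is installed and that the host you pass is the domain configured with Node.ServerAPI.TLS.AutoCert; the function name check_tls is hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the certificate served by a TLS endpoint.
# Usage: check_tls <host> [port]   (port defaults to 443)
# Assumes openssl is available on the machine running the check.

check_tls() (
  host="${1:-}"
  port="${2:-443}"
  if [ -z "$host" ]; then
    echo "usage: check_tls <host> [port]" >&2
    exit 2
  fi
  # -servername sends SNI so the server can select the right certificate.
  # `echo |` closes stdin so s_client exits right after the handshake.
  # The second openssl prints subject, issuer and validity dates;
  # it fails (non-zero exit) if no certificate was received.
  echo | openssl s_client -connect "${host}:${port}" -servername "$host" 2>/dev/null \
    | openssl x509 -noout -subject -issuer -dates
)
```

For example, `check_tls bacalhau.tech` should report an issuer whose organization is Let's Encrypt and a notAfter date in the future. An error here usually means the domain does not resolve to the requester or port 443 is blocked.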

Step 3 - Setting up Publishers

By default, your job results are published and stored locally on your compute nodes. This behavior is primarily intended for testing, because accessing the results requires the client to be on the same network as the compute node. For production use cases, use one of the other Bacalhau-supported destinations, such as S3-compatible services. These aren't limited to AWS S3: they also include Google Cloud Storage (through its S3-compatible XML API), Azure Blob Storage behind an S3-compatible gateway, and open-source options like MinIO.

Your compute nodes need credentials to access the publisher. You can provide them by setting environment variables such as AWS_ACCESS_KEY_ID and AWS_SECRET_ACCESS_KEY, by populating a credentials file on the compute node (e.g. ~/.aws/credentials), or, if you are using cloud instances, by attaching an IAM role to your compute nodes.
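If you choose the credentials-file option, the file uses the standard AWS INI format. This sketch writes it with owner-only permissions. The function name and its arguments are hypothetical, the key values are placeholders, and the file should belong to the user that runs Bacalhau on the compute node.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write S3 credentials for the user running Bacalhau.
# Usage: write_aws_credentials <access-key-id> <secret-access-key> [file]
# The file defaults to ~/.aws/credentials. Any existing file is overwritten.
# Alternative: export AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY in the
# environment of the Bacalhau process instead of writing a file.

write_aws_credentials() (
  key_id="${1:?usage: write_aws_credentials <key-id> <secret> [file]}"
  secret="${2:?usage: write_aws_credentials <key-id> <secret> [file]}"
  file="${3:-$HOME/.aws/credentials}"
  mkdir -p "$(dirname "$file")"
  # umask 077 keeps a newly created file private from the start;
  # chmod covers the case where the file already existed.
  umask 077
  cat > "$file" <<EOF
[default]
aws_access_key_id = ${key_id}
aws_secret_access_key = ${secret}
EOF
  chmod 600 "$file"
)
```

The function body runs in a subshell, so the `umask` change does not leak into the calling shell. On cloud instances, an IAM role is usually preferable because no long-lived secret is stored on disk.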

You can specify your chosen publisher declaratively in YAML, as in a job specification:

Publisher:
  Type: "s3"
  Params:
    Bucket: "my-task-results"
    Key: "task123/result.tar.gz"
    Endpoint: "https://s3.us-west-2.amazonaws.com"

Or within your job execution command:

bacalhau docker run -p s3://bucket/key,opt=endpoint=http://s3.example.com,opt=region=us-east-1 ubuntu …

S3-compatible storage can also serve as an input source for your jobs, with a similar configuration:

InputSources:
  - Source:
      Type: "s3"
      Params:
        Bucket: "my-bucket"
        Key: "data/"
        Endpoint: "https://storage.googleapis.com"
    Target: "/data"

Advanced Cluster Tips

Bacalhau CLI commands accept a number of flags that give you finer control over your cluster. Let’s go through a few of them.

You can tailor the configuration to each kind of compute instance with the “bacalhau config auto-resources” command. It detects the machine's hardware and sets compute resource values to match, so that Bacalhau jobs run efficiently within each node’s hardware limits.

By default, your Bacalhau cluster nodes are assigned random names. You can instead set names and labels for your nodes with the --name and --labels flags, which makes your nodes easier to organize and manage. Node labels can also be used to filter and select specific nodes.

For example, you can start a compute node with a specific label:

bacalhau serve --node-type=compute --orchestrators=nats://18.207.213.68:4222 --labels key1=computelead

Then use that label to select the node your job should run on:

bacalhau docker run --selector key1=computelead ubuntu echo Hello world

Bacalhau uses NATS for inter-node communication: requester nodes act as NATS servers and listen on port 4222 by default. You can change this port with the --network-port flag.
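Changing the port only works if both sides agree: the requester's --network-port and the port in each compute node's --orchestrators address must match. This sketch keeps the two in sync through one variable. Port 4333 and the function names are hypothetical, and the nats://<ip>:<port> address form follows the labeled-node example in this article.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for running NATS on a non-default port.
# NATS_PORT defaults to 4333 (an arbitrary example); open it in your
# firewall or security group, e.g. `sudo ufw allow 4333/tcp` on ufw hosts.
# DRY_RUN defaults to 1: commands are printed, not executed.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

# Requester: listen for NATS connections on the chosen port.
start_requester() (
  port="${NATS_PORT:-4333}"
  run bacalhau serve --node-type=requester --network-port="$port"
)

# Compute node: dial the requester on that same port.
start_compute() (
  requester_ip="${1:?usage: start_compute <requester-ip>}"
  port="${NATS_PORT:-4333}"
  run bacalhau serve --node-type=compute \
    --orchestrators="nats://${requester_ip}:${port}"
)
```

Deriving both command lines from a single NATS_PORT value avoids the most common failure after a port change: compute nodes still trying to reach the requester on 4222.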

You can also configure compute nodes to filter the job requests they accept. A job selection policy can, for example, accept only jobs whose input data is available locally, or delegate the decision to an external program, allowing for automated, conditional acceptance of jobs.
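An external program used this way is essentially a yes/no filter. The sketch below assumes your Bacalhau version provides the `--job-selection-probe-exec` flag on `bacalhau serve` (confirm with `bacalhau serve --help`), that the job arrives as JSON on stdin or in the BACALHAU_JOB_SELECTION_PROBE_DATA environment variable, and that exit code 0 means "accept". The function name and the ALLOWED_IMAGE_PREFIX variable are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical job-selection probe: accept only jobs whose Docker image
# starts with ALLOWED_IMAGE_PREFIX (default "ubuntu").
# Assumed contract: job JSON on stdin (or in BACALHAU_JOB_SELECTION_PROBE_DATA);
# exit 0 = accept the job, non-zero = reject it.
# This is a crude text match; a production probe should parse the JSON (e.g. with jq).

probe_job() (
  prefix="${ALLOWED_IMAGE_PREFIX:-ubuntu}"
  # Read stdin only when the environment variable is not set.
  job_json="${BACALHAU_JOB_SELECTION_PROBE_DATA:-$(cat)}"
  # Match "Image":"<prefix>..." with or without a space after the colon.
  printf '%s' "$job_json" | grep -q "\"Image\": *\"${prefix}"
)
```

To use it, save the function in an executable file that ends with a call to `probe_job` (for example `/usr/local/bin/bacalhau-probe.sh`, a hypothetical path) and pass that path to the compute node with `--job-selection-probe-exec /usr/local/bin/bacalhau-probe.sh`.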

Best Practices for Production Use Cases

Your private cluster can be quickly set up for testing packaged jobs and tweaking data processing pipelines. However, when using a private cluster in production, here are a few considerations to note.

Run the Bacalhau process as a dedicated system user with limited permissions. This enhances security and reduces the risk of unauthorized access to critical system resources. If you provision hosts with a tool such as Terraform, use a service file (for example, a systemd unit) to manage the Bacalhau process, so that the correct user is specified and used consistently.
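A minimal systemd unit for a compute node might look like the sketch below. The following values are assumptions to adjust for your hosts:

- the user and group names `bacalhau`
- the binary path `/usr/local/bin/bacalhau`
- the repository location `/data/bacalhau`, set through the BACALHAU_DIR environment variable
- the requester address on NATS port 4222

The helper function name is hypothetical.

```shell
#!/usr/bin/env bash
# One-time setup, run as root (standard Linux tools):
#   useradd --system --no-create-home --shell /usr/sbin/nologin bacalhau
#   usermod -aG docker bacalhau   # compute nodes need access to the Docker daemon
#
# Hypothetical helper: render a systemd unit for a Bacalhau compute node.
# Usage: write_unit_file <output-path> <requester-ip>

write_unit_file() (
  out="${1:?usage: write_unit_file <path> <requester-ip>}"
  requester_ip="${2:?usage: write_unit_file <path> <requester-ip>}"
  cat > "$out" <<EOF
[Unit]
Description=Bacalhau compute node
Wants=network-online.target
After=network-online.target docker.service

[Service]
# Run as the unprivileged system user, never as root.
User=bacalhau
Group=bacalhau
# Assumed: BACALHAU_DIR selects where Bacalhau keeps its repository.
Environment=BACALHAU_DIR=/data/bacalhau
ExecStart=/usr/local/bin/bacalhau serve --node-type=compute --orchestrators=nats://${requester_ip}:4222
Restart=on-failure
RestartSec=5

[Install]
WantedBy=multi-user.target
EOF
)
```

For example, run `write_unit_file /tmp/bacalhau.service 203.0.113.10`, then `sudo install -m 644 /tmp/bacalhau.service /etc/systemd/system/`, `sudo systemctl daemon-reload` and `sudo systemctl enable --now bacalhau`. Because the unit is rendered from one template, every host runs Bacalhau under the same user and options.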

Create an authentication policy for your clients. A dedicated authentication file or policy makes it easier to keep communication within your network secure: clients can authenticate themselves, and you can restrict which Bacalhau API endpoints unauthorized users can reach.

Consistency is a key consideration when deploying decentralized tools such as Bacalhau. Use an installation script to pin a specific version of Bacalhau and define your deployment steps, so that every host instance runs the same version and has all the resources it needs to operate efficiently.
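One way to pin the version is to download a specific release from GitHub rather than "latest". This sketch assumes the release assets are named `bacalhau_<version>_<os>_<arch>.tar.gz` under `https://github.com/bacalhau-project/bacalhau/releases/download/<version>/`, and that each archive contains a top-level `bacalhau` binary. Check the releases page for the exact asset names before relying on it. The version v1.3.0 and the function names are illustrative.

```shell
#!/usr/bin/env bash
# Hypothetical helpers to install one pinned Bacalhau release on every host.
# Assumed asset layout: bacalhau_<version>_<os>_<arch>.tar.gz containing ./bacalhau

release_url() (
  version="$1"; os="$2"; arch="$3"
  echo "https://github.com/bacalhau-project/bacalhau/releases/download/${version}/bacalhau_${version}_${os}_${arch}.tar.gz"
)

install_pinned() (
  set -e
  version="${BACALHAU_VERSION:-v1.3.0}"
  os="$(uname -s | tr '[:upper:]' '[:lower:]')"   # e.g. linux, darwin
  case "$(uname -m)" in
    x86_64) arch=amd64 ;;
    aarch64|arm64) arch=arm64 ;;
    *) echo "unsupported architecture: $(uname -m)" >&2; exit 1 ;;
  esac
  tmp="$(mktemp -d)"
  # -f makes curl fail on HTTP errors instead of saving an error page.
  curl -fsSL -o "$tmp/bacalhau.tar.gz" "$(release_url "$version" "$os" "$arch")"
  tar -xzf "$tmp/bacalhau.tar.gz" -C "$tmp"
  sudo install -m 0755 "$tmp/bacalhau" /usr/local/bin/bacalhau
)
```

Set BACALHAU_VERSION once in your provisioning scripts and call `install_pinned` on each host. Running `bacalhau version` afterwards is a quick way to confirm that every node reports the same release.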

Separate concerns in your cloud deployments by mounting the Bacalhau repository on a non-boot disk. This helps prevent instability on shutdown or restart and can improve performance on your host instances.
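Moving the repository to a data disk takes only a few standard Linux commands. This sketch uses the following hypothetical values:

- the disk `/dev/sdb` (run `lsblk` first, since `mkfs` erases the device)
- the mount point `/data`
- a dedicated `bacalhau` user that owns the repository directory

It defaults to a dry run so you can review the commands before running them as root.

```shell
#!/usr/bin/env bash
# Hypothetical helper: prepare a dedicated data disk for the Bacalhau repository.
# DISK defaults to /dev/sdb and MOUNT_POINT to /data (both assumptions).
# DRY_RUN defaults to 1: commands are printed, not executed.

run() {
  if [ "${DRY_RUN:-1}" = "1" ]; then
    echo "+ $*"
  else
    "$@"
  fi
}

setup_data_disk() (
  set -e
  disk="${DISK:-/dev/sdb}"
  mnt="${MOUNT_POINT:-/data}"
  run mkfs.ext4 "$disk"            # destroys any data on the device
  run mkdir -p "$mnt"
  run mount "$disk" "$mnt"
  # Persist the mount; nofail lets the host boot even if the disk is missing.
  # (Referencing the disk by UUID in fstab is more robust than a device name.)
  run sh -c "echo '$disk $mnt ext4 defaults,nofail 0 2' >> /etc/fstab"
  run mkdir -p "$mnt/bacalhau"
  run chown bacalhau:bacalhau "$mnt/bacalhau"
)
```

Review the output of `setup_data_disk`, then run `sudo DRY_RUN=0 bash -c '. ./data-disk.sh && setup_data_disk'` (hypothetical file name). Afterwards, start Bacalhau with BACALHAU_DIR=/data/bacalhau in its environment; this sketch assumes that variable sets the repository location.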

Conclusion

In this article, you have walked through the process of setting up a private Bacalhau cluster, offering you an isolated network for your sensitive jobs and confidential data. Additionally, you have gained insights into the helpful advanced customization options available with Bacalhau, such as node names and labels, networking parameters, and job filtering policies. You also explored some best practices for deploying your Bacalhau private cluster in production.

Whether you are working with sensitive data, running compute-intensive tasks, or simply want more control over your Bacalhau network, setting up a private cluster offers numerous advantages. With its decentralized architecture and flexibility, Bacalhau provides a powerful tool for executing complex workloads efficiently and securely, wherever your data resides.

If you’re interested in learning more about distributed computing with Bacalhau and how it can benefit your work, there are several ways to connect with us. Visit our website, sign up for our bi-weekly office hours, join our Slack or send us a message.

How to Get Involved

We're looking for help in various areas. If you're interested in helping, there are several ways to contribute. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open-source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!
