Reproducible pipelines, decentralised reputation and more: CoD Summit² day 2

All the highlights from the day 2 “Platform” track of the Compute-over-Data (CoD) Summit, held at the Hyatt Regency in Lisbon on 3rd November 2022.

After all the inspiring talks on day 1 of the summit, there was a lot to talk about on day 2! The “Platform” track was an unconference-style track covering topics of interest to CoD platform developers. In the room were teams from CoD platforms such as Bacalhau, Golem, Koii, CharityEngine, and many more.

Remembering the world with content-addressable jobs

Radosław Tereszczuk of Golem presented his ideas about making job executions in CoD environments content-addressable. In this scheme, every job has an identifier generated by hashing all of the code and input data required to run it. Two jobs doing the same work over the same data have the same hash, even if they run on different machines that have never communicated.
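A minimal sketch of the idea, assuming a job is described by its code bytes plus a set of named input blobs (a real platform would more likely hash IPFS CIDs or container image digests). The `job_id` helper is hypothetical and is not Golem's or any other platform's API:

```python
import hashlib
import json


def sha256_hex(data: bytes) -> str:
    return hashlib.sha256(data).hexdigest()


def job_id(code: bytes, inputs: dict[str, bytes]) -> str:
    """Content address for a job: a digest over its code and all of its inputs."""
    # Describe the job by the digests of its code and of each named input, then
    # serialise that description canonically (sorted keys, fixed separators) so
    # two machines that never communicated still produce identical bytes.
    spec = {
        "code": sha256_hex(code),
        "inputs": {name: sha256_hex(data) for name, data in inputs.items()},
    }
    canonical = json.dumps(spec, sort_keys=True, separators=(",", ":"))
    return sha256_hex(canonical.encode("utf-8"))


# Two independently constructed jobs doing the same work over the same data
a = job_id(b"wc -l", {"corpus": b"line one\nline two\n"})
b = job_id(b"wc -l", {"corpus": b"line one\nline two\n"})
c = job_id(b"wc -l", {"corpus": b"line one\n"})
print(a == b, a == c)  # True False
```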

David Aronchick of Protocol Labs also presented his paper on this topic, which refers to a job and all of its inputs as a “curried function”, i.e. a function that can be invoked with no further inputs.

If the execution of jobs is also deterministic, then each job hash uniquely identifies a set of outputs too, because running the same code over the same data deterministically produces the same output. This makes it possible to see where data output by one job is used as input to another, and how all of the jobs link together in a call tree.

Having hashable jobs is a precursor to three valuable qualities:

The job can be stored as an artifact that is entirely reproducible: if someone wants to run the same job later to reproduce the result, they can be confident that the result they get will be the same as the first time it was run. David’s paper shows this to be valuable for decentralized science, where verifying the results in scientific papers is often challenging.

Outputs from previous executions of the same job can be reused: if there’s a mechanism for discovering previous job outputs, those results can be used instead of running the same work again. Applied globally, this technique is effectively a “global cache” for all computation!

Economic incentivization of useful data: using the call tree of jobs and their outputs, it would be possible to see how many jobs ultimately refer to a piece of data (either directly or via the output of another job). This opens the door to directly rewarding the creation and storage of provably useful data.
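To illustrate the last point, the sketch below walks a made-up call tree and counts, for each piece of data, how many jobs depend on it either directly or through the outputs of other jobs. It assumes (hypothetically) that each job records a list of input content IDs and a single output content ID; the job names and IDs are invented for the example:

```python
from collections import defaultdict


def downstream_job_counts(jobs: dict[str, tuple[list[str], str]]) -> dict[str, int]:
    """Map each content ID to the number of jobs that depend on it, directly or transitively.

    `jobs` maps a job ID to (input content IDs, output content ID).
    """
    # Index which jobs consume each piece of data directly.
    consumers: dict[str, set[str]] = defaultdict(set)
    for job, (inputs, _output) in jobs.items():
        for cid in inputs:
            consumers[cid].add(job)

    def collect(cid: str, seen: set[str]) -> set[str]:
        # Follow data -> consuming job -> that job's output -> its consumers, ...
        for job in consumers.get(cid, ()):
            if job not in seen:
                seen.add(job)
                collect(jobs[job][1], seen)
        return seen

    all_cids = set(consumers) | {output for _inputs, output in jobs.values()}
    return {cid: len(collect(cid, set())) for cid in all_cids}


call_tree = {
    "clean": (["raw"], "cleaned"),
    "train": (["cleaned", "labels"], "model"),
    "eval": (["model", "cleaned"], "report"),
}
counts = downstream_job_counts(call_tree)
print(counts["raw"], counts["labels"], counts["report"])  # 3 2 0
```

Here the raw dataset is (indirectly) useful to every job in the tree, which is the kind of signal a reward scheme could build on.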

Hashable jobs are also easy to version. Matthew Blumberg from CharityEngine mentioned that having versioned jobs was another useful quality of any execution system.

It’s clear from the discussion that linked jobs are something many CoD providers are thinking about and starting to implement. A common implementation across CoD platforms is in everyone’s best interest: the more execution that is hashable and discoverable, the more opportunity there is to reuse past outputs and incentivize useful data. If everyone structures and executes jobs in the same way, they can all benefit from work happening on other platforms, for free.

To realize the benefits, the CoD community needs two things:

Standardization on a job spec (or “invocation spec”)

A discovery mechanism that can relate invocation specs to cached outputs

A standard invocation spec from the IPVM project

Brooklyn Zelenka of Fission presented a talk on a new project to realize exactly this vision, called the InterPlanetary Virtual Machine (IPVM). The goal is to put deterministic, verifiable execution of WebAssembly into every IPFS node, to support automatic codecs and general data transformation straight from IPFS.

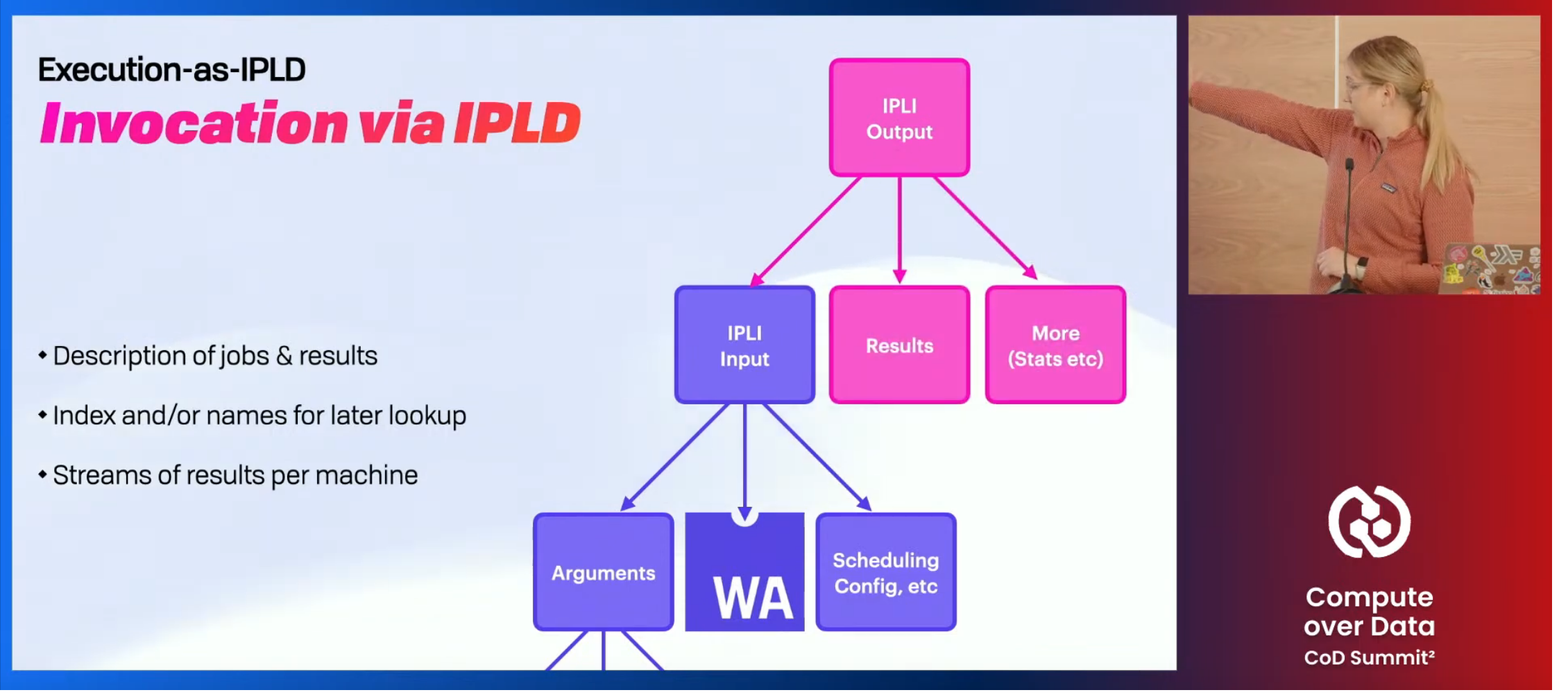

Included as part of the project is an invocation spec modeled as an InterPlanetary Linked Data (IPLD) document, which allows content produced by different parties to be linked together. One document models the job code and its inputs, and another models the outputs, linking back to the inputs and to any side effects that were generated.

IPVM is also aiming for an “impure effect” concept, which externalizes any non-deterministic operation as an effect executed outside the execution environment; Radosław referred to these as “services”. Effects don't have to be declared upfront; they can be decided while a job is running, which means that jobs can take different actions based on their input data.

Effects are signed by the node to prove that they were executed, and can also rely on proactive capabilities granted by the user. The same system can handle privileged operations within a bigger job: for example, sending an email on a user's behalf can be modeled as an effect that only certain authorized nodes are able to run.
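The general shape of externalized effects can be sketched as follows. This is not IPVM's actual interface: the `Effect` type, the `send_email` effect name and the threshold are all hypothetical, and signing is omitted. The job stays pure and only describes the side effects it wants (deciding at run time, based on its input), while the host node performs only the effects it is authorized for:

```python
from dataclasses import dataclass


@dataclass
class Effect:
    """A side effect described as data rather than performed (hypothetical shape)."""
    kind: str
    payload: dict


def summarise_orders(orders: list[dict]) -> tuple[int, list[Effect]]:
    """Pure, deterministic job: the same orders always give the same total and effects."""
    total = sum(order["amount"] for order in orders)
    effects: list[Effect] = []
    # Whether an effect is requested depends on the input data, decided while running.
    if total > 1000:
        effects.append(Effect("send_email", {"to": "owner@example.com", "total": total}))
    return total, effects


def run_on_node(job_output: tuple[int, list[Effect]], allowed: set[str]):
    """Host side: perform only the effects this node is authorised for; defer the rest."""
    total, effects = job_output
    performed, deferred = [], []
    for effect in effects:
        if effect.kind in allowed:
            performed.append(effect)  # a real node would act here and sign a receipt
        else:
            deferred.append(effect)   # left for a node holding the right capability
    return total, performed, deferred


output = summarise_orders([{"amount": 600}, {"amount": 700}])
print(len(run_on_node(output, set())[2]), len(run_on_node(output, {"send_email"})[1]))  # 1 1
```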

IPVM will also support payments via "state channels" that allow payment to be distributed dynamically amongst all the players that take part in executing a job.

A key question is how to make such a standardization effort successful while balancing collective agreement against individual innovation. Brooklyn explained that past experience with the UCAN standards has shown the value of identifying the minimum needed for a useful standard and leaving gaps and extension points. She invited anyone interested in IPVM to join the project's regular community calls.

Make past work discoverable with Octostore

Delegates also discussed how past job results could be archived and retrieved. Although a job should produce the same content on every run, it’s not possible to know the content hash of its outputs without actually running the job, so we can’t simply look the outputs up in IPFS by hash. Instead, there needs to be a separate mapping from job specs (and their inputs) to outputs.

Luke Marsden of Bacalhau suggested that before trying to develop decentralized ways to do this, we should first prove the concept with a simple approach: a centralized service that gives out API keys to interested parties, initially the members of the Compute over Data Working Group. David proposed “Octostore” as the name of this service.
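A toy version of such a service might look like the sketch below. The class and function names are hypothetical (not a published Octostore API), storage is an in-memory dictionary, and API keys are only checked on writes. Recording a set of outputs per job, rather than a single one, keeps conflicting reports (from non-determinism or a dishonest executor) visible instead of silently overwriting them:

```python
from typing import Callable


class ResultStore:
    """In-memory stand-in for a centralised registry mapping job IDs to output CIDs."""

    def __init__(self, api_keys: set[str]):
        self._api_keys = set(api_keys)
        self._outputs: dict[str, set[str]] = {}

    def publish(self, api_key: str, job_id: str, output_cid: str) -> None:
        # Only holders of an issued API key may report results.
        if api_key not in self._api_keys:
            raise PermissionError("unknown API key")
        self._outputs.setdefault(job_id, set()).add(output_cid)

    def lookup(self, job_id: str) -> set[str]:
        # An empty set means nobody has reported running this job yet.
        return set(self._outputs.get(job_id, set()))


def get_or_run(store: ResultStore, api_key: str, job_id: str, run: Callable[[], str]) -> str:
    """Reuse a reported output if exactly one is known; otherwise run the job and publish."""
    known = store.lookup(job_id)
    if len(known) == 1:
        return next(iter(known))
    output_cid = run()
    store.publish(api_key, job_id, output_cid)
    return output_cid


store = ResultStore(api_keys={"cod-wg-member"})
runs = []


def expensive_job() -> str:
    runs.append(1)
    return "bafy-output"  # placeholder content ID


first = get_or_run(store, "cod-wg-member", "job-123", expensive_job)
second = get_or_run(store, "cod-wg-member", "job-123", expensive_job)
print(first == second, len(runs))  # True 1
```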

The CoD Working Group community will be taking this work forward over the next few months – come and join us on Slack if you'd like to be involved!

Trusting results through reputation and incentives

One key assumption for reusing past results (or in fact, any results at all) is that they can be trusted. In a decentralized system without centralized enforcement of proper behaviour, users and job executors can lie with impunity. If a job executor can find a way to get paid without actually doing any work, then the network will be flooded with bad executors and users' trust in the network will disappear.

Almost every system of this type includes some idea of “auditing”: carrying out explicit checks that nodes in the network are behaving properly. In some systems users carry out these checks manually; in others, auditing is built into the network.

Audits are pure overhead on top of useful work, because they normally involve computing something the user doesn’t actually need, just to prove correctness. Successful networks maximize useful work (e.g. computing something for the first time) and minimize audits (e.g. computing something again to check it).

Two of the key levers in reducing the amount of auditing required are:

Reputation: having a network react to how a user or executor has behaved in the past. For example, an executor who has repeatedly fabricated results might no longer be selected for jobs.

Incentives: designing the economics of participating in the network so that good behaviour is financially favourable and bad behaviour is not. Ideally, for example, lying repeatedly would take more effort than simply behaving properly.
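A back-of-the-envelope model makes the interplay between audits and incentives concrete. Assume, purely for illustration, that an executor earns a fixed reward per job, honest work has a cost, cheating costs nothing, and an audit happens with probability equal to the audit rate; a cheater who is audited forfeits the reward and also loses a penalty (such as confiscated stake). Under these assumptions honesty pays at least as well as cheating whenever the audit rate is at least cost / (reward + penalty):

```python
def expected_profit(reward: float, cost: float, audit_rate: float,
                    penalty: float, honest: bool) -> float:
    """Expected profit per job under the simplified model described above."""
    if honest:
        return reward - cost
    # A cheater skips the work; if audited they get no reward and lose `penalty`.
    return (1 - audit_rate) * reward - audit_rate * penalty


def min_audit_rate(reward: float, cost: float, penalty: float) -> float:
    """Smallest audit rate p at which honesty is at least as profitable as cheating.

    reward - cost >= (1 - p) * reward - p * penalty  <=>  p >= cost / (reward + penalty)
    """
    return cost / (reward + penalty)


print(min_audit_rate(reward=10, cost=4, penalty=0))   # 0.4
print(min_audit_rate(reward=10, cost=4, penalty=70))  # 0.05
```

In this toy model, raising the penalty from 0 to 70 cuts the audit rate needed to keep honest executors ahead from 40% to 5%, which is one way to read the claim that incentives reduce the amount of auditing required.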

There was a lot of discussion about how to design network protocols with these techniques and the different trade-offs between them.

A starting point for most reputation systems is to favour participants with a consistent track record, so that those with a better history are selected for jobs more often and good behaviour pays off in the long term. However, we were reminded that any reputation system should resist recentralisation, so that big established players do not dominate and small players can enter the market and take part even with no reputation.

“Staking” was brought up as an alternative to reputation. In a staking system, anyone wanting to take part in the network (either as a job submitter or as a runner) has to put down a deposit that can be confiscated if they are found to be behaving badly. This bypasses the need for reputation entirely, as bad behaviour carries a direct financial cost. Levi Rybalov suggested that staking is faster to bootstrap than running many audits and should improve the efficiency of a network.
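A minimal staking ledger could look like the following sketch. The class name, the minimum stake, the slashing fraction and the use of integer amounts (in a token's smallest unit) are illustrative assumptions, not any particular network's rules. Note that a newcomer with no history can take part as soon as they have deposited enough:

```python
class StakeRegistry:
    """Hypothetical deposit ledger: participants lock a stake that can be confiscated."""

    def __init__(self, min_stake: int):
        self.min_stake = min_stake
        self.stakes: dict[str, int] = {}

    def deposit(self, who: str, amount: int) -> None:
        if amount <= 0:
            raise ValueError("deposit must be positive")
        self.stakes[who] = self.stakes.get(who, 0) + amount

    def can_participate(self, who: str) -> bool:
        # No reputation needed: enough stake at risk is the only entry requirement.
        return self.stakes.get(who, 0) >= self.min_stake

    def slash(self, who: str, fraction: float) -> int:
        """Confiscate `fraction` of a participant's stake; return the amount taken."""
        if not 0 <= fraction <= 1:
            raise ValueError("fraction must be between 0 and 1")
        taken = int(self.stakes.get(who, 0) * fraction)
        self.stakes[who] = self.stakes.get(who, 0) - taken
        return taken


registry = StakeRegistry(min_stake=100)
registry.deposit("new-executor", 150)
print(registry.can_participate("new-executor"))  # True
print(registry.slash("new-executor", 0.5))       # 75
print(registry.can_participate("new-executor"))  # False
```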

Reputation and identity

Most reputation systems rely on having some persistent identity associated with executors, clients, and the users who run them. Otherwise, a user with a poor reputation can simply shuffle off their old identity and establish a new one with a clean slate. Establishing identities in a decentralized setting is a hard, open problem that is being actively worked on.

If identities can be established, then lots of techniques can immediately help. David Aronchick highlighted that if we can establish that participants are unquestionably operating independently, the number of confirming results needed goes down sharply, as the chance that they are colluding to forge results is minimal.
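A small calculation shows why independence matters so much. Assume, for illustration only, that each executor is dishonest with probability `p_bad` and that a forged result is accepted only if every one of k independently chosen executors is dishonest, which happens with probability `p_bad ** k`. The hypothetical helper below finds the smallest k that pushes this below a target risk; if the "independent" executors are secretly one party, the exponent no longer applies and extra confirmations add little:

```python
def confirmations_needed(p_bad: float, target_risk: float) -> int:
    """Smallest k such that p_bad ** k <= target_risk, assuming executors are independent."""
    if not 0 < p_bad < 1:
        raise ValueError("p_bad must be strictly between 0 and 1")
    if target_risk <= 0:
        raise ValueError("target_risk must be positive")
    k, risk = 1, p_bad
    # Each additional independent confirmation multiplies the risk by p_bad.
    while risk > target_risk:
        k += 1
        risk *= p_bad
    return k


print(confirmations_needed(p_bad=0.5, target_risk=1e-4))  # 14
print(confirmations_needed(p_bad=0.2, target_risk=1e-4))  # 6
```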

I pointed out that many real-world domains already have well-established reputation systems. For example, a professor is recognized as a trusted expert in their field, and when they publish a result, it is given a lot of weight. If they fabricate results (or make a mistake), their reputation is at stake.

CoD platforms could simply piggyback on real-world identities as a (non-scalable) bootstrap mechanism. If a job executor is run by a recognized entity such as a university, its work can attract fewer audits, because the university has more to lose by acting improperly and the chance that it is doing so is much lower.

A more decentralized form of reputation, for when everyone is anonymous, relies on volume instead. The common analogy is online reviews, where thousands of reviews are generally a better signal than a few. These systems are still vulnerable to flooding attacks, in which bad actors flood the system with false positives that appear independent (just as with online reviews), so they would need protection against this.

Building configurable reputation and verification with Koii

Al Morris of Koii delivered a talk on how his team is solving these problems.

Al reminded us that different people have different risk profiles. In a Koii task, it is up to the task author to decide how much risk they are willing to take, to invite only nodes they trust to participate in the task, and to craft verification that mitigates that risk. This externalizes the problem of trust and verification, but introduces a new problem: how to aggregate node reputation across different types of tasks.

The other benefit of this approach is that each type of job can use the type of auditing it requires. For example, scraping web data might call for probabilistic analysis, whereas a deterministic computation over a large dataset can be audited by comparing outputs.

Al also introduced the Compute, Attention, and Reputation Protocol (CARP) which Koii is using to incentivize correct behaviour in their network. CARP is a mechanism of stakes and reputation pools that can provide observable auditing for Compute over Data systems.

CARP handles badly behaved users with “reputation slashing”: aggressively eroding a user's reputation when they are seen doing something bad.
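One simple way to get that asymmetry, sketched here with assumed parameters and not taken from the CARP specification, is to let a reputation score between 0 and 1 grow slowly towards 1 with each good result but cut it by a large fraction on each detected bad one:

```python
def update_reputation(score: float, behaved_well: bool,
                      gain: float = 0.05, slash: float = 0.5) -> float:
    """Asymmetric update: slow, saturating gains; aggressive multiplicative slashing."""
    if behaved_well:
        return score + gain * (1.0 - score)  # close 5% of the remaining gap to 1.0
    return score * (1.0 - slash)             # lose half of the current reputation


score = 0.0
for _ in range(20):
    score = update_reputation(score, behaved_well=True)
earned = score
score = update_reputation(score, behaved_well=False)

# Count how many further good results it takes to win back the lost ground.
recovery = 0
while score < earned:
    score = update_reputation(score, behaved_well=True)
    recovery += 1
print(round(earned, 3), recovery)
```

With these illustrative parameters, a single detected failure wipes out the gains of many good results, which is the deterrent effect that reputation slashing is after.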

Al also said that being able to turn the network into a self-defensive structure that will take down aggressive nodes (e.g. via denial-of-service attacks) would be valuable – essentially having the rest of the network act as an immune system against bad actors.

Reputation requires shared results and identities

There was general agreement that shared results and identities are a primitive piece of infrastructure needed to build a wide array of different reputation systems. The CoD Working Group agreed to proceed with the Octostore proposal: to build a service that makes compute results discoverable across networks. With that dataset in place, networks can develop reputation systems on top.

A number of different groups wanted to collaborate on this, so the CoD Working Group community will be taking this work forward over the next few months – come and join us on Slack if you'd like to be involved!