Implementation: Edge-Based Inference Machine Learning.

How to set up distributed ML inference with Bacalhau. (7 min)

This is the second part of ML Inference on Bacalhau. If you missed the first part, you can catch up here. Having explored the fundamental concepts and strategic advantages, we now transition from theory to practice. This section will provide you with a step-by-step walkthrough of the implementation process, starting from hardware provisioning and software installation to running ML inference with Bacalhau. Whether you're a seasoned developer or a beginner in edge computing, this practical guide is designed to help you navigate the process with ease.

What is in this Post

By the end of this post, you'll be using Bacalhau to run ML inference with YOLOv5. Here's a snapshot of the steps:

Hardware Provisioning: Set the stage with the Control Plane Node and Compute Node(s), ensuring that they are suitably equipped and communicative.

Software Installation: Gear up the hardware with Bacalhau.

Configuration: A swift setup of the Control Plane and Compute Nodes.

Data Preparation: The selection and placement of videos.

ML Inference: Unleash the power of YOLOv5 on the Bacalhau network, showcasing the platform's versatility and capability.

Step 0 - Loading Videos onto Compute Nodes for Inference

Collect the video files earmarked for inference and distribute them across your compute nodes, placing at least 1 video into the /videos directory. For a dynamic use case, consider using distinct videos on each node. Remember, this procedure emulates real-time video capture from a camera. The choice of videos, however, remains at your discretion.

You can find the sample videos we’ll use for this demo here. There are a total of 12 videos. We will place 6 videos on one compute node and 6 videos on another. We'll harness the power of YOLOv5 as our preferred inference model:

“YOLOv5, the fifth iteration of the revolutionary "You Only Look Once" object detection model, is designed to deliver high-speed, high-accuracy results in real-time.”

*Source: https://docs.ultralytics.com/yolov5/*

While YOLOv5 is our model of choice for this exercise, Bacalhau's modular architecture ensures flexibility. This means you can easily substitute another model that aligns more closely with your objectives. Bacalhau is crafted to be adaptable, positioning you with a foundational template to streamline subsequent deployments tailored to your use cases.

💡 For this guide we will use 2 compute instances, but feel free to use as many as you’d like.💡
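If you would rather script the distribution than copy files by hand, here is a minimal sketch. It only prints a round-robin plan of scp commands and copies nothing. The node names you pass in are placeholders for your own hosts, and the sketch assumes file names without spaces.

```shell
#!/bin/sh
# Sketch: print a round-robin plan for copying videos to compute nodes.
# Nothing is copied; each output line is an scp command to review and run.
# Node names are placeholders; file names are assumed to contain no spaces.

plan_video_copy() {
    src_dir=$1
    shift
    node_count=$#
    if [ "$node_count" -eq 0 ]; then
        echo "usage: plan_video_copy <src_dir> <node> [<node> ...]" >&2
        return 1
    fi
    i=0
    for video in "$src_dir"/*; do
        # Skip anything that is not a regular file (including an unmatched glob).
        [ -f "$video" ] || continue
        # Select positional parameter number (i mod node_count) + 1.
        idx=$(( i % node_count + 1 ))
        eval "node=\${$idx}"
        echo "scp $video ${node}:/videos/"
        i=$(( i + 1 ))
    done
}
```

With 12 videos and two nodes, calling plan_video_copy ./sample-videos node-a node-b prints six commands per node, matching the split used in this guide.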

Step 1 - Provisioning Hardware

Before we embark on our journey, let's ensure we have the right tools:

Control Plane Node: Your central command center. Through this, you'll orchestrate tasks across the Bacalhau cluster.

Recommended Requirements:

Number of Instances: 1

Disk: 25-100GB

CPU: 4-8vCPU

Memory: 8-16GB

Tip: You could even use the device you're on right now, provided it's not a mobile device.

Compute Node(s): Think of these as your workers. They'll be doing the heavy lifting on behalf of the control plane.

Recommended Requirements:

Number of Instances: 1-N

For our use case, it is best to have at least 2, which is what this guide uses.

Disk: 32-500GB

CPU: 1-32vCPU

Memory: 8-64GB

GPU/TPU: 1 (Optional for performance of ML Inference)

Note: Ensure the Control Plane can communicate with these nodes. Tailscale offers a handy solution for this.
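To sanity-check a machine against the lower bounds listed above, a small helper like the one below can be handy. This is only a sketch: check_min and cpu_count are hypothetical helper names, and the thresholds you pass in are whichever minimums apply to the node you are checking.

```shell
#!/bin/sh
# Sketch: compare a node's resources with a recommended lower bound.

# Succeeds (exit 0) when actual >= required, and prints a one-line report.
check_min() {
    label=$1
    actual=$2
    required=$3
    if [ "$actual" -ge "$required" ]; then
        echo "$label: $actual (ok, minimum $required)"
    else
        echo "$label: $actual (below minimum $required)"
        return 1
    fi
}

# Number of online CPUs, as reported by getconf on most Linux
# distributions and macOS.
cpu_count() {
    getconf _NPROCESSORS_ONLN
}
```

On the control plane node, for example, check_min vCPU "$(cpu_count)" 4 reports whether the machine meets the 4 vCPU lower bound. Memory and disk figures can be passed in the same way once you have read them from your system.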

Hardware check done? Let's proceed to software installation.

Step 2 - Install Bacalhau

With the hardware set, it's Bacalhau time. While there's an in-depth installation guide, here's the essence:

Access each node (ssh, putty, caress its keyboard)

Issue the installation command:

curl -sL https://get.bacalhau.org/install.sh | bash

That's it for installation. Simple, right?
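If you want to confirm the install on each node before moving on, a quick check that the binary is on your PATH goes a long way. The helper below is a sketch; require_cmd is a hypothetical name, not part of Bacalhau.

```shell
#!/bin/sh
# Sketch: confirm that a command is on PATH for the current user.

require_cmd() {
    if command -v "$1" >/dev/null 2>&1; then
        echo "found: $1"
    else
        echo "missing: $1" >&2
        return 1
    fi
}
```

For example, require_cmd bacalhau && bacalhau version prints the installed version only when the binary is reachable. The same helper can be reused for docker on the compute nodes in Step 4.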

Step 3 - Configure Control Plane Instance

Time to get the Bacalhau control plane (also known as the Requester) running. For those craving details, here's the comprehensive guide. For a swift setup:

Launch the Bacalhau Control Plane Process:

bacalhau serve --node-type=requester

To use this requester node from the client, run the following commands in your shell:

export BACALHAU_NODE_IPFS_SWARMADDRESSES=/ip4/127.0.0.1/tcp/55561/p2p/QmSX2UunnZ4ethswdzLjCD6VpHwrfrTSLHS3MVQKf1DUNe

export BACALHAU_NODE_CLIENTAPI_HOST=0.0.0.0

export BACALHAU_NODE_CLIENTAPI_PORT=1234

export BACALHAU_NODE_LIBP2P_PEERCONNECT=/ip4/100.99.22.51/tcp/1235/p2p/QmYKJnwBJDn77RUrXw3cVj8oAtYQLuHncTybkpS94aC8i1

To interact with this network:

Locally: Export the commands as provided.

Remotely: Adjust accordingly, e.g.:

export BACALHAU_NODE_CLIENTAPI_HOST=<public_ip_of_requester_instance>

Step 4 - Configure Compute Instances

Next we’ll get the Bacalhau compute nodes running. Again, for details here's the comprehensive guide. For a quick setup:

Install Docker on each compute node, ensuring the Docker daemon is accessible to the user who will run Bacalhau in the commands that follow.

Optional: fetch the docker image ultralytics/yolov5:v7.0 for faster subsequent steps. We will be using this model for our inference.

To fetch the image:

docker pull ultralytics/yolov5:v7.0

Create a directory on each compute node for video files. Files within this directory will simulate captured videos from security cameras; we will be performing ML inference over their contents. In this example we will use the directory /videos.

Launch the Bacalhau Compute Node Process:

bacalhau serve --node-type=compute --allow-listed-local-paths=/videos --peer=<multiaddress_of_requester_node>

Here is a brief explanation of each of these flags. Comprehensive descriptions may be found by running bacalhau serve --help.

--node-type=compute instructs Bacalhau to run as a compute node

--allow-listed-local-paths=/videos allows the node to access the contents of /videos when executing workloads

--peer=<multiaddress_of_requester_node> instructs the compute node to connect to the requester node we set up in the previous step. As an example, borrowing from the previous step, this would be --peer=/ip4/100.99.22.51/tcp/1235, but you will need to provide a value here specific to your deployment.
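When you launch several compute nodes, it can help to assemble the --peer value from its parts rather than paste it each time. The sketch below assumes the multiaddress layout shown in the Step 3 output (/ip4/<ip>/tcp/<port>, optionally followed by /p2p/<peer id>). Both function names are hypothetical, and the launch command is only printed, not run.

```shell
#!/bin/sh
# Sketch: assemble the value for --peer from its parts.
# Layout assumed from the requester output in Step 3:
#   /ip4/<ip>/tcp/<port>[/p2p/<peer id>]

peer_multiaddr() {
    ip=${1:-}
    port=${2:-}
    peer_id=${3:-}
    if [ -z "$ip" ] || [ -z "$port" ]; then
        echo "usage: peer_multiaddr <ip> <port> [<peer_id>]" >&2
        return 1
    fi
    addr="/ip4/$ip/tcp/$port"
    # Append the peer ID only when one was supplied.
    if [ -n "$peer_id" ]; then
        addr="$addr/p2p/$peer_id"
    fi
    echo "$addr"
}

# Print (do not run) the compute-node launch command for a given requester.
compute_serve_command() {
    peer=$(peer_multiaddr "${1:-}" "${2:-}" "${3:-}") || return 1
    echo "bacalhau serve --node-type=compute --allow-listed-local-paths=/videos --peer=$peer"
}
```

Calling compute_serve_command 100.99.22.51 1235 prints the same launch command as above with the example address filled in. Swap in the IP, port, and peer ID that your own requester printed.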

Step 5 - Running Inference on Videos with YOLOv5

To process videos with ML inference, we'll guide the Control Plane (or the Requester) to instruct each compute node to work on video files in their local directories.

Step 5.1 - Bacalhau ML Inference Command:

bacalhau docker run --target=all \
--input file:///video_dir:/videos \
docker.io/bacalhauproject/yolov5-7.0:v1.0

Diving deep into the command, here's a comprehensive breakdown of each component:

Step 5.2 - Bacalhau Arguments:

bacalhau docker run --target=all: This orders Bacalhau to execute a Docker job, targeting all nodes the Control Plane has established a connection with.

--input file:///video_dir:/videos: This maps the directory named by the URI on the compute node to the container's /videos path. (Refresher: We earmarked this directory in our earlier segment on "Configure Compute Instances")
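To make the two halves of an --input value concrete, here is a small sketch that splits one apart. It assumes, as the description above suggests, that the container path is whatever follows the last colon. The function names are hypothetical, and this is not how Bacalhau itself parses the flag.

```shell
#!/bin/sh
# Sketch: split an --input value into its source URI and container path.
# Assumes the container path is whatever follows the last colon.

input_source() {
    # Remove the shortest suffix that starts at a colon.
    echo "${1%:*}"
}

input_target() {
    # Remove the longest prefix that ends at a colon.
    echo "${1##*:}"
}
```

For the value used in this guide, input_source file:///video_dir:/videos prints file:///video_dir and input_target file:///video_dir:/videos prints /videos.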

Step 5.3 - YOLOv5 Arguments:

Inside the Dockerfile, we pass a set of default arguments to the YOLOv5 detection script; you can view the Dockerfile we are using at this link. For example, if you would prefer to use the XL model from YOLO, you can modify the above command as shown below, overriding the default model used in the Dockerfile:

bacalhau docker run --target=all \
--input file:///video_dir:/videos \
--input https://github.com/ultralytics/yolov5/releases/download/v6.2/yolov5x.pt:/model \
docker.io/bacalhauproject/yolov5-7.0:v1.1 \
--env=WEIGHTS_PATH=/model/yolov5x.pt

Once this command is executed, each compute node will start processing its local videos.
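If you expect to switch between weight files, the override can be parameterized. The sketch below prints the command on a single line rather than running it. It assumes other weight files sit under the same v6.2 release path as yolov5x.pt and follow the same naming pattern, and both function names are hypothetical.

```shell
#!/bin/sh
# Sketch: print the inference command for a chosen YOLOv5 weights file.
# Assumes weights are published at the same release path as yolov5x.pt.

yolo_weights_url() {
    echo "https://github.com/ultralytics/yolov5/releases/download/v6.2/$1.pt"
}

# Print (do not run) the override command; defaults to the XL model.
inference_command() {
    model=${1:-yolov5x}
    weights_url=$(yolo_weights_url "$model")
    printf '%s %s %s %s %s\n' \
        "bacalhau docker run --target=all" \
        "--input file:///video_dir:/videos" \
        "--input ${weights_url}:/model" \
        "docker.io/bacalhauproject/yolov5-7.0:v1.1" \
        "--env=WEIGHTS_PATH=/model/${model}.pt"
}
```

Running inference_command with no argument reproduces the XL override shown above. Pass a different model name only after confirming that the matching weights file exists at that URL.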

Step 6 - Interpreting the Results:

Upon issuing the above command, your Bacalhau network will begin processing the ML inference job. You will be presented with terminal output like the one below (although your job ID will be different):

Job successfully submitted. Job ID: 4b1422ff-7fc3-4518-8925-c2e5d4902a04

Checking job status... (Enter Ctrl+C to exit at any time, your job will continue running):

Communicating with the network ................ done ✅ 0.2s

Creating job for submission ................ 3.2s

Your prompt will stay active until the job finishes. The completion time varies based on the video size relative to the resources of your compute nodes.
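If you are scripting this step, the job ID can be pulled out of the submission message instead of copied by hand. The filter below is a sketch that assumes the message format shown above ("Job ID: " followed by the ID); extract_job_id is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: read text on stdin and print the first job ID found after "Job ID: ".
# Assumes the ID is made up of lowercase hex digits and hyphens, as in the
# sample output above.

extract_job_id() {
    sed -n 's/.*Job ID: \([0-9a-f-]*\).*/\1/p' | head -n 1
}
```

For example, saving the submission output to a file and running extract_job_id < submit.log prints just the ID, ready to hand to bacalhau get.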

After your job is done, fetch the inference results using the bacalhau get command. Remember to replace the job ID in the example below with your own. The command fetches results from all nodes, organizing them into individual directories for each compute node that took part in the ML inference.

$ bacalhau get --raw 4b1422ff-7fc3-4518-8925-c2e5d4902a04

Fetching results of job '4b1422ff-7fc3-4518-8925-c2e5d4902a04'...

19:26:06.521 | INF pkg/downloader/download.go:76 > Downloading 2 results to: /Users/bacalhau/data.

Results for job '4b1422ff-7fc3-4518-8925-c2e5d4902a04' have been written to...

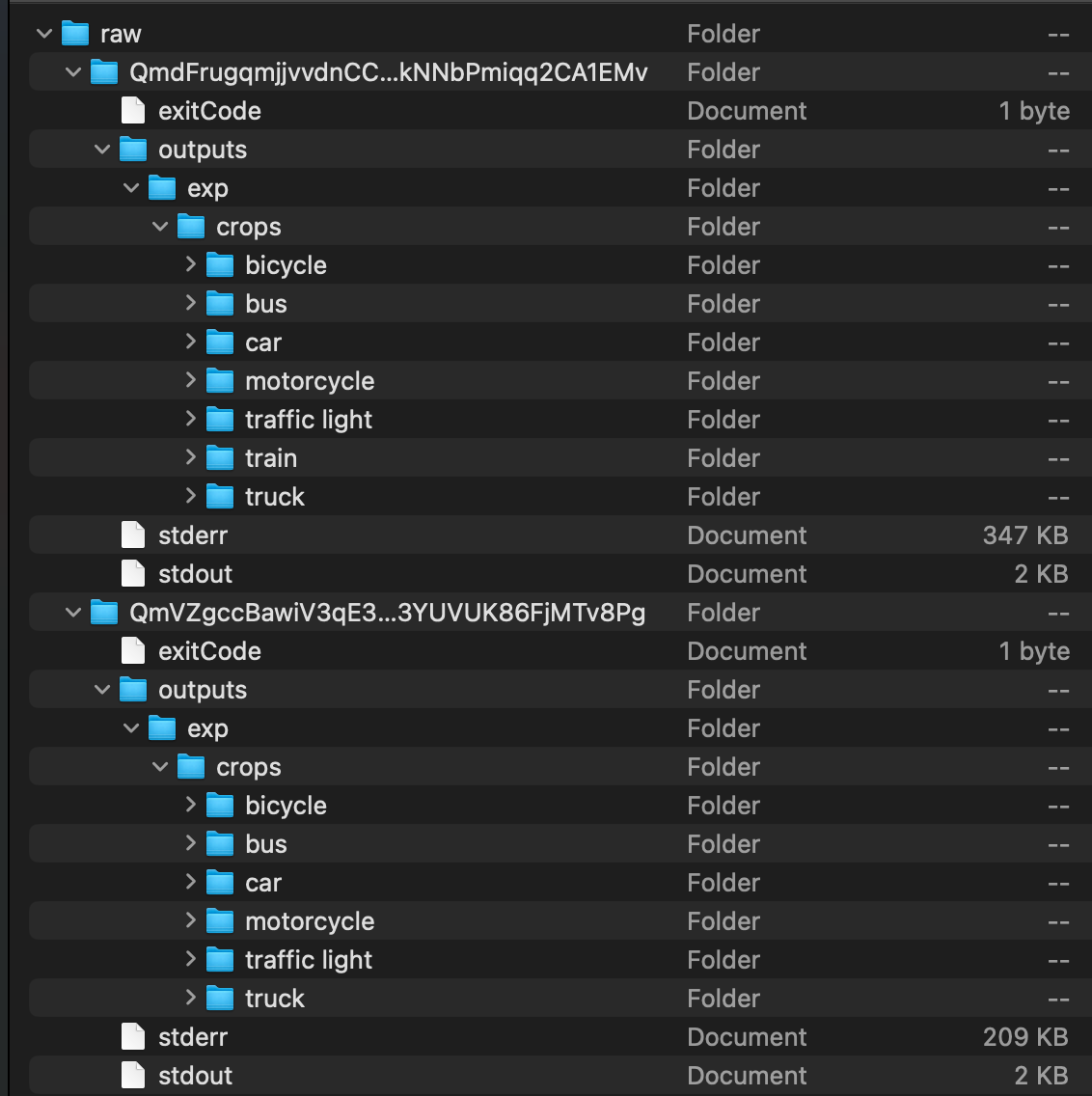

/Users/bacalhau/data/job-4b1422ffHere's a glimpse of the downloaded job's structure:

Our Bacalhau deployment for this task utilized 2 compute nodes, resulting in two distinct directories, one for each node's outputs. These directories are labeled with a Content Identifier (CID), a unique hash of their content. To view the ML Inference results showcased in this example, visit this link.
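To see which per-node directories came back without opening a file browser, a short loop is enough. This sketch assumes only that the per-node directories sit directly under the downloaded job directory. It makes no assumption about what is inside them, and list_result_dirs is a hypothetical helper name.

```shell
#!/bin/sh
# Sketch: print the name of each immediate subdirectory of a job directory.

list_result_dirs() {
    job_dir=$1
    for entry in "$job_dir"/*/; do
        # Skip the literal pattern when nothing matches.
        [ -d "$entry" ] || continue
        # Strip the trailing slash, then everything up to the last slash.
        name=${entry%/}
        echo "${name##*/}"
    done
}
```

Running list_result_dirs /Users/bacalhau/data/job-4b1422ff on the example above should print one line per compute node that took part.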

Conclusion

In the digital transformation era, data's importance is unmatched, but its growth, especially at the edge, is outpacing traditional centralized processing. This leads to inefficiencies, security concerns, and higher costs.

The key issue is how and where data is processed. We're shifting from cloud-centric to distributed, edge-focused strategies, addressing data transit, security, and compliance challenges. This enables real-time data interaction via ML models right at the source.

Bacalhau is at the forefront of this shift. By decentralizing ML inference, we reduce costs and boost efficiency, resulting in faster, actionable insights and more reliable systems. This strategy combines innovative software with modern hardware solutions to meet today's data challenges.

The intersection of edge computing and machine learning is creating new opportunities for instant data insights. Our guide demystifies these areas, empowering organizations to make informed, timely decisions where it matters most: right at the data's point of origin.

How to Get Involved

We're looking for help in several areas. If you're interested in helping, there are several ways to contribute. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!