Freeing Trapped Data with Edge ML (Demo)


In today’s post, we’ll explore how Bacalhau can simplify deploying and interacting with Edge ML infrastructure in just a few minutes. To show how easy it can be, we’ve created a short video demo that walks you through the process step by step.

Building machine learning models has become more accessible than ever. However, deploying these models, especially outside of a data center, remains a significant challenge.

Devices at the network’s edge now possess incredible capabilities to gather data, execute models, and generate insights. Yet, this data often remains trapped on the devices where it is created. Challenges such as unreliable networks, unreasonable bandwidth fees, and the complexities of managing diverse devices have made it difficult to find a one-stop solution.

With Bacalhau, these obstacles quickly become far more manageable. When combined with Edge ML, Bacalhau allows you to manage and utilize the full potential of edge devices as if they were right next to you.

To prove it, we’ve put together a short video that showcases just how easy it is to deploy the latest and greatest AI at the edge.

The Video

In this short video, we will guide you through the process of creating a Bacalhau job that deploys a machine learning model to each instance across your infrastructure. This job uses a Docker container with YOLOv5, a widely used open-source family of object detection models for images and video, to demonstrate the power and simplicity of this approach.

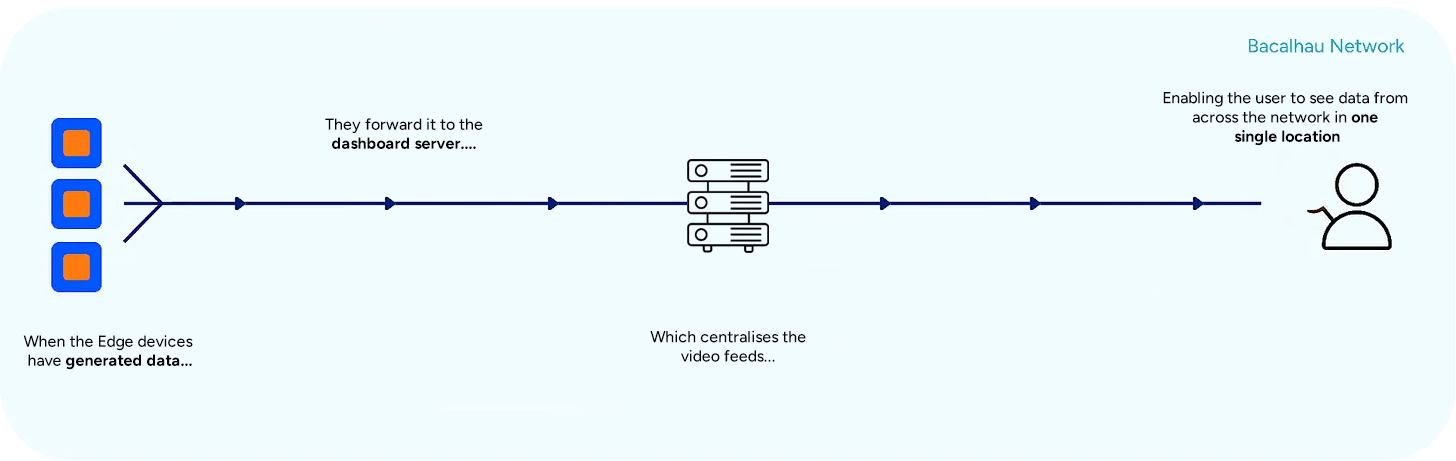

As the container starts, it will process the video source through YOLOv5. You’ll see classifications and coordinates appear on our dashboard, streamed directly from each edge instance. This setup allows you to monitor your entire Edge ML infrastructure and explore the data from a single, unified control plane.

How does it work?

This demo showcases a distributed Edge ML setup with several moving parts:

- Private Bacalhau Cluster
- Multiple Compute Nodes
- A Single Requester Node
- Docker Image with Application Code and YOLOv5
- Cloud Application Dashboard

At the heart of the demo is a private Bacalhau cluster, comprising multiple compute nodes connected to a single requester node. Bacalhau orchestrates the deployment and execution of machine learning jobs across these edge devices seamlessly.

Application Code and Containerization

The application code and ML models are built into a container image. When the Bacalhau job is scheduled, the designated compute node first pulls the Docker image containing all the necessary code. Once the job starts, it processes a video stream that is mapped from the compute node into the container’s storage volume as an `InputSource`. This allows the job to operate on edge data securely, without accessing the underlying filesystem beyond the specified directory.
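To make the mapping concrete, here is a minimal sketch of what such a job description might look like, built as a plain Python dictionary and printed as JSON. The field names follow our reading of the Bacalhau job specification and may differ between versions, so check them against the documentation for your release. The image name, host path, mount target, job type, and the `DASHBOARD_IP` variable name are all hypothetical values chosen for illustration.

```python
import json


def build_edge_ml_job(image, host_video_dir, dashboard_ip):
    """Return a Bacalhau-style job description as a plain dict.

    All concrete values are illustrative; field names are an assumption
    based on the published job specification and may vary by version.
    """
    return {
        "Name": "edge-ml-yolov5-demo",  # hypothetical job name
        "Type": "daemon",  # assumption: a job type that runs on every matching node
        "Tasks": [
            {
                "Name": "inference",
                "Engine": {
                    "Type": "docker",
                    "Params": {
                        "Image": image,
                        # The dashboard address is handed to the container
                        # as an environment variable (name is hypothetical).
                        "EnvironmentVariables": [f"DASHBOARD_IP={dashboard_ip}"],
                    },
                },
                "InputSources": [
                    {
                        # Where the directory appears inside the container.
                        "Target": "/videos",
                        "Source": {
                            "Type": "localDirectory",
                            "Params": {
                                # Only this host directory is exposed to the job.
                                "SourcePath": host_video_dir,
                                "ReadWrite": False,
                            },
                        },
                    }
                ],
            }
        ],
    }


spec = build_edge_ml_job("example/yolov5-edge:latest", "/opt/videos", "203.0.113.10")
print(json.dumps(spec, indent=2))
```

Keeping the mount read-only and limited to a single directory reflects the point above: the container sees the video data and nothing else on the host.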

Job Execution

Once the job starts, the video stream is analyzed frame by frame with YOLOv5 in near real time, and the resulting inferences are stored for later use. This keeps inference close to the media assets and improves the responsiveness of our application.
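The frame-by-frame loop can be sketched roughly as follows. This is not the demo's actual code: the detector is passed in as a plain callable so the example stays self-contained, and `analyze_stream`, `stub_detector`, and the record layout are hypothetical names. In a real job, the callable would wrap a YOLOv5 model and the frames would come from a video decoder.

```python
def analyze_stream(frames, detect, fps):
    """Yield one inference record per frame, tagged with its time index.

    frames: any iterable of frame objects
    detect: callable taking a frame and returning a list of detections
    fps:    frames per second of the source, used to compute timestamps
    """
    if fps <= 0:
        raise ValueError("fps must be positive")
    for index, frame in enumerate(frames):
        yield {
            "frame": index,
            "time_s": index / fps,  # position of this frame in the video
            "detections": detect(frame),
        }


def stub_detector(frame):
    """Stand-in for a real model: returns one fixed, made-up detection."""
    return [{"label": "person", "confidence": 0.9, "box": [10, 20, 110, 220]}]


# Store the results so they can be served later.
records = list(analyze_stream(["frame0", "frame1", "frame2"], stub_detector, fps=2.0))
for record in records:
    print(record["frame"], record["time_s"], len(record["detections"]))
```

Tagging every record with a time index is what later lets a viewer line the stored inferences up with the video.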

Requester Node Functionality

In this demo, the requester node serves as the central interface for creating, distributing, and observing the Edge ML jobs that we want to dispatch to our devices. If a job’s requirements exceed the capabilities of certain compute nodes, the requester node schedules it only on hardware that can meet those requirements, which keeps hardware utilization efficient.
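The idea of matching a job to capable hardware can be illustrated with a toy filter. This is a simplified sketch and not Bacalhau's scheduler: the `Resources` and `Node` types, the node names, and the resource figures are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Resources:
    cpu: float
    memory_gb: float
    gpu: int = 0


@dataclass(frozen=True)
class Node:
    name: str
    available: Resources


def fits(required, available):
    """True when every required resource is covered by what is available."""
    return (
        available.cpu >= required.cpu
        and available.memory_gb >= required.memory_gb
        and available.gpu >= required.gpu
    )


def select_nodes(nodes, required):
    """Keep only the nodes that can satisfy the job's requirements."""
    return [node for node in nodes if fits(required, node.available)]


nodes = [
    Node("edge-camera-1", Resources(cpu=2, memory_gb=2)),
    Node("edge-gateway-1", Resources(cpu=4, memory_gb=8, gpu=1)),
    Node("edge-gateway-2", Resources(cpu=8, memory_gb=16, gpu=1)),
]
job_needs = Resources(cpu=2, memory_gb=4, gpu=1)

for node in select_nodes(nodes, job_needs):
    print(node.name)
```

Here the small camera node is skipped because it has neither enough memory nor a GPU, while both gateways qualify.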

Cloud Application Dashboard

Our demo features a cloud application dashboard deployed with a fixed IP address. When the Bacalhau job is created, it receives the dashboard’s IP address as an environment variable, allowing it to forward inferences correctly. When a user opens the dashboard, it requests inferences over WebSockets from each compute node running the job. It then synchronizes with the current time index of the video being viewed and overlays the bounding boxes and classifications of the inferences onto the video.
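One way to think about the synchronization step is as a lookup: given the current playback position, find the most recent inference at or before it. The sketch below assumes the inferences arrive as records sorted by a `time_s` field; the function name and record layout are hypothetical and the demo's dashboard may work differently.

```python
from bisect import bisect_right


def inference_at(records, time_s):
    """Return the latest record whose time_s is <= the playback position.

    records must be sorted by "time_s" in ascending order.
    Returns None when playback is before the first record.
    """
    times = [record["time_s"] for record in records]
    position = bisect_right(times, time_s)
    return records[position - 1] if position else None


# Made-up inferences for three moments in a video.
timeline = [
    {"time_s": 0.0, "detections": [{"label": "car", "box": [5, 5, 50, 40]}]},
    {"time_s": 0.5, "detections": [{"label": "person", "box": [10, 20, 110, 220]}]},
    {"time_s": 1.0, "detections": []},
]

current = inference_at(timeline, 0.7)
if current is not None:
    for detection in current["detections"]:
        print(detection["label"], detection["box"])
```

A binary search keeps the lookup fast even for long videos, which matters when the overlay is refreshed on every playback tick.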

Conclusion

Implementing Edge ML with Bacalhau is straightforward. By leveraging industry-standard technologies like Docker, any developer can create distributed applications that make fuller use of their infrastructure. Bacalhau enables the deployment of complex Edge ML setups without the need for custom toolchains or specialized applications, making advanced edge computing accessible and efficient for everyone.

How to Get Involved

We welcome your involvement in Bacalhau. There are many ways to contribute, and we’d love to hear from you. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open-source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!