Distributed ML with the Bacalhau Bluesky Bot

Using Machine Learning models has never been simpler!

A little while ago we released the Bacalhau Bluesky Bot (Bluesky Profile). We thought it would be a fun way to show people how simple it can be to integrate existing applications and services with a Bacalhau network, as well as give people the opportunity to fire off the odd job or two.

But the Bacalhau Bot can do way more than just run code and get the results back to people - we thought it would be a neat idea to show off how effortlessly Bacalhau can be used to run ML models wherever compute is available, and so that’s what we’ve done!

What does it do?

With the first version of the Bacalhau Bluesky Bot, you could run jobs by posting commands to it, just as you would with the Bacalhau CLI. For example:

@jobs.bacalhau.org job run <URL_TO_CONFIG.YAML_FILE>

We built it this way so that people familiar with the CLI would know exactly what they could do with the Bot, and so that those who’d never used the CLI could get a sense of how to work with Bacalhau without spinning up a whole network.

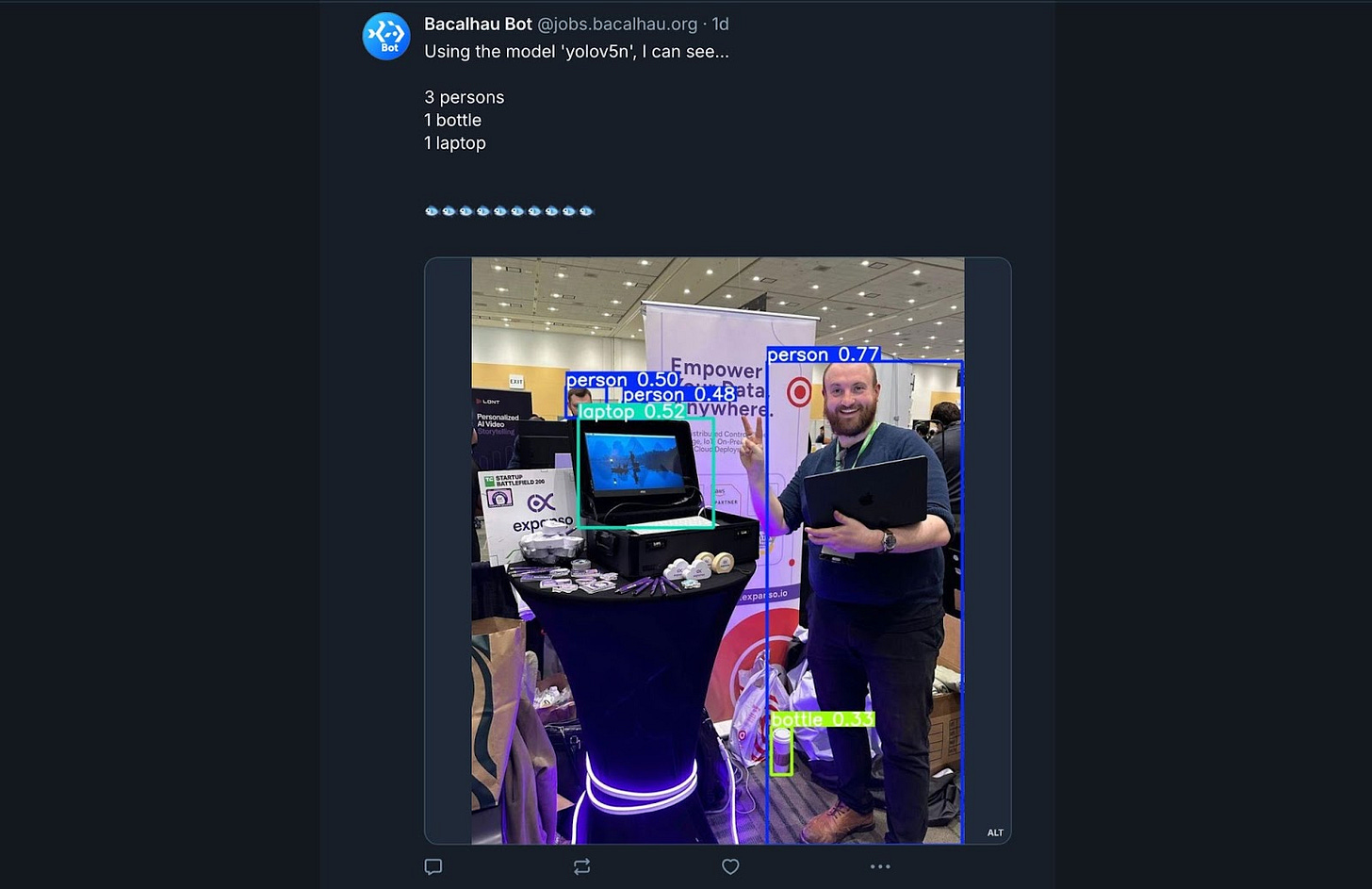

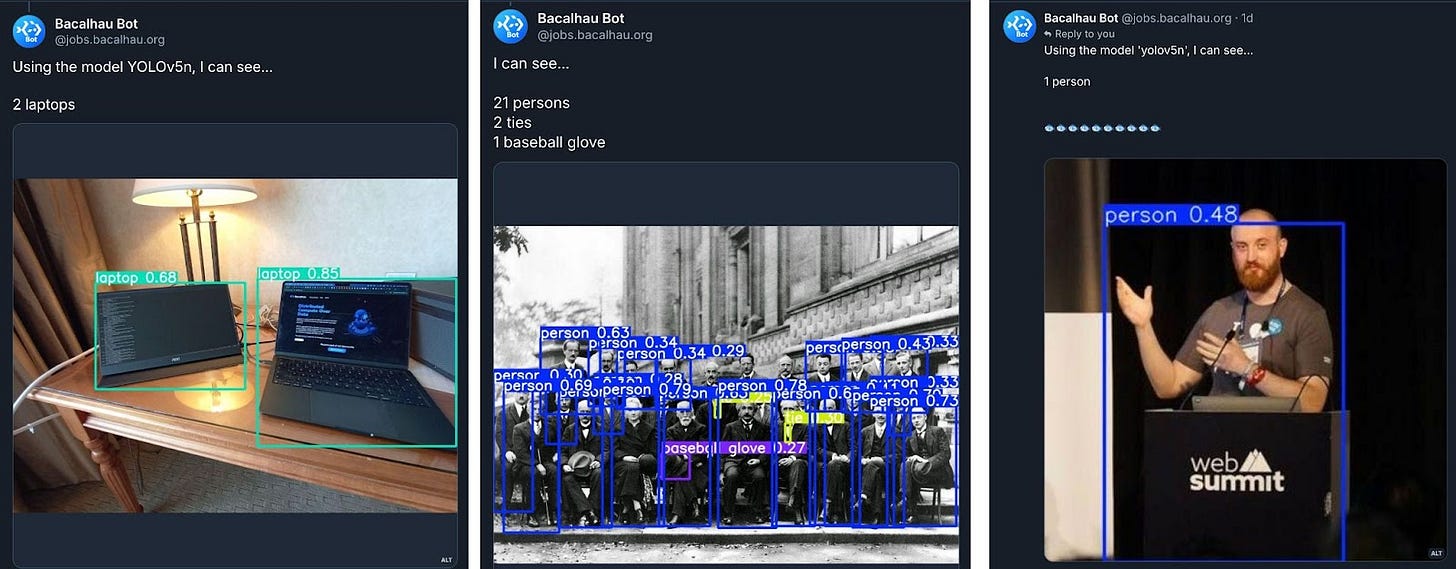

Now, we’re deviating a little bit from the CLI interface to show off more specialised use cases. As of today, you can classify any kind of image you like by attaching it to a post addressed to the Bacalhau Bluesky Bot. Bacalhau will run YOLO over it, detect what’s in there, and post the results back to you - all in around 30 seconds!

How does it work?

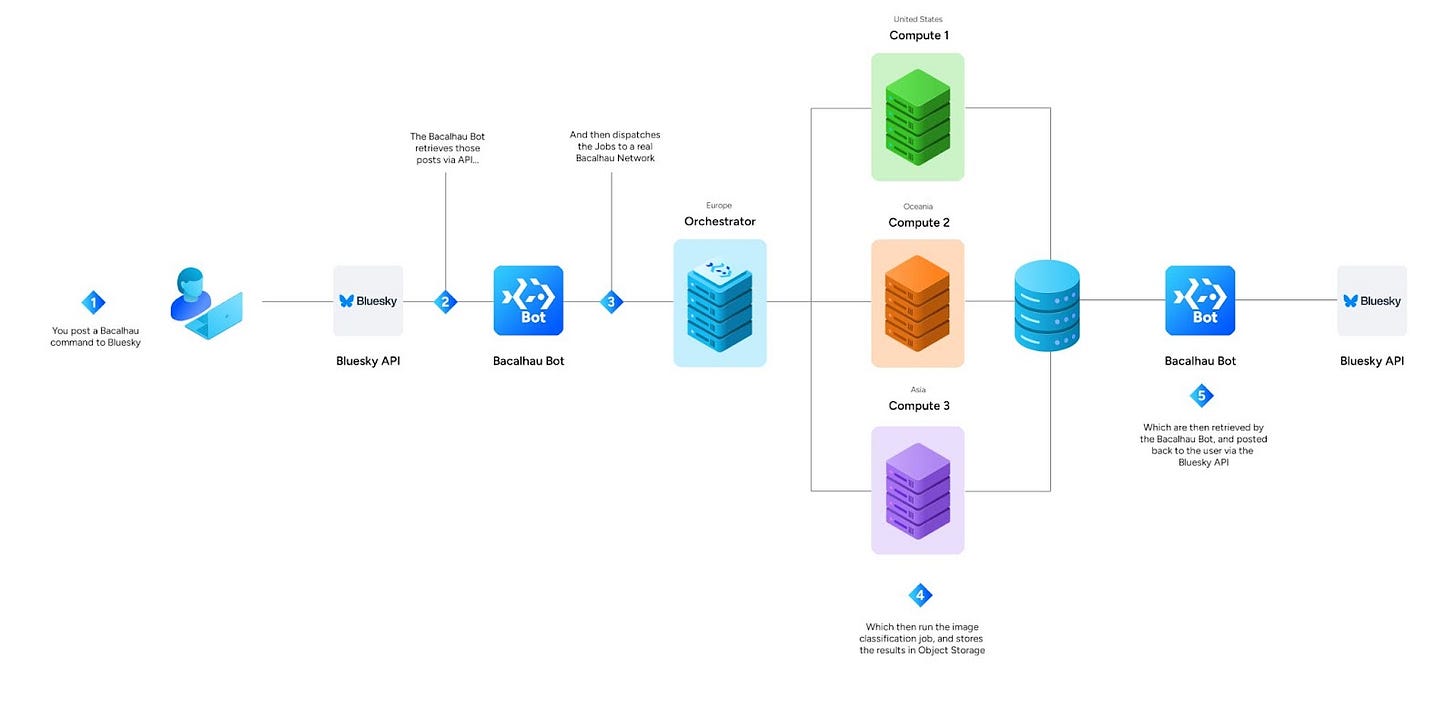

Not much has changed in how the Bacalhau Bluesky Bot handles your request; there’s just a little bit of extra code to handle a classification job and get you the results once it has executed.

When you post an image to the Bacalhau bot with:

@jobs.bacalhau.org classify

The Bacalhau Bot will read your post, get the URL for the image you’ve attached, and dispatch a Job to the Bacalhau Bot Network. This Bacalhau Network is no different from a regular Bacalhau Network; the only distinction is that it can only be interacted with through the Bacalhau Bluesky Bot.
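As a rough illustration of that first step, here is a minimal Python sketch of how a bot might recognise a classify request and derive an image URL from the post. It is not the Bot’s actual code: the function name and the shape of the `post` dictionary are assumptions, and the CDN URL pattern is an assumption about how Bluesky serves full-size images.

```python
import re
from typing import Optional

MENTION = "@jobs.bacalhau.org"  # the Bot's handle, as used in this post


def parse_classify_request(post: dict) -> Optional[str]:
    """Return an image URL for a classify request, or None if the post is not one.

    `post` is assumed to look like a Bluesky post view: an `author` with a
    DID, and a `record` holding the text and an images embed.
    """
    record = post.get("record", {})
    text = record.get("text", "")

    # Only react to "<mention> classify".
    if not re.search(re.escape(MENTION) + r"\s+classify\b", text):
        return None

    embed = record.get("embed", {})
    if embed.get("$type") != "app.bsky.embed.images":
        return None
    images = embed.get("images", [])
    if not images:
        return None

    # The blob reference (a CID) identifies the uploaded image.
    cid = images[0]["image"]["ref"]["$link"]
    did = post["author"]["did"]

    # Assumed CDN URL pattern; check the Bluesky docs before relying on it.
    return f"https://cdn.bsky.app/img/feed_fullsize/plain/{did}/{cid}@jpeg"
```

Returning `None` for anything that is not a classify request with an image lets the caller fall through to other commands, such as `job run`.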

The Bot loads up a template job.yaml file which specifies the container we want to use to run our inference job, and loads in some variables for the job to retrieve your image and run the classification over it.

Once it’s loaded up the YAML, it converts it to JSON and posts it to the Bacalhau orchestrator via API to set the job off and gets a Job ID in return.
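The template-and-submit step could be sketched in Python along these lines. To stay dependency-free, the template is shown as the dictionary a YAML parser would produce rather than as a file on disk. The container image name, the `IMAGE_URL` variable, the job field names, and the endpoint path are all assumptions for illustration; consult the Bacalhau job specification and API reference for the exact shapes your version expects.

```python
import copy
import json
import urllib.request

# Hypothetical template, shown as the dict a YAML parser would return.
JOB_TEMPLATE = {
    "Name": "classify-image",
    "Type": "batch",
    "Count": 1,
    "Tasks": [
        {
            "Name": "main",
            "Engine": {
                "Type": "docker",
                "Params": {
                    "Image": "example/yolo-classifier:latest",  # placeholder
                    "EnvironmentVariables": [],
                },
            },
        }
    ],
}


def build_job(image_url: str) -> dict:
    """Copy the template and inject the image URL as an environment variable."""
    job = copy.deepcopy(JOB_TEMPLATE)  # never mutate the shared template
    params = job["Tasks"][0]["Engine"]["Params"]
    params["EnvironmentVariables"].append(f"IMAGE_URL={image_url}")
    return job


def build_submit_request(orchestrator: str, job: dict) -> urllib.request.Request:
    """Build (but do not send) the HTTP request that submits the job as JSON."""
    body = json.dumps({"Job": job}).encode("utf-8")
    return urllib.request.Request(
        url=f"{orchestrator}/api/v1/orchestrator/jobs",  # assumed endpoint path
        data=body,
        method="PUT",
        headers={"Content-Type": "application/json"},
    )
```

Passing the request to `urllib.request.urlopen` would send it; the response body is where a job ID would be read from.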

The orchestrator then looks at the network and schedules the job to run on any available compute node.

The compute node that gets the job will use the environment variables we set with the job.yaml to grab the image you posted to Bluesky from the Bluesky CDN, and then use YOLO to classify all of the objects in the image.

This process takes about 10 seconds - even on a machine that doesn’t have a GPU. It’s a real testament to how far ML models have come in the last few years to run advanced inference on devices that might have struggled in the not-too-recent past.

Once the image has been classified, our code draws the bounding boxes for the detected objects onto a new image file and sends it to an object storage bucket, along with some metadata, for later retrieval by the Bot. It then logs a UUID that the Bot can use to grab those assets.
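To make the hand-off concrete, here is a small Python sketch of how a job might bundle its results under a UUID. The detection format, the object key layout, and the function name are hypothetical; the YOLO inference, the drawing of boxes, and the upload itself are left out.

```python
import json
import uuid
from collections import Counter


def build_result_bundle(detections: list) -> dict:
    """Group a job's outputs under a fresh UUID.

    Each detection is assumed to look like:
    {"label": "dog", "confidence": 0.91, "box": [x1, y1, x2, y2]}
    """
    result_id = str(uuid.uuid4())
    counts = Counter(d["label"] for d in detections)
    metadata = {
        "id": result_id,
        "counts": dict(counts),  # e.g. {"dog": 2, "cat": 1}
        "detections": detections,
    }
    return {
        "id": result_id,
        # Hypothetical key layout: everything for one result shares a prefix.
        "image_key": f"{result_id}/annotated.jpg",
        "metadata_key": f"{result_id}/metadata.json",
        "metadata_json": json.dumps(metadata),
    }
```

After uploading the annotated image and metadata under those keys, the job would print `bundle["id"]` to stdout, so that the UUID appears in the job’s logs.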

While all of this is going on, the Bacalhau Bluesky Bot keeps track of the job and, after 30 seconds or so, uses the returned UUID to grab the newly classified image and metadata from the shared object storage bucket.
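One way to sketch that tracking step in Python is a simple polling loop. This is an illustration rather than the Bot’s real logic: `fetch_job` is a hypothetical callable that returns the job’s state and captured stdout, and the state names are assumptions.

```python
import re
import time

# Matches a canonical lowercase UUID anywhere in the job's output.
UUID_RE = re.compile(
    r"[0-9a-f]{8}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{4}-[0-9a-f]{12}"
)


def wait_for_result_id(fetch_job, job_id, timeout=60.0, interval=5.0,
                       sleep=time.sleep):
    """Poll until the job finishes, then pull the UUID out of its stdout.

    `fetch_job(job_id)` is assumed to return {"state": str, "stdout": str}.
    Returns the UUID string, or None on failure or timeout.
    """
    deadline = time.monotonic() + timeout
    while True:
        job = fetch_job(job_id)
        if job["state"] == "Completed":
            match = UUID_RE.search(job.get("stdout", ""))
            return match.group(0) if match else None
        if job["state"] == "Failed":
            return None
        if time.monotonic() >= deadline:
            return None  # give up rather than wait forever
        sleep(interval)
```

Injecting `fetch_job` and `sleep` keeps the loop easy to test without a live network.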

Once it’s got that, all the Bot has to do is use the Bluesky API to reply to the original post with the metadata and annotated image, and Bob’s your uncle! You get a nice distributed compute-powered ML response in your Bluesky notifications!
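For the final step, a reply on Bluesky is a post record that carries references to the thread’s root and parent posts, plus an embed for the image. The Python sketch below only builds such a record; the function name and arguments are hypothetical, and it assumes the annotated image has already been uploaded so that `image_blob` is the blob object the upload returned.

```python
from datetime import datetime, timezone


def build_reply_record(text, parent, root, image_blob, alt_text):
    """Build an app.bsky.feed.post record that replies with an image.

    `parent` and `root` are {"uri": ..., "cid": ...} references to the post
    being answered and to the first post in the thread.
    """
    created_at = datetime.now(timezone.utc).isoformat().replace("+00:00", "Z")
    return {
        "$type": "app.bsky.feed.post",
        "text": text,
        "createdAt": created_at,
        "reply": {
            "root": {"uri": root["uri"], "cid": root["cid"]},
            "parent": {"uri": parent["uri"], "cid": parent["cid"]},
        },
        "embed": {
            "$type": "app.bsky.embed.images",
            "images": [{"alt": alt_text, "image": image_blob}],
        },
    }
```

When the original post is itself the start of a thread, `parent` and `root` are the same reference.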

Give it a go!

These changes are live right now! So go ahead and give them a whirl! All you have to do is head over to Bluesky with your account, and post…

@jobs.bacalhau.org classify

…with an attached image and we’ll get you your classifications in less than a minute!

Conclusion

We built the Bacalhau Bluesky Bot to show people how easy it can be to integrate products, apps, and platforms with Bacalhau. If you have an idea that you think would be cool to hook some distributed computing into, let us know! We always love to hear fun and innovative ideas that excite and drive distributed computing forward!

Get Involved!

We welcome your involvement in Bacalhau. There are many ways to contribute, and we’d love to hear from you. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open-source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!
