Deploying Websites Everywhere(!) With Bacalhau

(05:12) Deploying websites across multiple servers doesn't need to be difficult. With Bacalhau, all it takes is a single CLI command.

In a few years, the World Wide Web will be 35 years old. I think most people would agree that few technologies have endured that long and had the world-changing impact the Web has had on, well, everything.

There are now over a billion websites online, each reachable in seconds. With that kind of diversity come countless ways to deploy and manage assets on a web server, and as web apps and infrastructure grow more complex, it becomes harder to ensure everything runs smoothly across your systems.

Today, I’m going to show you how to use Bacalhau to deploy static assets to a group of nginx servers at the same time, without needing to know where those servers are, SSH into them, or deal with the time-consuming work of managing multiple servers across the globe.

The Setup

For this demo, we’re working with a few components:

A server running a Bacalhau requester node

Three servers, each running:

Bacalhau as a compute node

An nginx web server configured to serve static content

Our Bacalhau Network

In this example, we’ve set up a few servers that join a private Bacalhau network. This gives us a single point of access through which Bacalhau can distribute content to every server in the network.

For instructions on setting up your own Bacalhau network, either on existing infrastructure or from scratch, check out our docs!

nginx

To begin, each of our web servers is serving the default nginx page. It’s good to know they’re online, but it’s not very personalized. We’re going to create a Bacalhau job that reads an HTML file from our local system, packages it up, and uses Bacalhau’s containerless execution capabilities to write that HTML to each compute node in our network.

Once the job is finished, each web server will serve that HTML file. No logging in, no managing assets, just a single CLI command.

The Bacalhau Job

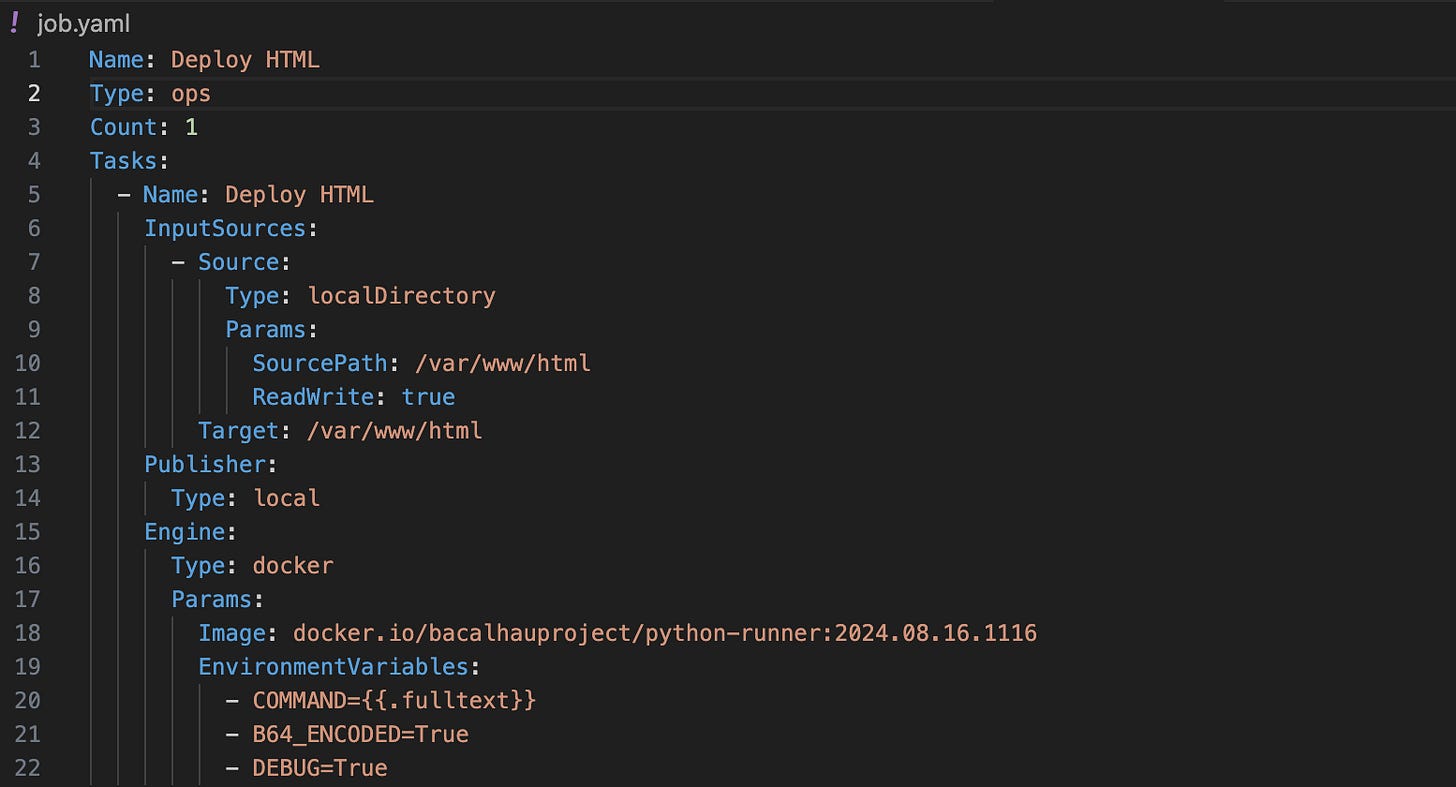

First up, we put together the job file that we’ll send to each compute node in our Bacalhau network.

There are a couple of important things to note about this job file:

First, note how we use the localDirectory InputSource to let our Bacalhau job read and write files in one specific directory. One of Bacalhau’s best features is its ability to read and write arbitrary files on a remote system while keeping the rest of that system secure: only the specified directory is accessible, which protects the rest of the file system from unwanted access!

This provides a secure, standardized way to access specific directories on the remote systems where our compute nodes are running. In this case, we’re giving our Bacalhau job permission to read and write to the /var/www/html directory on each compute node, where nginx serves static files by default.

When we distribute our new HTML file, it will be written to /var/www/html/index.html, replacing the default nginx index page and serving our new content instead.
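To make the scoping idea concrete, here is a small Python sketch of the property we rely on. It is purely illustrative and is not how Bacalhau implements localDirectory: the hypothetical `resolve_inside` helper accepts a requested path only if it resolves to a location inside an allowed root, so tricks like `../` segments or an absolute path cannot reach the rest of the file system.

```python
import os


def resolve_inside(root: str, requested: str) -> str:
    """Hypothetical helper: map `requested` onto a path under `root`.

    Raises PermissionError if the resolved path (after collapsing '..'
    segments and following symlinks) would land outside `root`.
    """
    root_real = os.path.realpath(root)
    # Joining an absolute `requested` discards root_real entirely; the
    # check below catches that case as well as '..' escapes.
    candidate = os.path.realpath(os.path.join(root_real, requested))
    if os.path.commonpath([root_real, candidate]) != root_real:
        raise PermissionError(f"{requested!r} resolves outside {root_real!r}")
    return candidate
```

For example, with a root of /var/www/html, a request for `index.html` resolves inside the web root, while `../../etc/passwd` raises PermissionError.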

The second thing to note is the environment variables, specifically the COMMAND environment variable. Its value is supplied on the CLI when we submit our job with the bacalhau command. COMMAND will hold a Base64-encoded string containing the Python script that does the writing for us, with the HTML file we want on our nodes embedded inside it.
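To see why Base64 is a convenient wrapper here, the Python sketch below encodes a short, made-up script containing quotes, markup, and a newline into a single line of plain ASCII, which can pass through the CLI, a job template, and an environment variable without any escaping, and then decodes it back unchanged.

```python
import base64


def encode_payload(source: str) -> str:
    """Encode text as single-line, ASCII-only Base64."""
    return base64.b64encode(source.encode("utf-8")).decode("ascii")


def decode_payload(payload: str) -> str:
    """Reverse encode_payload, recovering the original text."""
    return base64.b64decode(payload).decode("utf-8")


# Made-up example script with characters that usually need escaping.
sample = 'print("<h1>Hello from Bacalhau!</h1>")\n'
encoded = encode_payload(sample)
print(encoded.isascii(), "\n" in encoded)  # prints: True False
assert decode_payload(encoded) == sample
```

One thing to watch: Python’s `b64encode` never inserts line breaks, whereas the GNU coreutils `base64` command wraps its output every 76 characters by default (`-w 0` disables wrapping), so the two can format longer payloads differently.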

Let’s dive into that a little deeper…

Containerless Code Execution

What makes this process even simpler is something we’ve developed to enhance Bacalhau: containerless Python execution.

This lets Python workloads run directly, without the overhead of building and shipping your own containers. It’s faster, more efficient, and streamlines the entire deployment process.

Depending on what you’re building, submitting a job to a Bacalhau network usually means creating a containerized workload and sending it off for execution on a system within the network. That’s a lot of work just to run a simple Python script!

We’ve put together a pre-baked container image that accepts a Python script submitted as part of the job and runs it on the nodes of your choice, so you don’t have to build a container of your own.

We love using this to quickly put together scripts that work over data on Bacalhau nodes. In this instance, we’re using it to wrap some HTML in a Python script, encode that in Base64, and ship it off to write the HTML to each system.
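We won’t go into the image’s internals here, but conceptually such a runner has very little to do. The Python sketch below is hypothetical and is not the code in our image; it assumes, as described above, that the Base64-encoded script arrives in the COMMAND environment variable, then decodes it and executes it in a fresh namespace.

```python
import base64
import os


def run_encoded_script(env_var: str = "COMMAND") -> dict:
    """Hypothetical runner: decode a Base64-encoded Python script from an
    environment variable and execute it. Returns the script's globals."""
    payload = os.environ.get(env_var, "")
    if not payload:
        raise RuntimeError(f"environment variable {env_var} is empty or unset")
    source = base64.b64decode(payload).decode("utf-8")
    namespace = {"__name__": "__main__"}
    # Compiling with a named pseudo-file makes tracebacks easier to read.
    exec(compile(source, "<submitted-script>", "exec"), namespace)
    return namespace
```

A container entrypoint would simply call `run_encoded_script()`. The submitted script runs with whatever access the job has been granted, which is exactly why scoping file access with localDirectory matters.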

Shell Script

When you use Bacalhau to run containerless Python scripts, you would normally do something like the following to Base64-encode everything before sending it off with the CLI:

bacalhau job run job.yaml --template-vars "fulltext=$(cat script.py | base64)"

That approach works well when your Python file is self-contained, but we want to bundle our HTML with the Python script so it can write the file to the compute nodes during execution. That adds a bit of complexity, but a little shell scripting magic is all it takes to package everything together.

In the previous command, we piped the Python file straight into the Base64 encoder. This time, we’ll use a shell script that reads the index.html file, injects it into a template for the Python script, Base64-encodes the resulting script, and prints it so we can pass it to the Bacalhau CLI. This lets us dynamically bundle the HTML file with the Python script for deployment across our compute nodes.

And it’s only 20 lines or so!
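We haven’t reproduced generate_script.sh here, but its steps translate directly into Python. In this sketch, `SCRIPT_TEMPLATE`, `build_script`, and `build_payload` are hypothetical names, and the path the node-side script writes to is a parameter, because it depends on where your job exposes the web root. Rather than pasting raw HTML into the template, it embeds the page with `repr()`, which always yields a valid Python string literal even if the HTML contains quotes, backslashes, or triple quotes.

```python
import base64

# Hypothetical template for the script that runs on each compute node.
# {html_literal} becomes a Python string literal holding the page;
# {target!r} becomes the quoted path the page is written to.
SCRIPT_TEMPLATE = '''\
html = {html_literal}
with open({target!r}, "w", encoding="utf-8") as f:
    f.write(html)
print("wrote", len(html), "characters to", {target!r})
'''


def build_script(html: str, target: str) -> str:
    """Inject the HTML into the template as a safe Python literal."""
    return SCRIPT_TEMPLATE.format(html_literal=repr(html), target=target)


def build_payload(html_path: str, target: str) -> str:
    """Read the page, build the node-side script, and Base64-encode it."""
    with open(html_path, encoding="utf-8") as f:
        html = f.read()
    script = build_script(html, target)
    return base64.b64encode(script.encode("utf-8")).decode("ascii")
```

To use it the same way as the shell script, you could end a file with `print(build_payload("index.html", TARGET))`, where TARGET is wherever your job mounts the web root, and substitute `$(python3 your_script.py)` for `$(./generate_script.sh)` in the command.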

Deploying Everything, Everywhere, All at Once

So, now that we’ve looked at all of the component parts of the demo, it’s time to deploy our new page everywhere, all at the same time.

You may have noticed that in the job.yaml file, the job type is set to "ops". This means that once we send it to our Bacalhau network, it will run on every node that meets the job's requirements. In this case, we haven’t specified any minimum requirements, so the job will execute on every node in the network, writing our index.html file to each system.
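As a mental model only, and not Bacalhau’s actual scheduler code, the ops behavior can be sketched in Python: keep every node that satisfies all of the job’s constraints. Because `all()` over an empty list is `True`, a job with no constraints, like ours, selects every node. The `Node` type and the label-based `label_equals` constraint are hypothetical simplifications.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List


@dataclass
class Node:
    name: str
    labels: Dict[str, str] = field(default_factory=dict)


Constraint = Callable[[Node], bool]


def label_equals(key: str, value: str) -> Constraint:
    """Hypothetical constraint: the node must carry the label key=value."""
    return lambda node: node.labels.get(key) == value


def select_ops_targets(nodes: List[Node], constraints: List[Constraint]) -> List[str]:
    """An ops-style job targets every node that meets all constraints."""
    return [n.name for n in nodes if all(c(n) for c in constraints)]


nodes = [
    Node("web-1", {"role": "web"}),
    Node("web-2", {"role": "web"}),
    Node("web-3", {"role": "web", "region": "eu"}),
]
print(select_ops_targets(nodes, []))  # ['web-1', 'web-2', 'web-3']
print(select_ops_targets(nodes, [label_equals("region", "eu")]))  # ['web-3']
```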

So, if we run our job with the following command…

bacalhau job run job.yaml --template-vars "fulltext=$(./generate_script.sh)"

…we pass our Base64-encoded Python script with the embedded HTML page that we want to send to each node aannnnddddd…

… we get our brand new asset deployed across all of our servers simultaneously - with just a single command!

Conclusion

Web applications and services grow more complex by the day, but that doesn’t mean that managing them has to match that complexity.

With Bacalhau, you can have a single, unified point of access for deploying, managing, and interacting with any system in your Bacalhau network.

To quote Alan Turing: “This is only a foretaste of what is to come, and only the shadow of what is going to be.” In this piece, we’ve shown you how to publish a single file to a handful of servers, but the exact same process can be applied to deploy any number of assets to any number of servers.

Next week, we’ll follow that line of thought by showing you how you can configure and manage TLS certificates across a fleet of servers with Bacalhau - again using just a handful of commands. You’ll never need to worry about your Let’s Encrypt certificates expiring again!

Get Involved!

We welcome your involvement in Bacalhau. There are many ways to contribute, and we’d love to hear from you. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open-source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!