Computing over Data with DeepSeek-R1

DeepSeek-R1 has taken the world by storm - but what’s the best way to get started, and how do we make sure that the systems we use it with are secure?

Unless you’ve been living somewhere far and distant without internet access this last week (and let’s be honest, there aren’t many places like that these days), you’ll undoubtedly have seen the world being taken by storm by something called DeepSeek-R1.

What’s all of the fuss about?

DeepSeek-R1 is an open-source Large Language Model (LLM). It’s not the first open-source LLM by any measure - but it’s one of the first open-source “reasoning” models - that is, a model which shows its ‘thought process’ when considering an input and before constructing an output. This grants people a window into what’s going on inside the model, something that until now has been largely omitted from GPT-like models and platforms.
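To make that ‘thought process’ concrete, here is a minimal Python sketch that separates the reasoning from the final answer. It assumes the raw output wraps its reasoning in `<think>...</think>` tags, which is how R1 output commonly appears when served through local runtimes; the tag format and the `split_reasoning` helper are assumptions for illustration, not something described in this post.

```python
import re
from typing import Tuple

# Assumption: the model wraps its reasoning in <think>...</think> tags.
THINK_BLOCK = re.compile(r"<think>(.*?)</think>", re.DOTALL)


def split_reasoning(raw: str) -> Tuple[str, str]:
    """Return (reasoning, answer) extracted from raw model output.

    If no <think> block is present, the reasoning is an empty string
    and the whole output is treated as the answer.
    """
    match = THINK_BLOCK.search(raw)
    if match is None:
        return "", raw.strip()
    reasoning = match.group(1).strip()
    # Everything outside the <think> block is the user-facing answer.
    answer = (raw[:match.start()] + raw[match.end():]).strip()
    return reasoning, answer
```

Keeping the two parts separate lets you log or inspect the reasoning without showing it to end users.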

Importantly, it’s also one of the first models that competes on several metrics with some of the more recently available commercial models - and impressively, costs far less to train on less hardware than some of those same commercial models.

This opens a whole arena in self-hosted LLM products - and being open source, DeepSeek can expect that their model will quickly be adopted by the AI community, likely leading to greater and faster innovations across the industry.

Faster, cheaper, better LLMs. That’s why DeepSeek-R1 has taken the world by storm.

I’m sold. How can I use it?

Cloud Platforms

DeepSeek-R1 is available all across the internet, with several Cloud platforms already offering guides and services to get DeepSeek spun up within their infrastructure.

While utilising a managed model may seem like the easy way to get going, the underlying infrastructure can get expensive very quickly. Even though DeepSeek-R1 has versions that are intended to be run on fairly lightweight computers, the larger version can eat up hundreds of GB of memory! Your cloud bill at the end of that month is likely not one that you’ll cherish!
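For a rough sense of where those hundreds of GB come from, the memory needed just to hold a model’s weights is approximately the parameter count multiplied by the bytes used per parameter. The sketch below is a back-of-the-envelope estimate only: the 671 billion parameter figure for the full model and the 7 billion figure for a small distilled variant come from the public model listings rather than from this post, and the estimate ignores the KV cache, activations and runtime overhead.

```python
def weight_memory_gb(num_params: float, bytes_per_param: float) -> float:
    """Approximate memory (in GB) needed to hold the model weights alone."""
    return num_params * bytes_per_param / 1e9


# Assumed figures, not taken from the article:
FULL_R1_PARAMS = 671e9       # full DeepSeek-R1 (total parameters)
SMALL_DISTILL_PARAMS = 7e9   # one of the small distilled variants

full_8bit = weight_memory_gb(FULL_R1_PARAMS, 1.0)         # 8-bit weights
small_4bit = weight_memory_gb(SMALL_DISTILL_PARAMS, 0.5)  # 4-bit weights

print(f"Full model, 8-bit weights:  ~{full_8bit:.0f} GB")
print(f"Small distil, 4-bit weights: ~{small_4bit:.1f} GB")
```

The gap between the two numbers is why the smaller variants fit on a laptop while the full model needs a multi-GPU server.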

You also have the added challenge of getting any data you may want to use with DeepSeek-R1 to the model itself. Of course, it’s not too hard to upload a few text files and run them through the model for some insights - but what if you want to run your logs or some of your business datasets through it? It’s not always possible to get the assurances and security necessary to upload private data to a cloud platform to run it through an AI model - especially if you don’t know where that data may end up.

Locally

Another alternative is to run it locally. With something like Ollama, you can quickly run DeepSeek-R1 on your personal computer (provided it’s not too old, and has a decent array of specs). This is perfect for tinkering, but running the model locally means that if you want to build it into any product or service, it’s pretty much stuck in your laptop.

Not ideal if you plan on ever taking your laptop outside of the house with you…
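If you do want to tinker locally, a minimal sketch of talking to a local Ollama server from Python might look like the following. It assumes Ollama is running on its default port (11434), that you have already pulled a model under the `deepseek-r1` tag, and that the non-streaming `/api/generate` endpoint is used; the helper names `build_request` and `ask` are hypothetical.

```python
import json
import urllib.request

# Assumption: Ollama is listening on its default local address.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_request(prompt: str, model: str = "deepseek-r1") -> urllib.request.Request:
    """Build a non-streaming generate request for a local Ollama server."""
    payload = {"model": model, "prompt": prompt, "stream": False}
    return urllib.request.Request(
        OLLAMA_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, model: str = "deepseek-r1") -> str:
    """Send the prompt and return the generated text (requires a running server)."""
    with urllib.request.urlopen(build_request(prompt, model)) as resp:
        return json.loads(resp.read())["response"]
```

Calling `ask("Summarise this log line: ...")` returns the model’s full text output. Note that the endpoint is `localhost`, which is exactly the limitation described above: nothing outside your machine can reach it.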

So, what can we do about that?

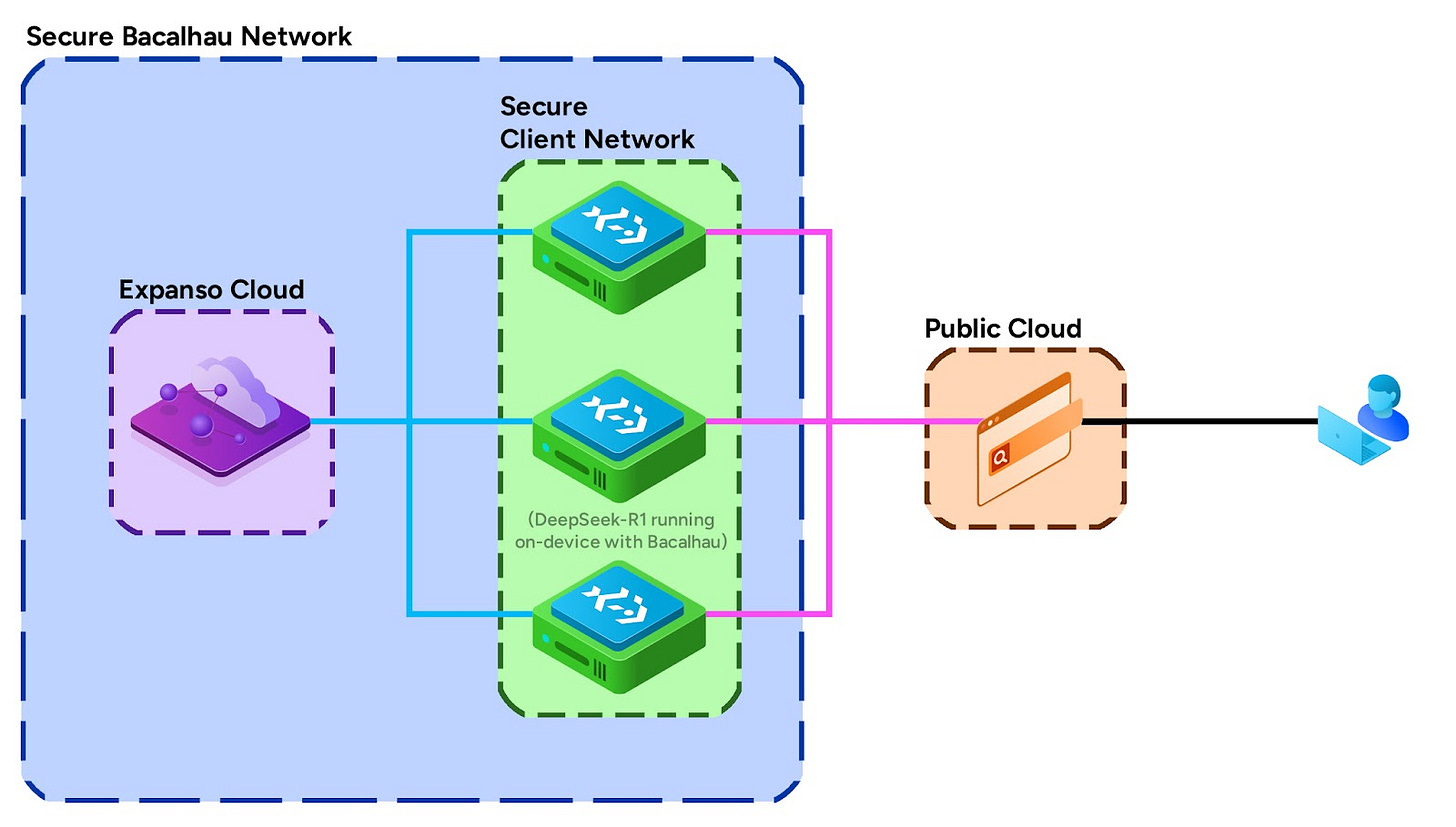

Well, there is a way to have the best of both worlds. To run DeepSeek-R1 on your own infrastructure next to your data, but still be able to interact with it over the internet.

And that’s through Compute over Data (CoD) with Bacalhau! In fact, we recorded a demo showing exactly how you could run open source LLMs on your existing infrastructure with just a single CLI command.

But why is this approach any better than running a managed service or hosting R1 locally? Well, there’s a bunch of reasons and advantages:

Your data, secure on your hardware

With Bacalhau, you can deploy an LLM to any system that you want to work with AND work directly with the data on that system without the risk or worry of data inadvertently being accessed in ways that it shouldn’t be.

With Bacalhau, you can specify exactly what kind of data you want your model to be able to access, and whether it should be able to send that information somewhere else, or nowhere else at all.

No more worrying about 3rd parties getting a hold of your information!

Maximum utilisation of your existing systems

It may be tempting to spin up some new infrastructure to try out the latest in LLMs with your data. But, in all likelihood, you already have a tonne of compute available to you to spin up these models - your existing infrastructure!

With Bacalhau, deploying your models to your existing hardware gives you the ability to maximise the utilisation of your compute without any additional infrastructure costs for your explorations and applications.

One interface, any kind of model

With Bacalhau, you get a single unified interface for deploying any kind of model you want. While DeepSeek-R1 has piqued a great deal of interest in the wider technology community, it may not necessarily be the model you want to adopt for your applications in the long-run.

If you do decide to switch out the model you use, or want to use a bunch of them simultaneously, it gets tricky managing those workloads and all of that infrastructure over time.

With Bacalhau, you can run models alongside one another on the same, or different datasets across your infrastructure. Whether you want to run your models in the Cloud, on-premises or some mix of both, the way you do it is exactly the same! No more struggling to figure out what’s running where, or how!

How does it work?

Getting started with LLMs on a Bacalhau Network couldn’t be simpler. By installing Bacalhau on the systems where you want to run LLMs, you can deploy any containerised application to those systems in a matter of seconds.

For LLMs, we’ve put together a demo application that we’ll be releasing next week to give people a real quick-start with DeepSeek-R1 on an enterprise-grade distributed compute system.

With an Expanso Cloud Managed Orchestrator sitting at the heart of your Bacalhau network, deploying DeepSeek-R1 is as simple as running a single Bacalhau CLI command.
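The exact command used in the demo isn’t shown here, but as a rough illustration of the shape such a one-liner could take, the sketch below assembles a `bacalhau docker run` invocation in Python. The image name is a placeholder, the `bacalhau_run_command` helper is hypothetical, and the `--gpu` flag should be checked against `bacalhau docker run --help` for your installed version.

```python
from typing import List


def bacalhau_run_command(image: str, container_args: List[str], gpus: int = 0) -> List[str]:
    """Assemble a `bacalhau docker run` command as an argument list.

    This only builds the command; it does not execute anything.
    """
    cmd = ["bacalhau", "docker", "run"]
    if gpus > 0:
        # Assumption: request GPUs with --gpu; verify against your CLI version.
        cmd += ["--gpu", str(gpus)]
    # Everything after "--" is passed to the container as its command.
    cmd += [image, "--", *container_args]
    return cmd


# Placeholder image and arguments, purely for illustration.
example = bacalhau_run_command("example.org/llm-demo:latest", ["serve"], gpus=1)
print(" ".join(example))
```

Building the command as a list makes it easy to hand to `subprocess.run` later without worrying about shell quoting.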

Once on your system, Bacalhau creates a bi-directional connection between you and your data, enabling you to interact with DeepSeek-R1 as though it were a managed model operating somewhere in the Cloud - when it’s actually running on your hardware, with your data.

That’s great! How can I get started?

While we’re dotting the I’s and crossing the T’s on our DeepSeek-R1 demo, you can check out the first version running with Llama 3.2 that we demonstrated in our most recent Community Hour session.

We’re also giving a talk about the benefits of running LLMs with Compute Over Data in partnership with ODSC on the 19th of February. If you want to get the deep-dive and have the opportunity to ask questions, sign up and join us!

Conclusion

Very few would deny that we live in exciting and interesting times with AI - and Large Language Models are one of the most impressive and adaptive applications of AI to come to the fore over the last decade.

That said, there’s still a tonne of things to work out. How do we keep our data secure? How do we simplify model development and deployment? How can we scale LLMs to work with our existing products and customers?

All of these challenges can be tackled by Bacalhau, and we’re very much looking forward to sharing our knowledge and insights with you.

Get Involved!

We welcome your involvement in Bacalhau. There are many ways to contribute, and we’d love to hear from you. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open-source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!
