Bacalhau v1.7.0 - Day 2: Scaling Your Compute Jobs with Bacalhau Partitioned Jobs

Distributed, parallelized workloads just got even easier!

This post is part of the 5 Days of Bacalhau 1.7.0 series. Be sure to go back and catch any posts that you missed!

We read it every day: big data is everywhere and growing constantly. Companies are sitting on goldmines of data, both their own and data they have retrieved from elsewhere. However, that data is often too large to process on a single instance. In the mid-2010s, the IT community turned to clusters of machines working in parallel to increase throughput, leveraging innovations such as Docker and Kubernetes. That approach, however, was typically compute-focused and limited to a single data center. To this day, the community needs more flexibility.

The most pressing challenges include:

How do you split your data so that each node can work efficiently?

How do you tell each node exactly which part of the data it is responsible for?

What happens if one of the computing nodes fails?

What happens when part of your processing fails?

How do you ensure consistency and reliability?

Fear no more! The new Partitioned Jobs feature in Bacalhau v1.7.0 addresses these challenges.

What are Partitioned Jobs?

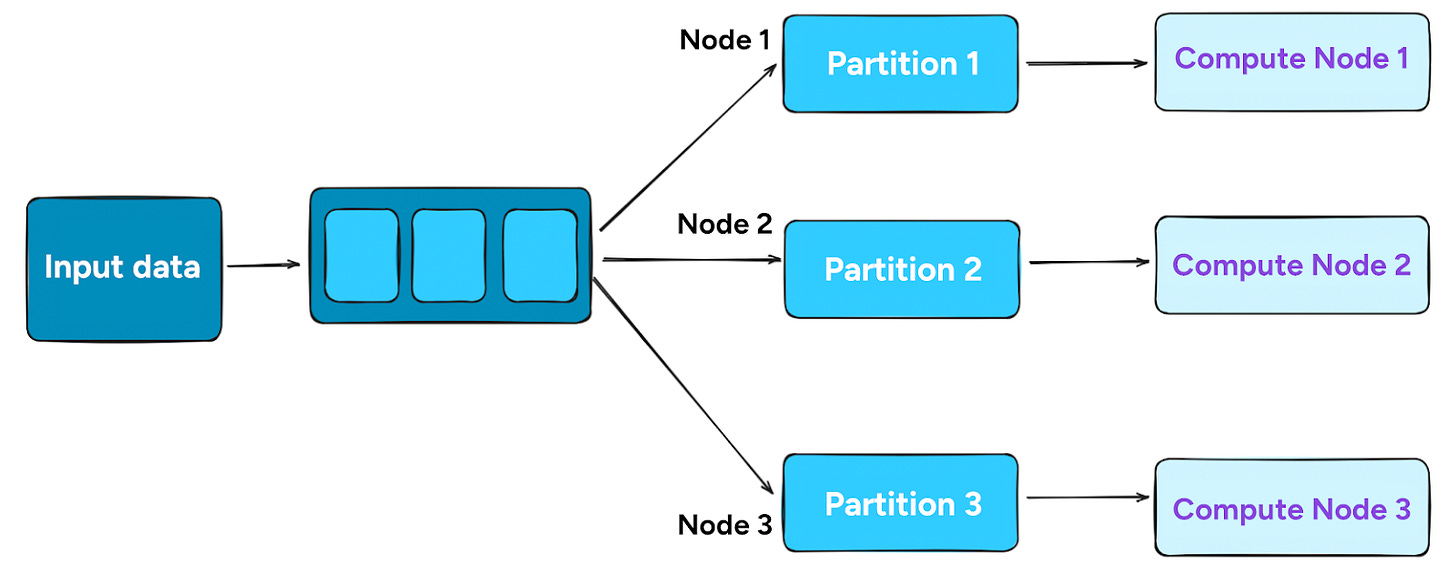

When processing large datasets, splitting the work across multiple nodes can significantly improve performance and resource utilization. Bacalhau's new Partitioned Jobs feature makes this process straightforward by:

Distributing work across a compute pool

Managing partition assignments and tracking

Handling failures at a partition level

Providing execution context to each partition

Core Features

Let's look at the core features of Partitioned Jobs.

1. Partition Management

Bacalhau v1.7.0 handles two key aspects of partition management: distribution and independent execution.

Distribution

When you request N partitions, Bacalhau creates partitions numbered 0 to N-1 and assigns them to available compute nodes that satisfy the job's data requirements and any other specified constraints.

Bacalhau maintains consistent partition assignments throughout the job lifecycle, and it ensures that each partition finishes correctly.

Independent Execution

Each partition runs independently, can be processed on different nodes, and manages its own lifecycle and error handling.

2. Error Handling and Recovery

A key strength of the Partitioned Jobs feature is its approach to error handling: partition-level isolation confines a failure to the partition in which it occurred. This lets the system keep processing the healthy partitions while the failed ones recover.

For example, let’s say you have a job subdivided into five partitions. While the job runs, its status might look like the following (shown here in simplified form):

Job with 5 partitions:

Partition 0: ✓ Completed

Partition 1: ✓ Completed

Partition 2: ✓ Completed

Partition 3: ✗ Failed -> Scheduled for retry

Partition 4: ✓ Completed

In this case, Partition 3 failed. However, the system automatically recovers by retrying only that partition. A few minutes later, the job status is as follows:

Job with 5 partitions:

Partition 0: ✓ Completed

Partition 1: ✓ Completed

Partition 2: ✓ Completed

Partition 3: ✓ Completed

Partition 4: ✓ Completed

Bacalhau automatically recovers and takes care of rescheduling, even over widely distributed systems. Neat!
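To make the idea of partition-level retry concrete, here is a toy model in Python. It is not Bacalhau's scheduler, only a minimal sketch of the behavior described above: partitions that succeed keep their results, and only the failed ones are attempted again. The names `run_partitions`, `flaky_work`, and `max_attempts` are hypothetical and chosen for illustration.

```python
from typing import Callable, Dict, List


def run_partitions(
    count: int,
    work: Callable[[int], str],
    max_attempts: int = 3,
) -> Dict[int, str]:
    """Toy model: run every partition, retrying only the ones that fail."""
    results: Dict[int, str] = {}
    pending: List[int] = list(range(count))  # partitions 0 to count-1

    for _ in range(max_attempts):
        still_failing: List[int] = []
        for index in pending:
            try:
                # A completed partition keeps its result and is never rerun.
                results[index] = work(index)
            except Exception:
                still_failing.append(index)
        pending = still_failing
        if not pending:
            break

    if pending:
        raise RuntimeError(
            f"partitions {pending} failed after {max_attempts} attempts"
        )
    return results


# Hypothetical workload: partition 3 fails on its first attempt only.
attempts: Dict[int, int] = {}


def flaky_work(index: int) -> str:
    attempts[index] = attempts.get(index, 0) + 1
    if index == 3 and attempts[index] == 1:
        raise RuntimeError("transient failure")
    return f"partition {index} done"


results = run_partitions(5, flaky_work)
```

In this sketch, partition 3 runs twice while every other partition runs exactly once, which mirrors the status listings above.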

3. Execution Context

Each partition's execution learns its role in the parallel run through environment variables. Every execution has access to the following information:

BACALHAU_PARTITION_INDEX # Current partition (0 to N-1)

BACALHAU_PARTITION_COUNT # Total number of partitions

# Additional context variables

BACALHAU_JOB_ID # Unique job identifier

BACALHAU_JOB_TYPE # Job type (Batch/Service)

BACALHAU_EXECUTION_ID # Unique execution identifier

Thus, each node can make smart decisions about the data it needs from the pool and any special execution criteria. There is no need to check in with a central catalog or other jobs, resulting in more reliable and faster parallel execution.
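As an illustration of how a job might use these variables, the following Python sketch reads `BACALHAU_PARTITION_INDEX` and `BACALHAU_PARTITION_COUNT` and selects its share of a file list. The helper names (`partition_context`, `my_share`), the file names, and the fallback to a single partition when the variables are unset are assumptions made for this example, not part of Bacalhau itself.

```python
import os
from typing import List, Mapping, Sequence, Tuple


def partition_context(env: Mapping[str, str] = os.environ) -> Tuple[int, int]:
    """Read this execution's partition index and the total partition count.

    Falling back to a single partition (index 0 of 1) when the variables
    are missing is an assumption that makes local testing convenient.
    """
    index = int(env.get("BACALHAU_PARTITION_INDEX", "0"))
    count = int(env.get("BACALHAU_PARTITION_COUNT", "1"))
    if not 0 <= index < count:
        raise ValueError(f"partition index {index} is outside 0..{count - 1}")
    return index, count


def my_share(items: Sequence[str], index: int, count: int) -> List[str]:
    """Return the items this partition should process.

    Sorting first gives every partition the same view of the list, so the
    shares do not overlap and together cover every item exactly once.
    """
    ordered = sorted(items)
    return [item for pos, item in enumerate(ordered) if pos % count == index]


# Hypothetical input files; a real job would list its mounted input directory.
files = ["a.csv", "b.csv", "c.csv", "d.csv", "e.csv"]

index, count = partition_context(
    {"BACALHAU_PARTITION_INDEX": "1", "BACALHAU_PARTITION_COUNT": "3"}
)
print(my_share(files, index, count))  # ['b.csv', 'e.csv']
```

Because each execution derives its share from the same sorted list and its own index, no coordination between partitions is needed.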

Using Partitioning in Your Jobs

To use partitioning, specify the number of partitions when running your job. Here is how you can specify three partitions:

bacalhau docker run --count 3 ubuntu -- sh -c 'echo Partition=$BACALHAU_PARTITION_INDEX'

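In the command above, the --count flag sets the number of partitions, and it is what triggers partitioning. The single quotes keep your local shell from expanding $BACALHAU_PARTITION_INDEX, so the variable is resolved inside each partition's container instead.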

Here is how to do the same via YAML:

    # partition.yaml
    Name: Partitioned Job
    Type: batch
    Count: 3
    Tasks:
      - Name: main
        Engine:
          Type: docker
          Params:
            Image: ubuntu
            Parameters:
              - sh
              - -c
              - echo Partition=$BACALHAU_PARTITION_INDEX

To run the YAML file, execute the following:

bacalhau job run partition.yaml

That’s it! Bacalhau automatically takes care of scheduling and everything else.

Technical Benefits

Bacalhau's partitioning feature offers significant technical advantages for processing large datasets and compute-intensive tasks:

Enhanced performance and scalability: By distributing work across multiple nodes, partitioning enables horizontal scaling and parallel processing. The job is no longer bound by the I/O and memory bandwidth of a single machine, which improves utilization and reduces processing time.

Increased reliability and resilience: Partitioning provides granular failure recovery by isolating errors in individual partitions. If one partition fails, the system continues processing the other partitions without interruption. Bacalhau's built-in retry mechanism ensures that failed partitions are automatically rescheduled, enhancing the resilience of your jobs. This approach preserves results from completed partitions, preventing unnecessary reprocessing.

No rewriting code: Your data processing jobs do not require a new SDK. If you're already using WASM or containers (or just about any other execution environment), we support it!

Getting Started with Partitioned Jobs

If you’d like to try this example on your own, dive right in! Install Bacalhau and give it a shot.

By the way, if you don’t have a network and you would still like to try it out, we recommend using Expanso Cloud. Also, if you'd like to set up a cluster on your own, you can do that too (we have setup guides for AWS, GCP, Azure, and many more 🙂).

What's Next?

Start processing your data today:

Identify your natural data groupings (dates, regions, categories)

Choose the matching partition strategy

Let Bacalhau handle the distribution
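One possible way to map natural groupings such as regions or dates onto partitions is to hash each grouping key and take the result modulo the partition count. The sketch below assumes this hash-based strategy; `partition_for_key` and the region names are hypothetical. It uses SHA-256 instead of Python's built-in `hash()`, because the built-in string hash is randomized per process and would give different answers on different nodes.

```python
import hashlib
from typing import List


def partition_for_key(key: str, count: int) -> int:
    """Map a grouping key (a date, region, category) to a partition index.

    SHA-256 is deterministic across processes and machines, so every node
    agrees on which partition owns which key.
    """
    if count < 1:
        raise ValueError("count must be at least 1")
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % count


def keys_for_partition(keys: List[str], index: int, count: int) -> List[str]:
    """Return the grouping keys that belong to the given partition."""
    return [key for key in keys if partition_for_key(key, count) == index]


# Hypothetical grouping keys.
regions = ["us-east", "us-west", "eu-central", "ap-south", "sa-east"]

for index in range(3):
    print(index, keys_for_partition(regions, index, 3))
```

Hashing keeps all records for one key in the same partition, but it does not guarantee evenly sized partitions. When the groupings are few or heavily skewed, an explicit assignment of keys to indexes may balance the load better.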

Ready to simplify your distributed data processing? Check out our documentation for more examples and detailed guides.

Join our community to share your data processing stories and learn from others!

Get Involved!

We welcome your involvement in Bacalhau. There are many ways to contribute, and we’d love to hear from you. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open-source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us or get your license on Expanso Cloud!