Anonymize and Process Sensitive Data with Bacalhau


The twin threats of escalating data breaches and intensified regulatory scrutiny are converging into a perfect storm for businesses. The first half of 2024 saw a jaw-dropping 409% increase in data breaches in the U.S. alone. Notably, attacks on financial service companies skyrocketed by 67% year-over-year, with healthcare attacks close behind.

In addition to security vulnerabilities, ramped-up enforcement of regulatory frameworks such as the General Data Protection Regulation (GDPR) is putting pressure on data processors to keep their data secure. In 2024, companies faced a record-breaking €2.1 billion in fines under the GDPR alone.

With increased scrutiny from regulators and an ever more determined wave of cyber adversaries, organizations are racing to improve data privacy and ensure compliance.

Bacalhau: A Key Factor for Better Data Privacy

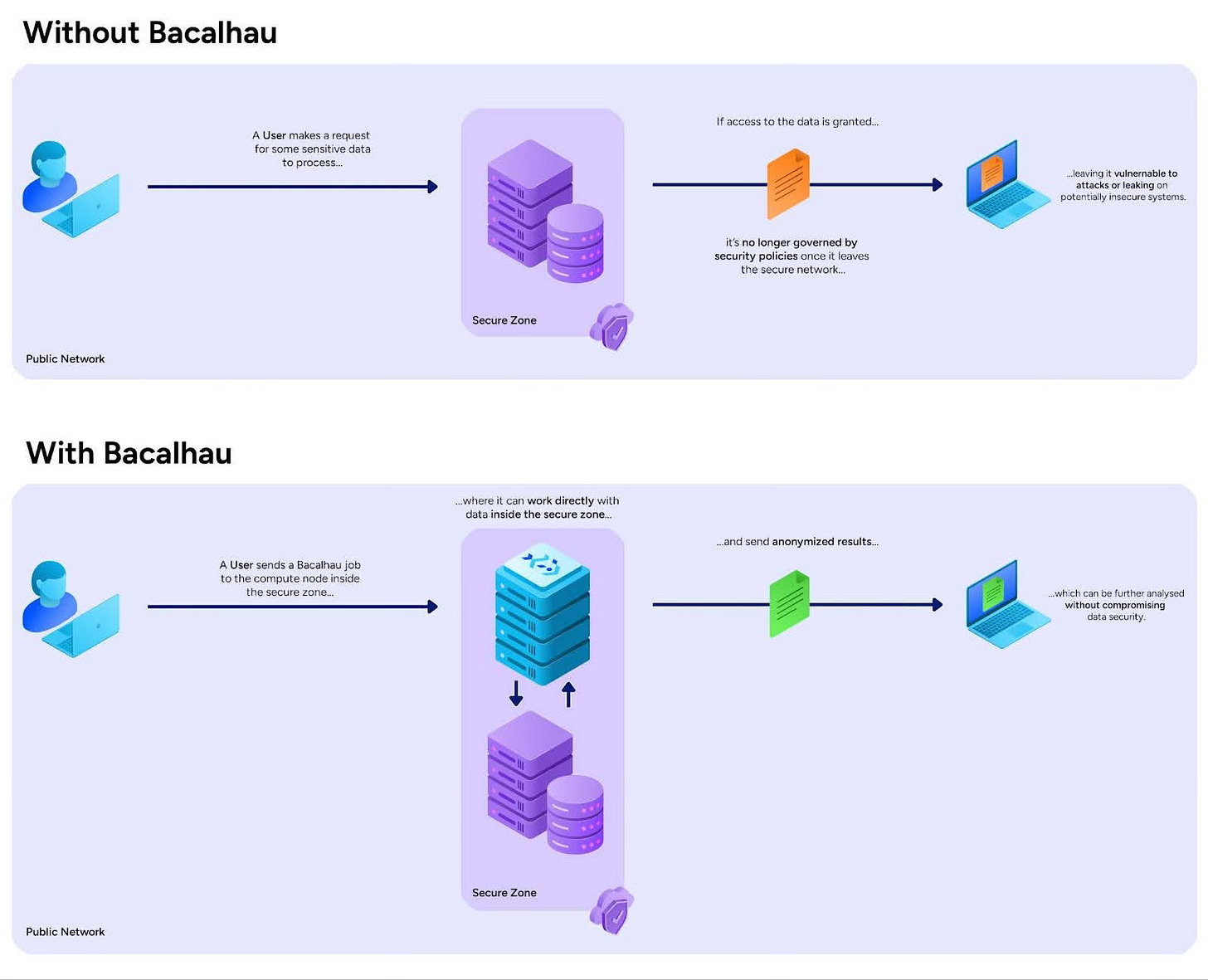

Bacalhau, the distributed compute-over-data platform, is designed to minimize data movement by executing jobs where the data is located, which improves data security because less sensitive data travels across networks. This localized processing means sensitive data can be analyzed in place, so Personally Identifiable Information (PII) remains governed and protected within its original, secure, and regulated environment.

Building on this, Bacalhau makes it possible to implement data sanitization systems that anonymize PII before it leaves the environment where it was generated, helping you comply with industry data localization regulations.

Data Anonymization Workflows

You can create custom scripts and workflows to anonymize your data based on the unique data structure within your systems. Hashing algorithms, used in frameworks such as Neosync, can help anonymize sensitive data efficiently. Using Bacalhau, you can extend these anonymization processes across all of your data-generating systems and edge devices, ensuring that only processed and anonymized data is moved.

Figure 1: Architecture diagram of your data anonymization workflow with Bacalhau
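As a minimal sketch of hashing-based anonymization (not Neosync's or Bacalhau's API), the helper below uses a keyed hash, HMAC-SHA-256 from Python's standard library. The `pseudonymize` name and the `PSEUDONYM_KEY` value are hypothetical. The same identifier always maps to the same token, so records can still be grouped, and because the key stays inside the secure environment, an attacker cannot recover identifiers simply by hashing a list of likely values.

```python
import hashlib
import hmac

# Hypothetical secret key; in practice, load it from a secret store on the node
PSEUDONYM_KEY = b"replace-with-a-secret-key"


def pseudonymize(value: str, key: bytes = PSEUDONYM_KEY) -> str:
    """Return an HMAC-SHA-256 hex token for an identifier.

    The same value and key always produce the same 64-character token,
    so pseudonymized records can still be grouped or joined.
    """
    return hmac.new(key, value.encode("utf-8"), hashlib.sha256).hexdigest()


if __name__ == "__main__":
    token = pseudonymize("meter-0001")
    print(len(token))                           # 64
    print(token == pseudonymize("meter-0001"))  # True
```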

As an example, smart meters collect large amounts of user data, which can raise data privacy concerns. If an unauthorized third party accesses this data, they could use it to learn about users' locations, activities, and lifestyles. While this data is important for some internal operations, most use cases do not need the full details, so the sensitive fields can be anonymized or removed without impacting the business.
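The smart meter case can be sketched in a few lines. In this minimal, hypothetical example (the `minimize_reading` helper and the `meter_id`, `address`, `timestamp`, and `kwh` fields are illustrative, not taken from the example repo), a single reading is reduced before it leaves the device: the meter ID is replaced with its SHA-256 hash, the address is dropped, and the timestamp is rounded down to the hour.

```python
import hashlib
from datetime import datetime


def minimize_reading(reading: dict) -> dict:
    """Return a reduced copy of a meter reading that is safer to move off-device."""
    ts = datetime.fromisoformat(reading["timestamp"])
    return {
        # Pseudonymize: readings from one meter still share the same token
        "meter_id": hashlib.sha256(reading["meter_id"].encode("utf-8")).hexdigest(),
        # Generalize: round the timestamp down to the start of the hour
        "timestamp": ts.replace(minute=0, second=0, microsecond=0).isoformat(),
        # Keep the consumption value that downstream analysis needs
        "kwh": reading["kwh"],
        # Suppress: "address" is intentionally not copied
    }


if __name__ == "__main__":
    raw = {
        "meter_id": "meter-0001",
        "address": "1 Example Street",
        "timestamp": "2024-03-01T14:37:00",
        "kwh": 0.42,
    }
    print(minimize_reading(raw))
```

Rounding to the hour is only one choice: coarser buckets hide more behavior but leave less detail for analysis.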

Reducing Your Risk with Bacalhau

So, how can Bacalhau help keep data secure, whilst still enabling people to build the tools and services that benefit from user data at scale? Anonymize your users’ data!

We’ve put together a short example to demonstrate how you can create a data anonymization workflow in just a few minutes to do exactly that.

In this example, we’ll use AWS to spin up a few EC2 instances, join them into a Bacalhau network, and run scripts on them that generate example PII.

Figure 2: Some of the sample data generated on each node

This setup lets us run data anonymization workflows as Bacalhau jobs on a subset of the instances or on all of them at once.
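The PII-generating scripts that run on the compute nodes live in the example repo and are not reproduced here. If you want to test an anonymization script locally first, a hypothetical stand-in like the one below writes a `data.csv` with an `LCLid` identifier column (the file and column that the anonymization script in this post reads) plus made-up `name`, `email`, `timestamp`, and `kwh` columns. This column layout is an assumption, not the repo's actual format.

```python
import csv
import random
from datetime import datetime, timedelta

# Hypothetical layout: only the file name and LCLid column match this post's script
FIELDS = ["LCLid", "name", "email", "timestamp", "kwh"]


def generate_rows(n: int, seed: int = 0) -> list[dict]:
    """Generate n fake half-hourly meter readings that contain example PII."""
    rng = random.Random(seed)
    start = datetime(2024, 1, 1)
    rows = []
    for i in range(n):
        meter = rng.randint(1, 5)
        rows.append({
            "LCLid": f"meter-{meter:04d}",
            "name": f"Customer {meter}",
            "email": f"customer{meter}@example.com",
            "timestamp": (start + timedelta(minutes=30 * i)).isoformat(),
            "kwh": round(rng.uniform(0.0, 2.0), 3),
        })
    return rows


def write_csv(path: str, rows: list[dict]) -> None:
    """Write rows to a CSV file with a header line."""
    with open(path, "w", newline="") as f:
        writer = csv.DictWriter(f, fieldnames=FIELDS)
        writer.writeheader()
        writer.writerows(rows)


if __name__ == "__main__":
    write_csv("data.csv", generate_rows(48))
```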

Spinning Up EC2 Instances

To make this process as quick as possible, we’ve published an example repo that creates and provisions a private Bacalhau network of AWS instances that generate example data.

To get started, clone this GitHub repository to access the example scripts.

Install the AWS CLI and configure your credentials in your environment. Once that’s set up, the example scripts in the cloned repo will connect to your AWS account to run commands and create AWS resources on your behalf.

To start the instance creation process, navigate to the repository folder `examples/PII-anonymization` and execute the following command:

```bash
python createinstance.py
```

This script creates a key pair, a security group, and four EC2 instances. One instance is tagged as the requester node; the other three are compute nodes that launch PII-generating scripts at startup. Take note of the requester node's IP address, which this command prints.
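The repository script is the reference for what actually gets created. As a rough, hypothetical sketch of the role split it describes, the function below plans one requester and three compute nodes as keyword arguments for boto3's `EC2.Client.run_instances`, tagging each group with its role. The `Role` tag key and `bacalhau-pii-demo` name prefix are assumptions, and the code makes no AWS calls; you would add `ImageId`, `InstanceType`, `KeyName`, `SecurityGroupIds`, and `UserData` before passing each dict to `run_instances`.

```python
def launch_plan(total: int = 4, prefix: str = "bacalhau-pii-demo") -> list[dict]:
    """Plan one requester and (total - 1) compute nodes as run_instances kwargs.

    Each dict still needs ImageId, InstanceType, KeyName, SecurityGroupIds and
    UserData before it can be passed to boto3's ec2_client.run_instances(**kwargs).
    """
    if total < 2:
        raise ValueError("need at least one requester and one compute node")
    plan = []
    for role, count in (("requester", 1), ("compute", total - 1)):
        plan.append({
            "MinCount": count,
            "MaxCount": count,
            "TagSpecifications": [{
                "ResourceType": "instance",
                "Tags": [
                    {"Key": "Name", "Value": f"{prefix}-{role}"},
                    # Hypothetical tag used to tell node roles apart later
                    {"Key": "Role", "Value": role},
                ],
            }],
        })
    return plan


if __name__ == "__main__":
    for kwargs in launch_plan():
        print(kwargs["MinCount"], kwargs["TagSpecifications"][0]["Tags"][1]["Value"])
```

Launching the requester separately from the compute group keeps the tag on each call uniform, since `TagSpecifications` applies to every instance a single `run_instances` call creates.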

Once the script has finished running, we’ll have a private Bacalhau network ready to run Bacalhau jobs.

Execute Bacalhau Job

You can run Python workflows directly on your Bacalhau network, reusing existing scripts without extensive refactoring.

Here is a data cleaning and PII anonymization script, `cleanpii.py`:

```python
import hashlib

import pandas as pd

# Load the sample data generated on this node into a DataFrame
df = pd.read_csv('data.csv')

# Replace every value in the LCLid identifier column with its SHA-256 hex digest
df['LCLid'] = df['LCLid'].apply(lambda x: hashlib.sha256(str(x).encode()).hexdigest())

# Write the anonymized data, omitting the DataFrame index column
df.to_csv('anonymized.csv', index=False)
```

This script reads the sample data on each node and replaces the identifying values in the `LCLid` column with SHA-256 hashes; extend it to any other fields you have identified as containing PII. You can then send this processed data to downstream locations such as an S3 bucket, database, or queue.
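The script above hashes `LCLid` with plain SHA-256. If identifiers follow a guessable pattern, anyone can hash candidate values and match them, so a keyed hash is a safer default. The sketch below is a hypothetical variant, not the repo's script: it streams the CSV with the standard library instead of pandas, applies HMAC-SHA-256 to the columns in `HASH_COLUMNS`, and drops the columns in `DROP_COLUMNS`. Both column lists and the `PII_HASH_KEY` environment variable are assumptions to adapt to your data.

```python
import csv
import hashlib
import hmac
import io
import os

# Hypothetical configuration: columns to pseudonymize and columns to drop entirely
HASH_COLUMNS = ["LCLid"]
DROP_COLUMNS = {"name", "email"}


def anonymize_stream(fin, fout, key: bytes) -> int:
    """Copy CSV rows from fin to fout, hashing HASH_COLUMNS and removing DROP_COLUMNS.

    Rows are processed one at a time, so memory use does not grow with file size.
    Returns the number of data rows written.
    """
    reader = csv.DictReader(fin)
    fields = [c for c in (reader.fieldnames or []) if c not in DROP_COLUMNS]
    # extrasaction="ignore" silently skips the dropped columns still present in each row
    writer = csv.DictWriter(fout, fieldnames=fields, extrasaction="ignore")
    writer.writeheader()
    count = 0
    for row in reader:
        for col in HASH_COLUMNS:
            if col in row:
                row[col] = hmac.new(key, row[col].encode("utf-8"), hashlib.sha256).hexdigest()
        writer.writerow(row)
        count += 1
    return count


if __name__ == "__main__":
    # Hypothetical environment variable holding the secret key on each node
    key = os.environ.get("PII_HASH_KEY", "change-me").encode("utf-8")
    sample = io.StringIO("LCLid,name,email,kwh\nmeter-0001,Ana,ana@example.com,0.42\n")
    out = io.StringIO()
    anonymize_stream(sample, out, key)
    print(out.getvalue())
    # On a node, pass open("data.csv", newline="") and
    # open("anonymized.csv", "w", newline="") instead of the in-memory buffers
```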

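To execute your Bacalhau job, first get your requester node's IP address, which the `createinstance` script printed. Then run the following commands, which point the Bacalhau CLI at your requester node and submit your Python-based Bacalhau job. The `--job-type ops` flag runs the job on every compute node that matches the job's constraints, so each node anonymizes its own data locally: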

```bash
bacalhau config set node.clientapi.host "<YOUR REQUESTER IP>"
bacalhau exec python --code cleanpii.py --job-type ops --publisher local
```

Retrieve Anonymized Data

Once these jobs have run on our Bacalhau nodes, the PII will have been sanitized and be ready for downstream processing. Sensitive identifiers are hashed before the data leaves each node, which helps you comply with privacy regulations and reduces the potential for interception and leakage outside of a secure environment.
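Because the anonymized output is what leaves the node, it can be worth checking it before publishing. A minimal, hypothetical check (the `find_leaks` helper is not part of Bacalhau) looks for any raw identifier that still appears in the output text and for anything shaped like an email address:

```python
import re

# Deliberately loose pattern: flags anything that looks like an email address
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")


def find_leaks(output_text: str, raw_identifiers: set[str]) -> list[str]:
    """Return raw identifiers and email-like strings that appear in output_text."""
    leaks = sorted(i for i in raw_identifiers if i and i in output_text)
    leaks.extend(EMAIL_RE.findall(output_text))
    return leaks


if __name__ == "__main__":
    anonymized = "LCLid,kwh\n9b2e41,0.42\nmeter-0002,1.10\n"
    print(find_leaks(anonymized, {"meter-0001", "meter-0002"}))  # ['meter-0002']
```

Substring matching can flag false positives for very short identifiers, and the email pattern is intentionally broad; treat a hit as a reason to inspect the output rather than as proof of a leak.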

To view some of this data, download your job results:

```bash
# Get Job ID
bacalhau job list

# Download the job's results
bacalhau job get <YOUR_JOB_ID>
```

After the download has finished, we can see the anonymized CSV file in the results folder.

Figure 3: Processed and Anonymized data
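The layout of the downloaded results folder can vary between Bacalhau versions and jobs, so rather than hard-coding a path, you can search it. This hypothetical helper recursively finds every `anonymized.csv` under a directory and reports its data row count; the default file name matches the anonymization script in this post.

```python
import csv
import sys
from pathlib import Path


def find_outputs(results_dir: str, name: str = "anonymized.csv") -> list[Path]:
    """Recursively find files called `name` under results_dir, sorted by path."""
    return sorted(Path(results_dir).rglob(name))


def count_rows(path: Path) -> int:
    """Count data rows in a CSV file, excluding the header row."""
    with path.open(newline="") as f:
        return max(sum(1 for _ in csv.reader(f)) - 1, 0)


if __name__ == "__main__":
    # Pass the directory the results were downloaded into; defaults to "."
    root = sys.argv[1] if len(sys.argv) > 1 else "."
    for path in find_outputs(root):
        print(f"{path}: {count_rows(path)} rows")
```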

Once you’ve finished exploring our example, please don’t forget to clean up the AWS resources you created! The repository includes a helper script that terminates the EC2 instances spun up during this tutorial:

```bash
python terminateinstances.py
```

Conclusion

Data is more valuable than ever, but so are the risks of mishandling or exposing sensitive information. As our ability to collect and analyze data grows, the potential attack surface for adversaries expands as well. Organizations that want to make the most of their data will have to balance end-user security against the risk of missing timely key insights.

Bacalhau offers a solution that helps you strike this balance. By executing your workloads within secure environments, Bacalhau ensures you have a single, governed point of interaction for your sensitive data. With Bacalhau’s Compute Over Data approach, you can harness the power of your data while maintaining the highest standards of privacy and security.

How to Get Involved

We welcome your involvement in Bacalhau. There are many ways to contribute, and we’d love to hear from you. Please reach out to us at any of the following locations.

Commercial Support

While Bacalhau is open-source software, the Bacalhau binaries go through the security, verification, and signing build process lovingly crafted by Expanso. You can read more about the difference between open-source Bacalhau and commercially supported Bacalhau in our FAQ. If you would like to use our pre-built binaries and receive commercial support, please contact us!
